Graph Convolutional Network (GCN) by Hand ✍️

Graph Convolutional Networks (GCNs), introduced by Thomas Kipf and Max Welling in 2017, have emerged as a powerful tool in the analysis and interpretation of data structured as graphs.

GCNs have found many successful applications:

Social network analysis

Recommendation systems

Biological network interpretation

Drug discovery

Molecular chemistry

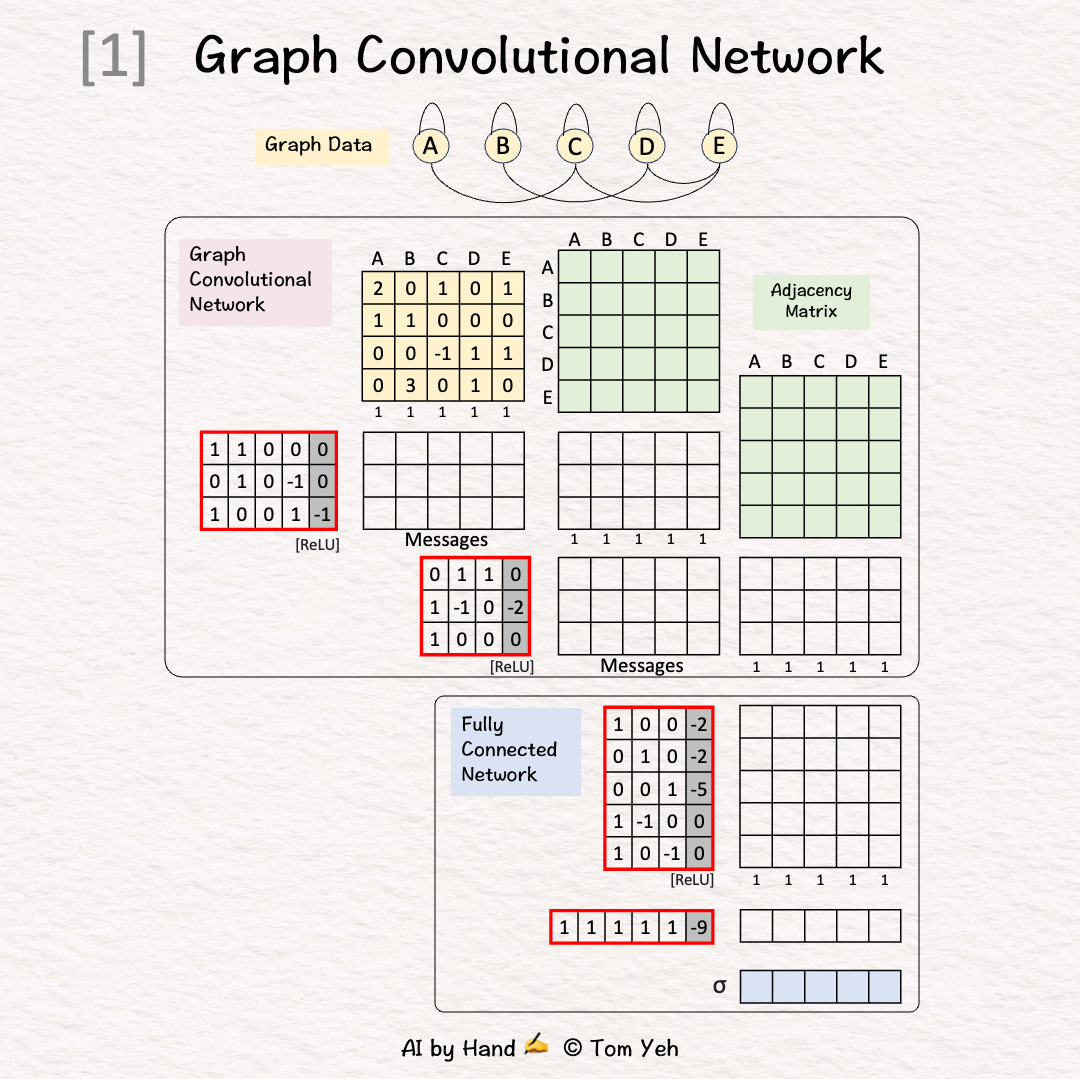

This exercise demonstrates how a GCN works in a simple application: binary node classification.

Goal

Predict whether a node in a graph is X.

Architecture

🟪 Graph Convolutional Network (GCN)

GCN1(4,3)

GCN2(3,3)

🟦 Fully Connected Network (FCN)

Linear1(3,5)

ReLU

Linear2(5,1)

Sigmoid
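A minimal end-to-end sketch of this architecture in plain Python, mainly useful for checking that the matrix shapes line up from layer to layer. It already applies the simplifications listed below (unnormalized adjacency matrix with self-loops, ReLU on the messages before pooling). The graph (apart from the A-C edge mentioned in the walkthrough), the input features, all weights, and the helper names are hypothetical; they are not the numbers from the figures.

```python
import math
import random


def matmul(a, b):
    """Matrix product for matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def dense(h, w, b, relu=True):
    """H W + b for every node (row), optionally followed by ReLU."""
    out = [[v + bias for v, bias in zip(row, b)] for row in matmul(h, w)]
    return [[max(0.0, v) for v in row] for row in out] if relu else out


def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def init(rows, cols, rng):
    """Hypothetical random weights (rows x cols) and bias (cols) for one layer."""
    w = [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]
    b = [rng.uniform(-1, 1) for _ in range(cols)]
    return w, b


def shape(m):
    return (len(m), len(m[0]))


rng = random.Random(0)
nodes = ["A", "B", "C", "D", "E"]

# Hypothetical graph: only the A-C edge is named in the text.
edges = [(0, 2), (1, 2), (1, 3), (3, 4)]
adj_hat = [[1 if i == j else 0 for j in range(5)] for i in range(5)]  # self-loops (I)
for i, j in edges:
    adj_hat[i][j] = adj_hat[j][i] = 1  # each edge in both directions

h = [[rng.uniform(-1, 1) for _ in range(4)] for _ in nodes]  # 4 input features per node
shapes = [shape(h)]
for in_dim, out_dim in [(4, 3), (3, 3)]:   # GCN1(4,3), GCN2(3,3)
    w, b = init(in_dim, out_dim, rng)
    h = matmul(adj_hat, dense(h, w, b))     # ReLU on messages, then pool
    shapes.append(shape(h))

w, b = init(3, 5, rng)
h = dense(h, w, b)                          # Linear1(3,5) + ReLU, per node
shapes.append(shape(h))

w, b = init(5, 1, rng)
logits = dense(h, w, b, relu=False)         # Linear2(5,1): one logit per node
shapes.append(shape(logits))

probs = {name: sigmoid(row[0]) for name, row in zip(nodes, logits)}
print(shapes)  # [(5, 4), (5, 3), (5, 3), (5, 5), (5, 1)]
```

The node count (5) stays fixed through every layer; only the feature dimension changes, 4 → 3 → 3 → 5 → 1.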

Simplifications:

Adjacency matrices are not normalized.

ReLU is applied to the messages directly, before pooling.
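For comparison, here is the layer-wise propagation rule from Kipf & Welling's paper next to the simplified rule that the steps below appear to follow. Both are written with one row per node, so H is a (nodes × features) matrix and Ã = A + I is the adjacency matrix with self-loops. The simplified form is a reading of the walkthrough, not a formula stated in it.

```latex
% Kipf & Welling (2017): degree-normalized, activation after aggregation
\tilde{A} = A + I_N, \quad \tilde{D}_{ii} = \sum_j \tilde{A}_{ij}, \quad
H^{(l+1)} = \sigma\big(\tilde{D}^{-1/2}\tilde{A}\,\tilde{D}^{-1/2} H^{(l)} W^{(l)}\big)

% This walkthrough: no normalization, ReLU on the messages before pooling
M^{(l)} = \mathrm{ReLU}\big(H^{(l)} W^{(l)} + \mathbf{1}\, b^{(l)}\big), \quad
H^{(l+1)} = \tilde{A}\, M^{(l)}
```

Here b^(l) is a bias row vector and 𝟏 is a column of ones, so 𝟏 b^(l) adds the same bias to every node's row.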

Walkthrough

[1] Given

↳ A graph with five nodes A, B, C, D, E

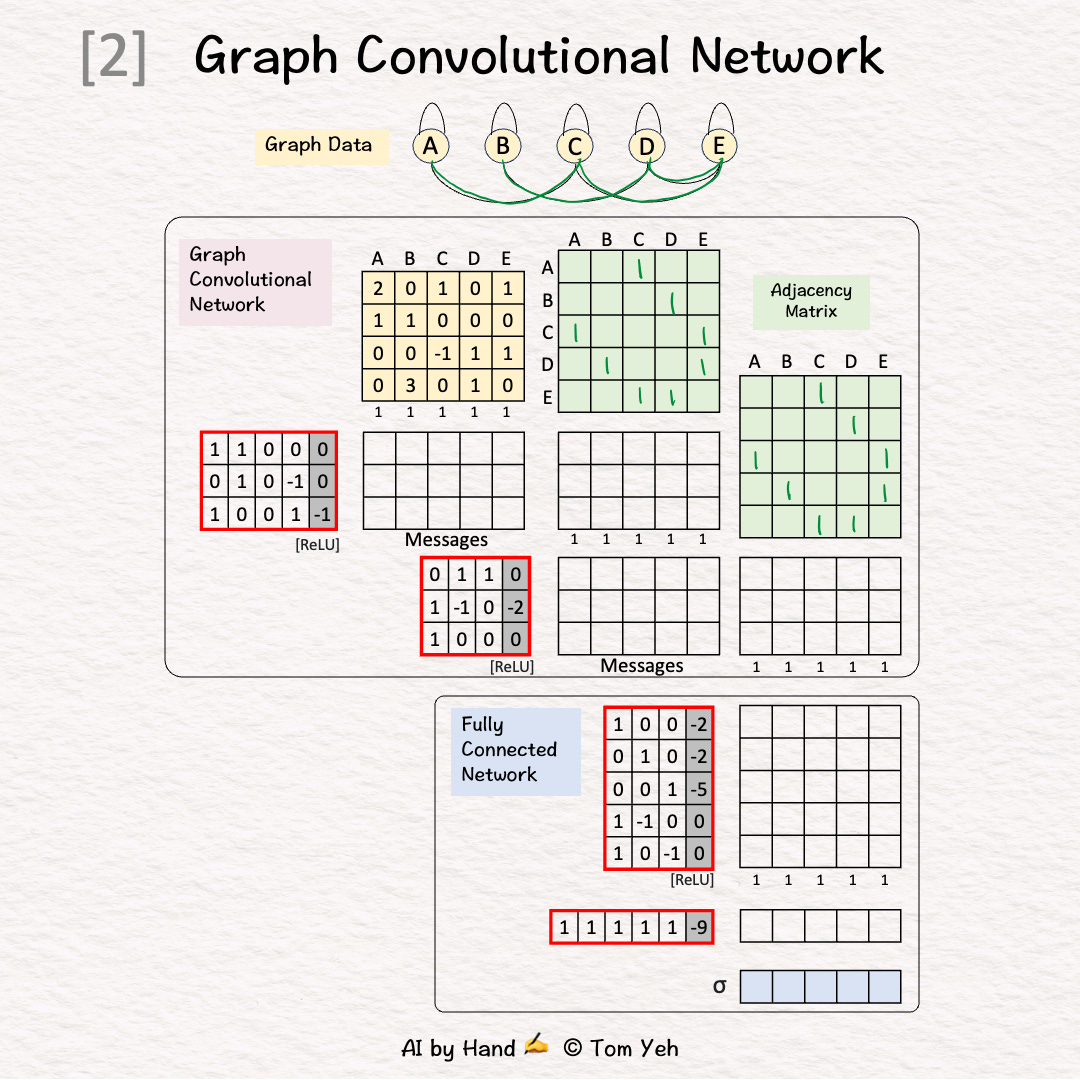

[2] 🟩 Adjacency Matrix: Neighbors

↳ Add a 1 for each edge between neighboring nodes

↳ Repeat in both directions (e.g., A->C, C->A)

↳ Repeat for both GCN layers

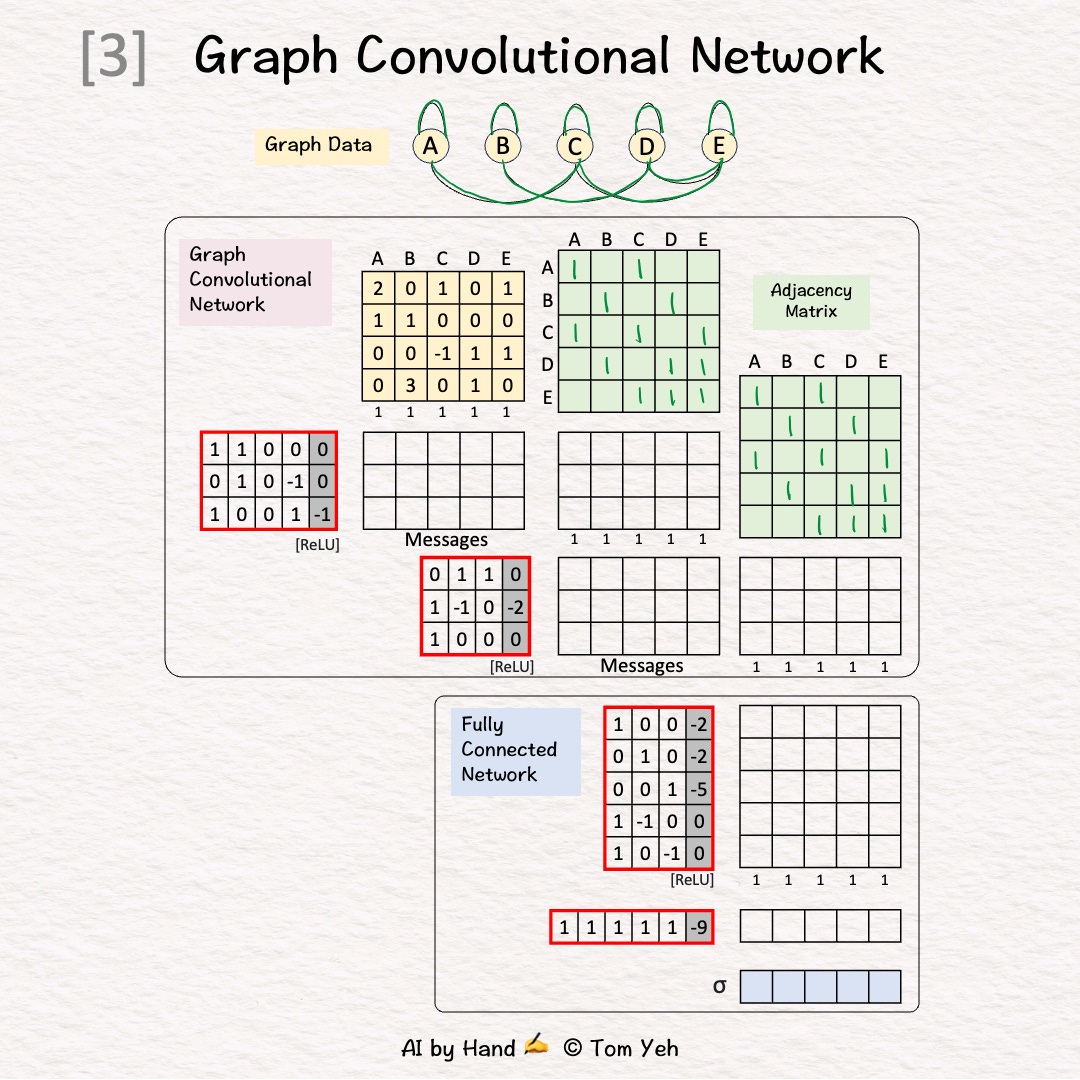
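A minimal sketch of this step, assuming the graph is given as an edge list. Only the A-C edge is mentioned in the text; the other edges are hypothetical, so the matrix will not match the figure.

```python
# Nodes in a fixed order; row/column i of the matrix belongs to NODES[i].
NODES = ["A", "B", "C", "D", "E"]

# Hypothetical edge list: only A-C is mentioned in the text, the rest is illustrative.
EDGES = [("A", "C"), ("B", "C"), ("B", "D"), ("D", "E")]


def adjacency_matrix(nodes, edges):
    """Return an n x n 0/1 matrix with a 1 for each edge, set in both directions."""
    index = {name: i for i, name in enumerate(nodes)}
    adj = [[0] * len(nodes) for _ in nodes]
    for u, v in edges:
        i, j = index[u], index[v]
        adj[i][j] = 1  # u -> v
        adj[j][i] = 1  # v -> u: the graph is undirected, so the matrix is symmetric
    return adj


adj = adjacency_matrix(NODES, EDGES)
for name, row in zip(NODES, adj):
    print(name, row)
```

Both GCN layers reuse this same matrix, which is why the step says to repeat it for each layer.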

[3] 🟩 Adjacency Matrix: Self

↳ Add a 1 on the diagonal for each self-loop

↳ Equivalent to adding the identity matrix

↳ Repeat for both GCN layers

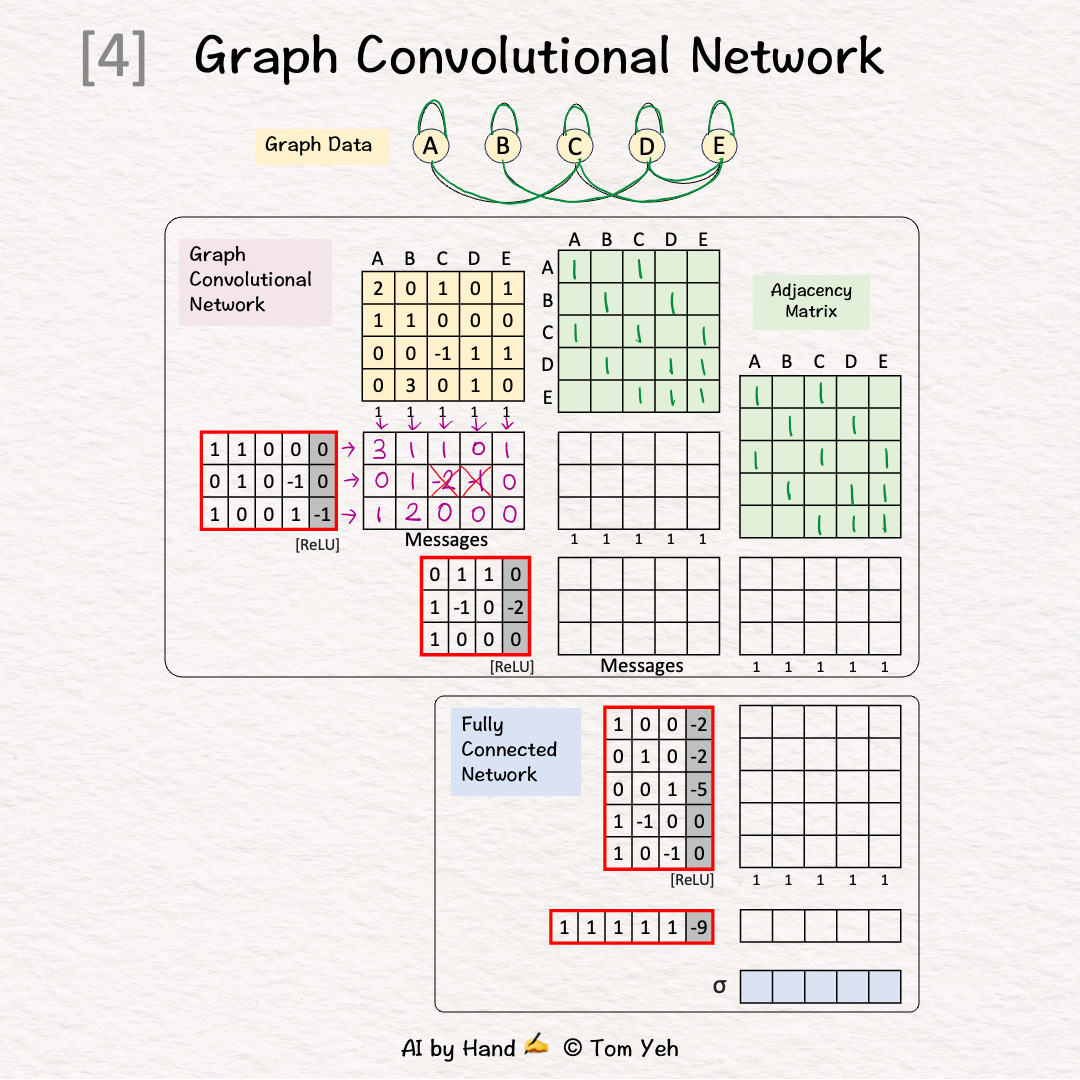
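Adding self-loops is the same as computing A + I. A short sketch, using a hypothetical 5-node adjacency matrix rather than the one in the figure:

```python
def add_self_loops(adj):
    """Return A + I: a copy of adj with 1 added to every diagonal entry."""
    n = len(adj)
    return [[adj[i][j] + (1 if i == j else 0) for j in range(n)] for i in range(n)]


# Hypothetical adjacency matrix for nodes A..E (no self-loops yet).
adj = [
    [0, 0, 1, 0, 0],
    [0, 0, 1, 1, 0],
    [1, 1, 0, 0, 0],
    [0, 1, 0, 0, 1],
    [0, 0, 0, 1, 0],
]
adj_hat = add_self_loops(adj)
print(adj_hat[0])  # [1, 0, 1, 0, 0]
```

The function returns a new matrix, so the original adjacency matrix is left unchanged.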

[4] 🟪 GCN1: Messages

↳ Multiply the node embeddings 🟨 by the weights and add the biases

↳ Apply ReLU (negatives → 0)

↳ The result is one message per node

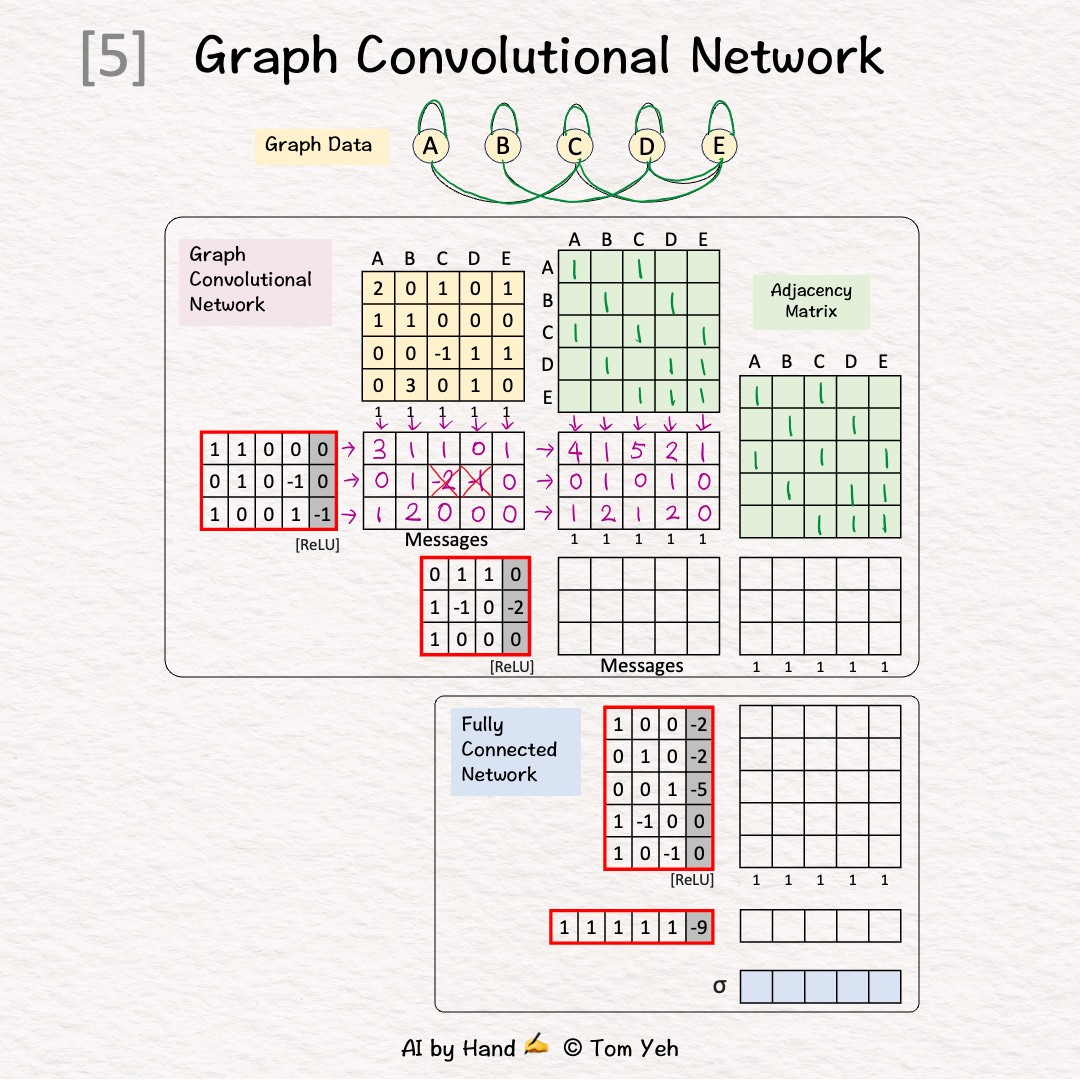
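A sketch of the message step for GCN1(4,3), with one row per node. The embeddings, weights, and bias below are hypothetical integers chosen so the result is easy to check by hand; here the bias is added after the multiplication, which gives the same result as multiplying by weights and biases together.

```python
def matmul(a, b):
    """Matrix product for matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def messages(h, w, b):
    """ReLU(H W + b): one message (row) per node; negatives become 0."""
    return [[max(0, v + bias) for v, bias in zip(row, b)] for row in matmul(h, w)]


# Hypothetical 4-dimensional embeddings for nodes A..E (one row per node).
X = [
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [1, 1, 0, 0],
    [0, 0, 1, 1],
    [1, 0, 0, 1],
]
# Hypothetical GCN1 weights (4 -> 3) and bias.
W1 = [
    [1, 0, 1],
    [0, 1, -1],
    [1, -1, 0],
    [0, 1, 1],
]
b1 = [0, -1, 0]

msgs = messages(X, W1, b1)
print(msgs)  # [[2, 0, 1], [0, 1, 0], [1, 0, 0], [1, 0, 1], [1, 0, 2]]
```

For node A, X W1 + b1 is [2, -2, 1]; ReLU turns the -2 into 0, giving the message [2, 0, 1].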

[5] 🟪 GCN1: Pooling

↳ Multiply the messages by the adjacency matrix

↳ The purpose is to pool messages from each node's neighbors as well as from the node itself.

↳ The result is a new feature per node
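With one message row per node, pooling is the matrix product (A + I) · M. If a figure lays the messages out as columns instead, the product is written the other way round; because A + I is symmetric, the numbers come out the same, just transposed. The matrices below are hypothetical.

```python
def matmul(a, b):
    """Matrix product for matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


# Hypothetical adjacency matrix with self-loops (A + I), nodes A..E.
adj_hat = [
    [1, 0, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [1, 1, 1, 0, 0],
    [0, 1, 0, 1, 1],
    [0, 0, 0, 1, 1],
]
# Hypothetical GCN1 messages, one row per node.
msgs = [[2, 0, 1], [0, 1, 0], [1, 0, 0], [1, 0, 1], [1, 0, 2]]

# Row i of the product sums the messages of node i and of its neighbors.
pooled = matmul(adj_hat, msgs)
print(pooled)  # [[3, 0, 1], [2, 1, 1], [3, 1, 1], [2, 1, 3], [2, 0, 3]]
```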

[6] 🟪 GCN1: Visualize

↳ For node 1, visualize how the messages are pooled to obtain its new feature

↳ [3,0,1] + [1,0,0] = [4,0,1]

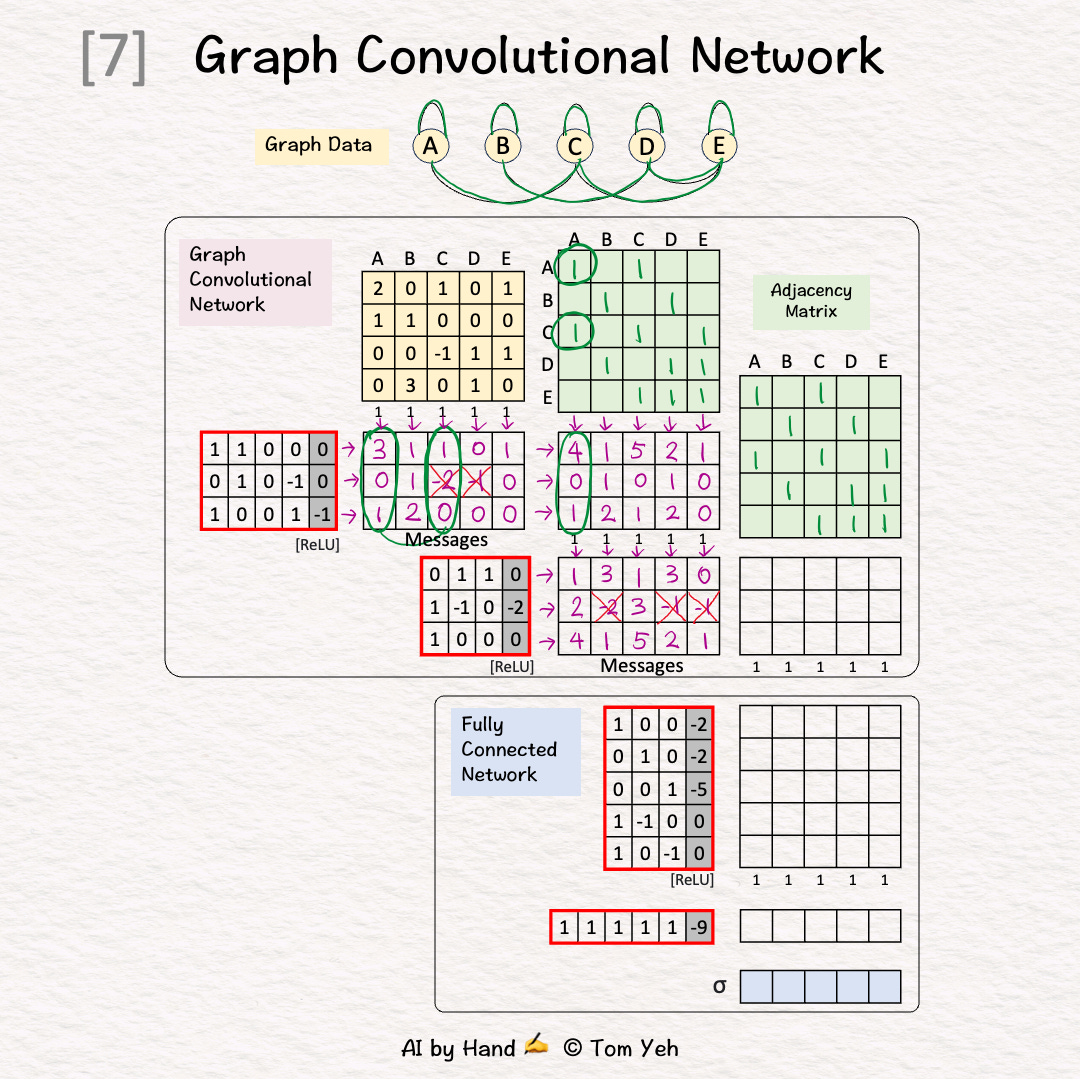
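The same pooling, viewed for a single node: collect the messages of the node and its neighbors and add them up. This sketch reuses the two vectors from the sum above, [3,0,1] + [1,0,0]; the 3-node graph around them, the third node's message, and which of the two vectors is node 1's own message are all hypothetical.

```python
def pool_node(adj_hat, msgs, i):
    """Return (contributing nodes, pooled feature) for node i, i.e. row i of adj_hat @ msgs."""
    sources = [j for j, a in enumerate(adj_hat[i]) if a != 0]
    dim = len(msgs[0])
    pooled = [sum(adj_hat[i][j] * msgs[j][k] for j in sources) for k in range(dim)]
    return sources, pooled


# Hypothetical 3-node graph. Index 0 stands in for "node 1" (Python indexes from 0)
# and is linked only to index 1; index 2 and its message are made up.
adj_hat = [
    [1, 1, 0],
    [1, 1, 1],
    [0, 1, 1],
]
msgs = [[3, 0, 1], [1, 0, 0], [5, 5, 5]]

sources, pooled = pool_node(adj_hat, msgs, 0)
print(sources, pooled)  # [0, 1] [4, 0, 1]
```

Index 2 is not a neighbor of index 0, so its message does not contribute to the pooled feature.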

[7] 🟪 GCN2: Messages

↳ Multiply the node features by the weights and add the biases

↳ Apply ReLU (negatives → 0)

↳ The result is one message per node

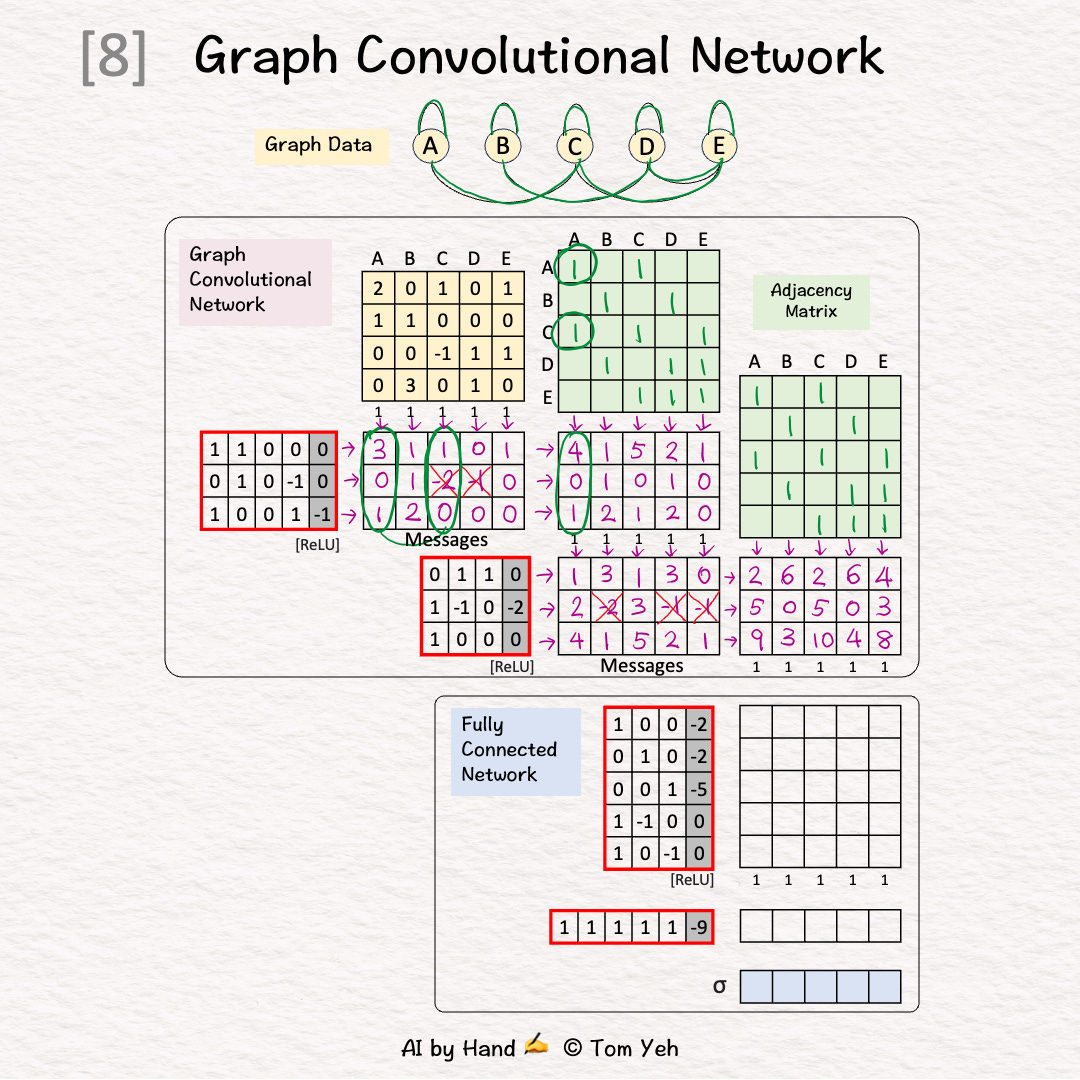

[8] 🟪 GCN2: Pooling

↳ Multiply the messages by the adjacency matrix

↳ The result is a new feature per node
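GCN2 repeats exactly the same two steps as GCN1 (messages, then pooling), only with 3 → 3 weights and GCN1's output as its input. A sketch that wraps both steps into one hypothetical `gcn_layer` helper and applies it twice; the graph, embeddings, and weights are illustrative values, not the figure's.

```python
def matmul(a, b):
    """Matrix product for matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def gcn_layer(adj_hat, h, w, b):
    """Simplified GCN layer: messages = ReLU(H W + b), then pool with adj_hat."""
    msgs = [[max(0, v + bias) for v, bias in zip(row, b)] for row in matmul(h, w)]
    return matmul(adj_hat, msgs)


# Hypothetical adjacency matrix with self-loops, nodes A..E.
adj_hat = [
    [1, 0, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [1, 1, 1, 0, 0],
    [0, 1, 0, 1, 1],
    [0, 0, 0, 1, 1],
]
# Hypothetical input embeddings and weights.
X = [[1, 0, 1, 0], [0, 1, 0, 1], [1, 1, 0, 0], [0, 0, 1, 1], [1, 0, 0, 1]]
W1 = [[1, 0, 1], [0, 1, -1], [1, -1, 0], [0, 1, 1]]
b1 = [0, -1, 0]
W2 = [[1, 0, 0], [0, 1, 0], [1, 0, -1]]
b2 = [0, 0, 2]

h1 = gcn_layer(adj_hat, X, W1, b1)   # GCN1(4,3)
h2 = gcn_layer(adj_hat, h1, W2, b2)  # GCN2(3,3)
print(h1)  # [[3, 0, 1], [2, 1, 1], [3, 1, 1], [2, 1, 3], [2, 0, 3]]
print(h2)  # [[8, 1, 2], [12, 3, 2], [11, 2, 3], [13, 2, 1], [10, 1, 0]]
```

Because GCN2 pools features that GCN1 already pooled, a node's output after two layers can depend on nodes two edges away (in this hypothetical graph, A reaches B through C).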

[9] 🟪 GCN2: Visualize

↳ For node 3, visualize how the messages are pooled to obtain its new feature

↳ [1,2,4] + [1,3,5] + [0,0,1] = [2,5,10]

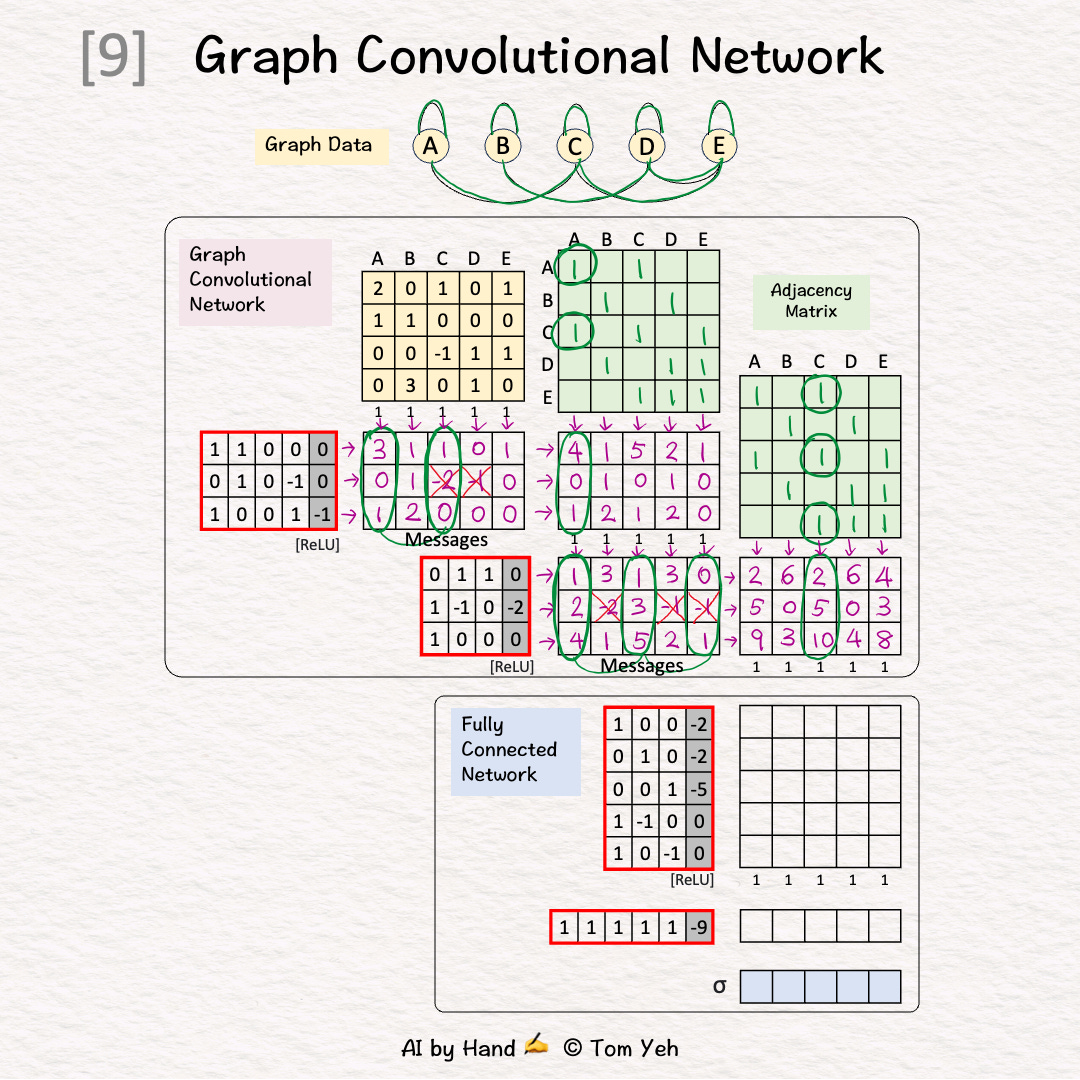

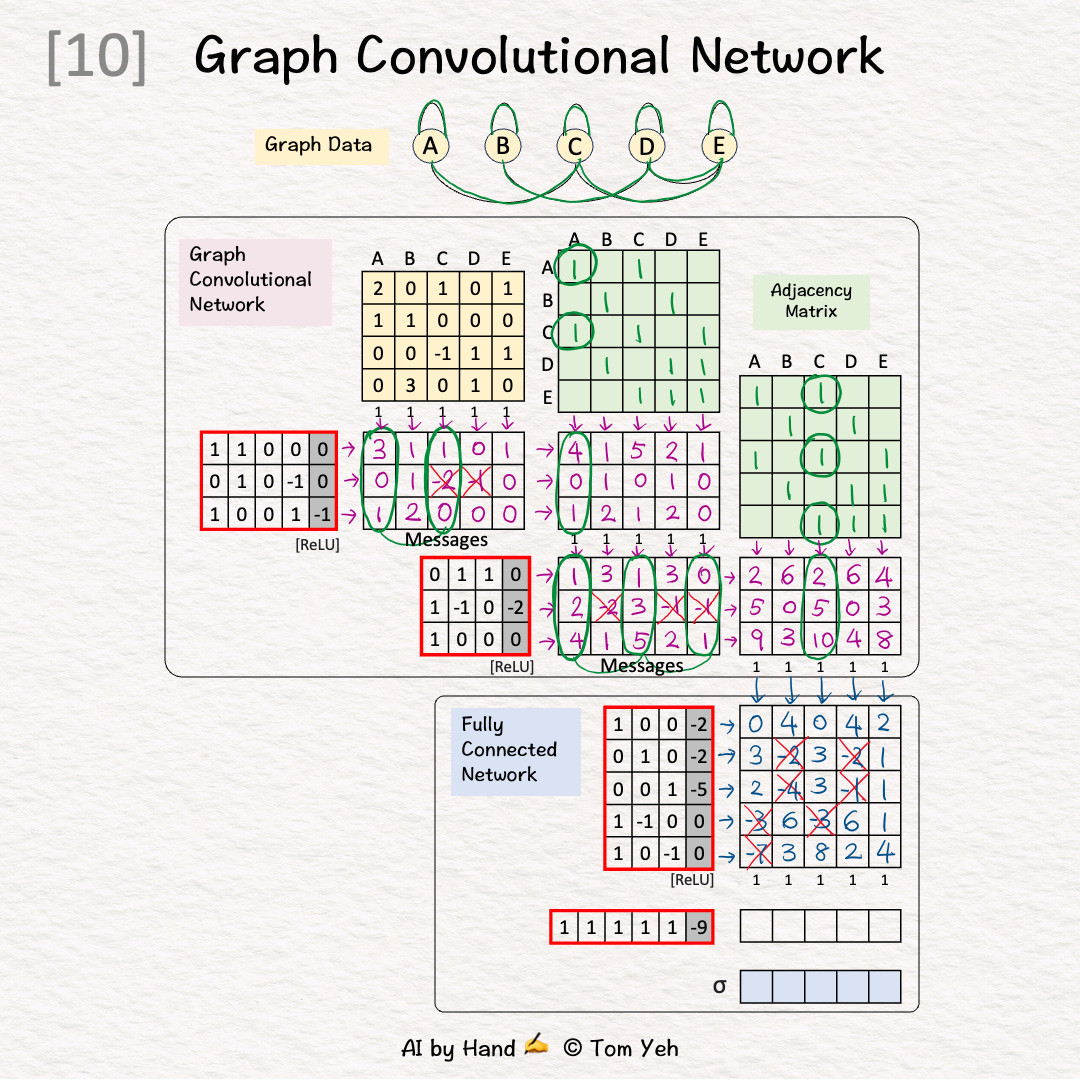

[10] 🟦 FCN: Linear 1 + ReLU

↳ Multiply the node features by the weights and add the biases

↳ Apply ReLU (negatives → 0)

↳ The result is a new feature per node

↳ Unlike in GCN layers, no messages from other nodes are included.

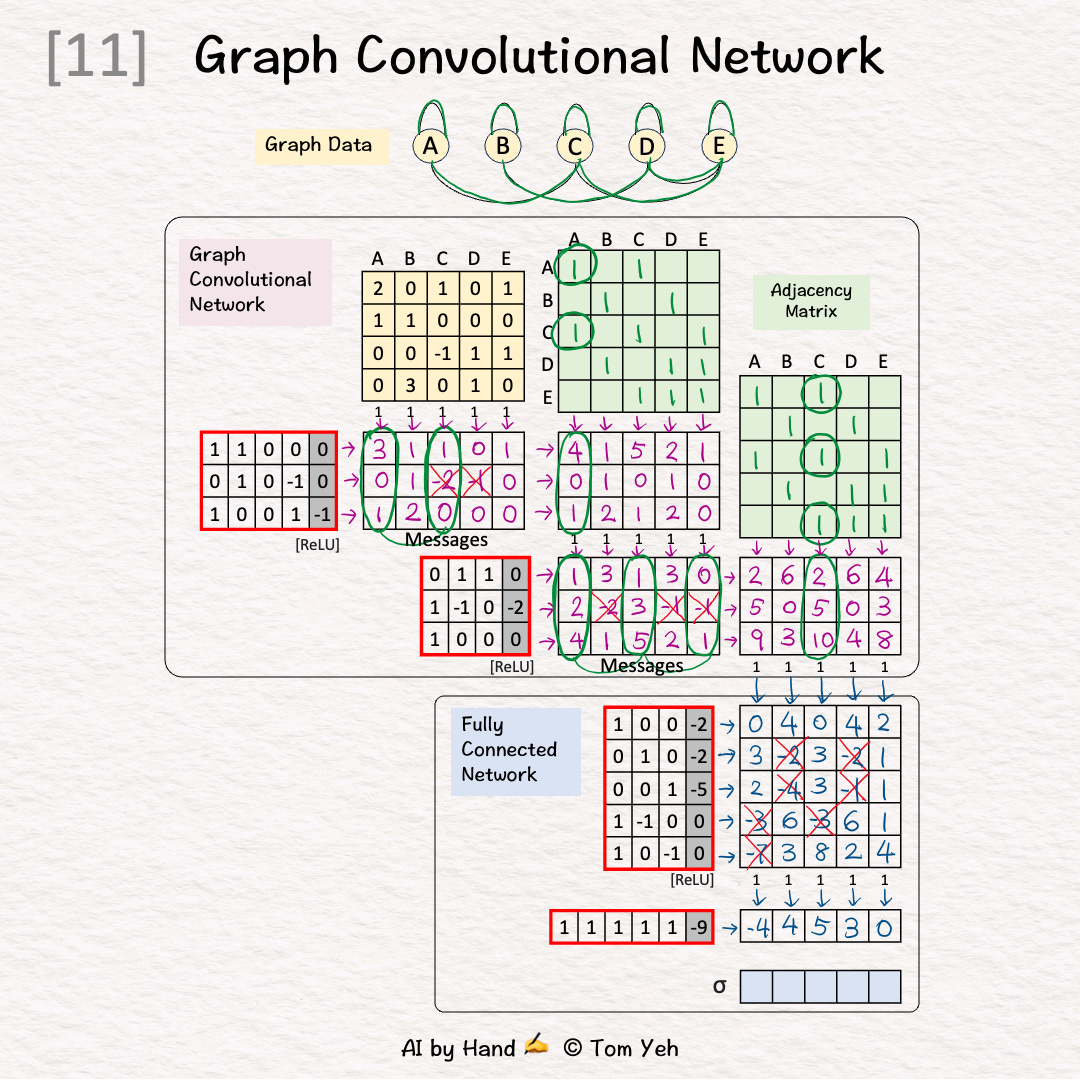
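A sketch of Linear1(3,5) + ReLU that makes the "no messages from other nodes" point checkable: the layer touches each node's row on its own, so changing one node's input changes only that node's output. The GCN2 outputs and weights are hypothetical.

```python
def linear_relu(h, w, b):
    """Apply ReLU(x W + b) to each node's row independently (no adjacency matrix)."""
    out = []
    for row in h:
        z = [sum(x * w[k][c] for k, x in enumerate(row)) + b[c] for c in range(len(b))]
        out.append([max(0, v) for v in z])
    return out


# Hypothetical GCN2 outputs (one row per node A..E).
h2 = [[8, 1, 2], [12, 3, 2], [11, 2, 3], [13, 2, 1], [10, 1, 0]]
# Hypothetical Linear1 weights (3 -> 5) and bias.
W3 = [
    [1, 0, -1, 0, 1],
    [0, 1, 0, -1, 0],
    [-1, 0, 1, 1, 0],
]
b3 = [0, 0, 0, 0, -10]

h3 = linear_relu(h2, W3, b3)
print(h3)  # [[6, 1, 0, 1, 0], [10, 3, 0, 0, 2], [8, 2, 0, 1, 1], [12, 2, 0, 0, 3], [10, 1, 0, 0, 0]]

# Changing node E's input only changes node E's output: no messages are exchanged.
h3_changed = linear_relu(h2[:4] + [[0, 0, 0]], W3, b3)
print(h3_changed[:4] == h3[:4])  # True
```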

[11] 🟦 FCN: Linear 2

↳ Multiply the node features by the weights and add the biases

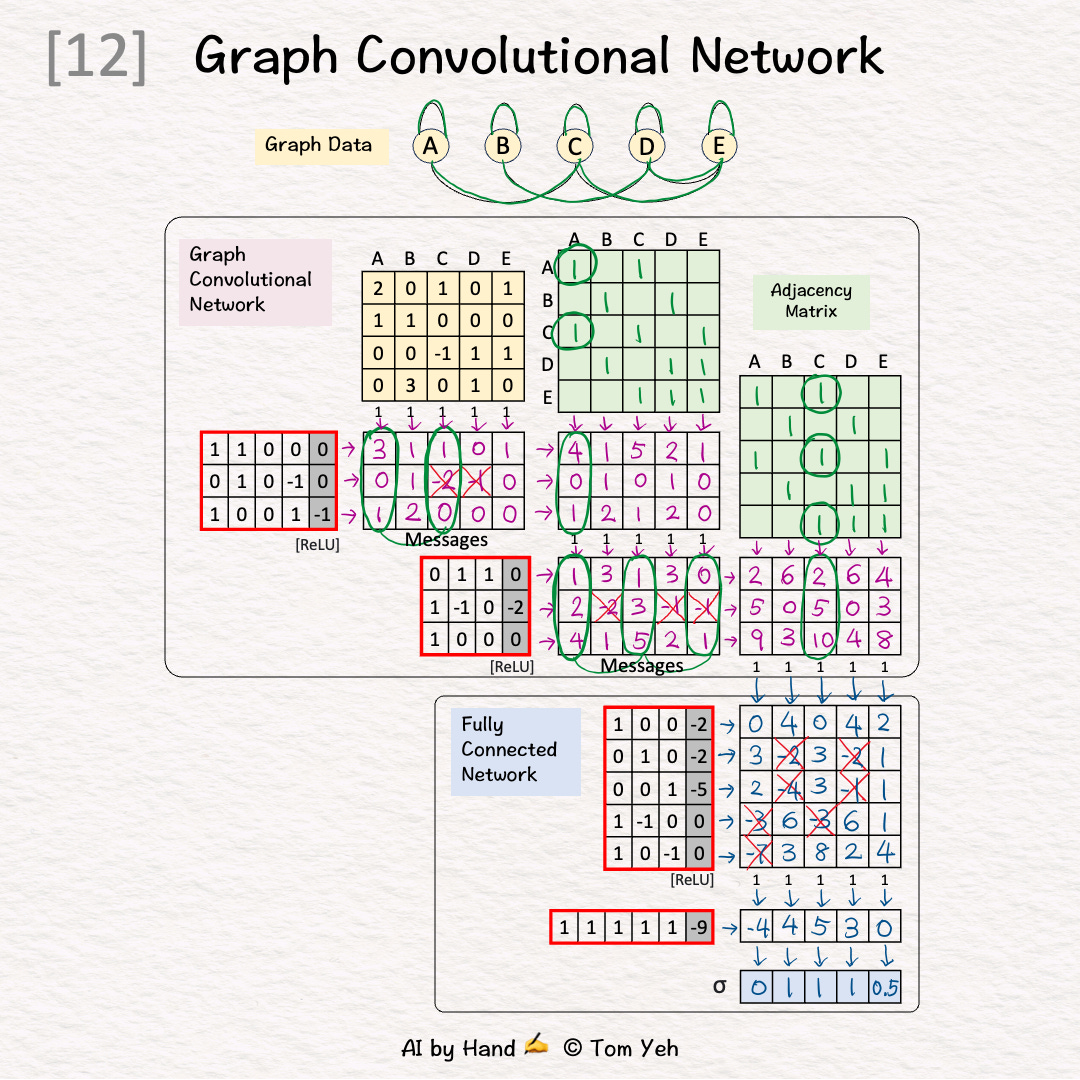
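With a 5 × 1 weight matrix, Linear2 reduces each node's 5 features to a single number (a logit). There is no ReLU here, so logits can be negative; the Sigmoid in the next step maps them to probabilities. The inputs and weights below are hypothetical.

```python
def linear(h, w, b):
    """x W + b for each node's row; a 5 x 1 weight matrix gives one logit per node."""
    return [[sum(x * w[k][c] for k, x in enumerate(row)) + b[c] for c in range(len(b))]
            for row in h]


nodes = ["A", "B", "C", "D", "E"]
# Hypothetical Linear1 outputs (5 features per node).
h3 = [[6, 1, 0, 1, 0], [10, 3, 0, 0, 2], [8, 2, 0, 1, 1], [12, 2, 0, 0, 3], [10, 1, 0, 0, 0]]
# Hypothetical Linear2 weights (5 -> 1) and bias.
W4 = [[1], [-2], [0], [0], [-1]]
b4 = [-3]

logits = {name: row[0] for name, row in zip(nodes, linear(h3, W4, b4))}
print(logits)  # {'A': 1, 'B': -1, 'C': 0, 'D': 2, 'E': 5}
```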

[12] 🟦 FCN: Sigmoid

↳ Apply the Sigmoid activation function

↳ The purpose is to obtain a probability value for each node

↳ One way to calculate Sigmoid by hand ✍️ is to use the approximation below:

• >= 3 → 1

• 0 → 0.5

• <= -3 → 0
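To see how close the hand rule is, this sketch compares it with the exact logistic function 1 / (1 + e^(-z)). The `sigmoid_by_hand` helper is a hypothetical encoding of the three cases above and returns None for inputs the rule does not cover.

```python
import math


def sigmoid(z):
    """Logistic function 1 / (1 + e^(-z)), written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sigmoid_by_hand(z):
    """The three-point rule above; returns None for inputs it does not cover."""
    if z >= 3:
        return 1.0
    if z <= -3:
        return 0.0
    if z == 0:
        return 0.5
    return None


for z in (-3, 0, 3):
    print(z, round(sigmoid(z), 4), sigmoid_by_hand(z))
# -3 0.0474 0.0
# 0 0.5 0.5
# 3 0.9526 1.0
```

The rule is exact at 0 and off by about 0.05 at ±3. Inputs in between (for example 1 or 2, where the exact values are about 0.73 and 0.88) need the exact formula.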

-- 𝗢𝘂𝘁𝗽𝘂𝘁𝘀 --

A: 0 (Very unlikely)

B: 1 (Very likely)

C: 1 (Very likely)

D: 1 (Very likely)

E: 0.5 (Neutral)

Hi, thanks for the resource. Is there a typo in the first step?

The second row of the messages seems to be [1, -2, 0, -1, 0] no matter how many times I recalculate it...