48-Hour Access: Full Recordings + Workbooks

Foundation → Frontier AI Seminar

This morning, I gave the first Foundation → Frontier seminar of 2026 for the members of the AI by Hand Academy. Thank you to the hundreds of people who showed up live and made the classroom feel full and energized!

As a thank-you to my newsletter subscribers, I’m opening up full access to the seminar recordings and the accompanying Excel workbooks for a 48-hour preview window.

Foundation: Introduction to Gen AI

I gave a big-picture introduction to Generative AI, using the way I teach best: Excel as a giant whiteboard. I walked through four major types of modern AI systems—image generation, text (prompt completion), translation, and speech—and showed how they differ from classical discriminative AI.

We started with how traditional models reduce an input to a single number, such as a class label or a score, then flipped that idea around to see how generative models expand small inputs into rich outputs like images, sentences, or audio. From there, I gradually “popped open the black boxes” to reveal what’s really inside: matrix multiplications, activations, probabilities, and sampling. No magic, just math running very fast.
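To make those four ingredients concrete, here is a minimal sketch in plain Python. The weights `W1` and `W2` are made-up toy numbers, not taken from the seminar workbooks, and a two-layer network is only an assumption chosen to keep the example small.

```python
import math
import random

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def relu(v):
    """Activation: keep positive values, zero out the rest."""
    return [max(0.0, a) for a in v]

def softmax(v):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(v)  # subtract the max for numerical stability
    exps = [math.exp(a - m) for a in v]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical toy weights (illustrative values only).
W1 = [[0.5, -0.2], [0.1, 0.8], [-0.3, 0.4]]            # 2 inputs -> 3 hidden
W2 = [[0.2, 0.7, -0.5], [0.6, -0.1, 0.3],
      [-0.4, 0.2, 0.9], [0.1, 0.1, 0.1]]               # 3 hidden -> 4 outputs

def forward(x):
    hidden = relu(matvec(W1, x))   # matrix multiplication + activation
    logits = matvec(W2, hidden)    # second matrix multiplication
    return softmax(logits)         # probabilities over 4 possible outputs

def sample(probs, rng):
    """Pick one output index at random, weighted by its probability."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

probs = forward([1.0, 2.0])
choice = sample(probs, random.Random(0))
```

A discriminative model would typically stop at `probs` and report the most likely class. A generative model takes the extra sampling step and uses the result as part of its output.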

I also traced how prompt completion works token by token, why responses can vary, and how encoder–decoder architectures power translation and speech. By the end, we even stepped through how audio tokens turn into actual waveforms.
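The token-by-token loop can be sketched in a few lines. Everything here is a toy assumption: the five-word `VOCAB`, the `LOGITS` table, and especially the fact that the next token depends only on the previous one (a real model conditions on the whole context).

```python
import math
import random

VOCAB = ["the", "cat", "sat", "down", "<end>"]

# Hypothetical next-token scores, keyed by the previous token.
LOGITS = {
    "<start>": [2.0, 0.5, 0.1, 0.1, 0.0],
    "the":     [0.0, 2.0, 0.5, 0.3, 0.1],
    "cat":     [0.1, 0.0, 2.0, 0.8, 0.5],
    "sat":     [0.3, 0.1, 0.0, 2.0, 1.0],
    "down":    [0.1, 0.1, 0.1, 0.0, 3.0],
}

def softmax(logits, temperature=1.0):
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def generate(seed, temperature=1.0, max_tokens=8):
    """Sample one token at a time until <end> or the length limit."""
    rng = random.Random(seed)
    prev, out = "<start>", []
    for _ in range(max_tokens):
        probs = softmax(LOGITS[prev], temperature)
        token = rng.choices(VOCAB, weights=probs, k=1)[0]
        if token == "<end>":
            break
        out.append(token)
        prev = token
    return out

def greedy(max_tokens=8):
    """Always take the highest-scoring token: no randomness at all."""
    prev, out = "<start>", []
    for _ in range(max_tokens):
        scores = LOGITS[prev]
        token = VOCAB[scores.index(max(scores))]
        if token == "<end>":
            break
        out.append(token)
        prev = token
    return out

print(greedy())  # ['the', 'cat', 'sat', 'down']
```

Calling `generate` with different seeds can produce different sentences from the same table, because each step draws from a probability distribution instead of always taking the top choice. That is the source of variation between responses in this sketch.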

My goal for this seminar—and for all the ones to come—is simple: help you see that modern AI isn’t mystical. With the right mental models, you can understand it, sketch it, and reason about it by hand. ✍️

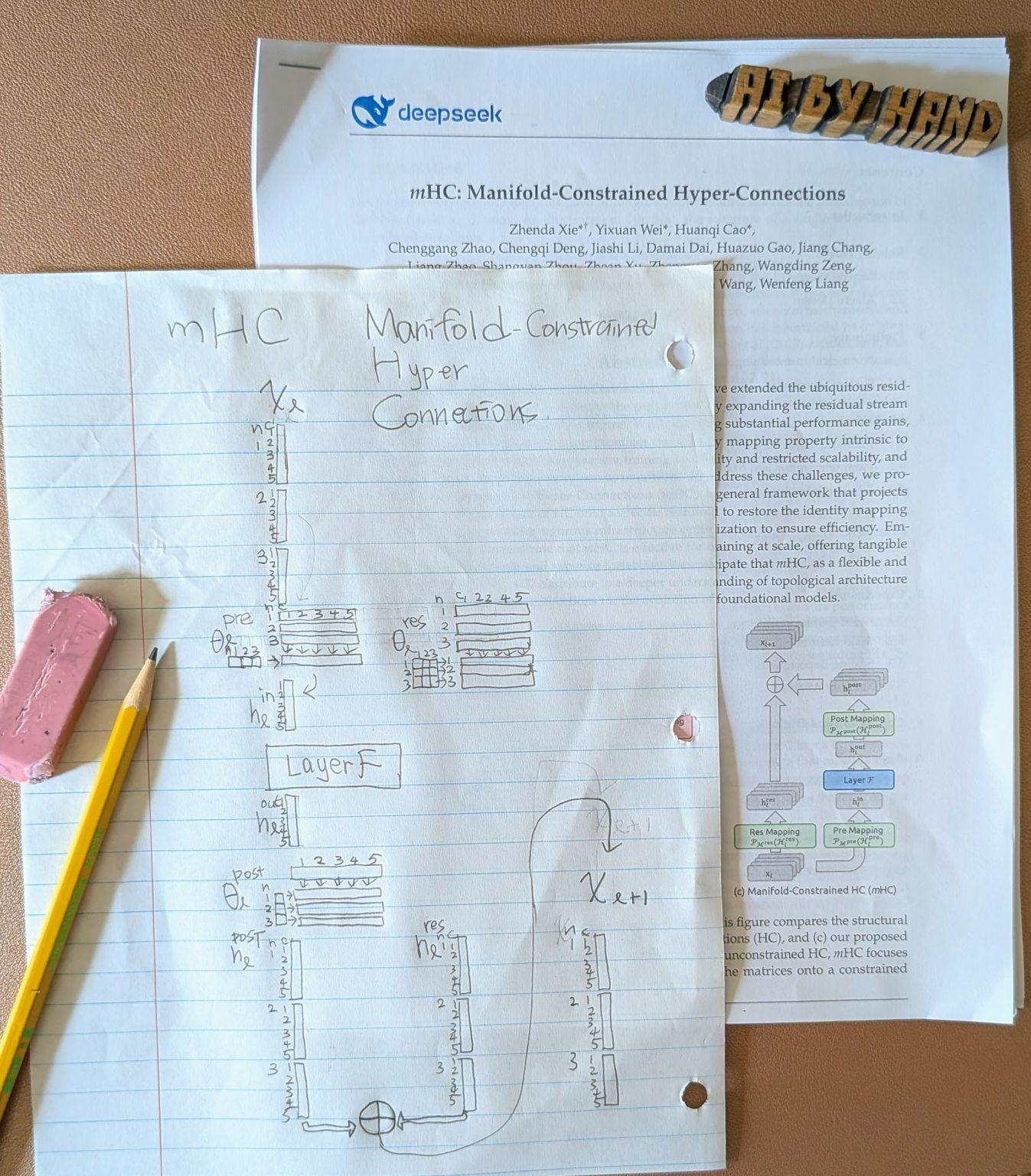

Frontier: Manifold-Constrained Hyper-Connections (mHC)

This Frontier Seminar kicked off my commitment to unpacking one frontier paper at a time, focusing on how the algorithm works, not just benchmark results.

This week’s topic was DeepSeek’s mHC (Manifold-Constrained Hyper-Connections)—a paper published last week. This is as “Frontier” as we can get.

The paper is full of intimidating jargon, but it builds on a very familiar idea: residual connections. I started by revisiting why ResNets work so well (each layer learns a small additive update instead of a full transformation) and how that insight made deep networks possible.
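A tiny numeric sketch shows why the additive form matters. The `layer` function below is a hypothetical stand-in for a learned layer that produces a small output; it is not a real network.

```python
def layer(x):
    """Hypothetical stand-in for a learned layer with a small output."""
    return [0.1 * xi for xi in x]

def plain_stack(x, depth):
    """Each layer fully replaces its input: x <- f(x)."""
    for _ in range(depth):
        x = layer(x)
    return x

def residual_stack(x, depth):
    """Each layer adds a small update to its input: x <- x + f(x)."""
    for _ in range(depth):
        x = [xi + fi for xi, fi in zip(x, layer(x))]
    return x

plain = plain_stack([1.0], 10)        # shrinks to roughly 1e-10
residual = residual_stack([1.0], 10)  # grows gently to roughly 1.1 ** 10
```

In the plain stack the signal all but vanishes after ten layers, while in the residual stack the original input is still carried through by the skip path.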

From there, we moved to hyper-connections: extending a single skip connection into multiple interacting streams. Once you do that, the real challenge becomes mapping shapes correctly—merging streams, expanding them back, and mixing them together. I showed how all of this reduces to carefully designed matrix multiplications, with both static and input-dependent (dynamic) mixing.
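Here is a simplified sketch of one static hyper-connection step, written as plain matrix multiplications. The names `merge`, `expand`, and `mix`, the toy `layer`, and the exact update rule are my own illustrative assumptions, not the paper's notation. In the dynamic variant, these weights would be computed from the input instead of being fixed.

```python
def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def layer(x):
    """Hypothetical stand-in for a learned layer."""
    return [0.1 * xi for xi in x]

def hyper_connection_step(H, merge, expand, mix):
    """H holds n streams, one per row, each with d features."""
    x = matmul([merge], H)[0]                      # merge: (1 x n) @ (n x d) -> d
    y = layer(x)                                   # run the layer once
    expanded = matmul([[b] for b in expand], [y])  # expand: (n x 1) @ (1 x d) -> n x d
    mixed = matmul(mix, H)                         # mix: (n x n) @ (n x d) -> n x d
    # The step still ends in plain addition.
    return [[m + e for m, e in zip(m_row, e_row)]
            for m_row, e_row in zip(mixed, expanded)]

# Two streams with two features each (toy values).
H = [[1.0, 2.0], [3.0, 4.0]]
new_H = hyper_connection_step(
    H,
    merge=[0.5, 0.5],               # average the streams into one layer input
    expand=[1.0, 0.0],              # write the layer output into stream 0 only
    mix=[[0.9, 0.1], [0.1, 0.9]],   # let the streams exchange a little
)
```

With a single stream and all weights set to 1, this step reduces to the ordinary residual update `x + f(x)`.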

Finally, we tackled the “manifold-constrained” part: restricting these mixing matrices so they stay stable and well-behaved, rather than arbitrary and noisy. The result is a powerful generalization of residual connections that still ends in the simplest operation of all—addition.
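The text above doesn't spell out which constraint is used, so the sketch below assumes one common choice for illustration: forcing the mixing matrix to be doubly stochastic (all entries positive, every row and every column summing to 1) through Sinkhorn-style alternating normalization. The function name and toy values are hypothetical.

```python
import math

def sinkhorn(raw, iterations=100):
    """Turn an arbitrary square matrix into an (approximately)
    doubly stochastic one by alternating row and column normalization."""
    M = [[math.exp(v) for v in row] for row in raw]  # make every entry positive
    for _ in range(iterations):
        M = [[v / sum(row) for v in row] for row in M]             # rows sum to 1
        col_sums = [sum(col) for col in zip(*M)]
        M = [[v / c for v, c in zip(row, col_sums)] for row in M]  # columns sum to 1
    return M

# Unconstrained toy parameters for a 3-stream mixing matrix.
raw = [[1.0, 0.0, 0.5],
       [0.0, 2.0, -1.0],
       [0.5, 0.5, 0.5]]

mix = sinkhorn(raw)
row_sums = [sum(row) for row in mix]
col_sums = [sum(col) for col in zip(*mix)]
```

Under this assumption, each output stream is a weighted average of the input streams, and the product of two doubly stochastic matrices is again doubly stochastic. Stacking many such mixing steps therefore cannot amplify the signal without bound, which is one way to read "stable and well-behaved."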

The big message: this paper isn’t magic. Once you break it down, it’s a clean extension of ideas many of us already know.

The full seminar recordings and Excel workbooks are open to newsletter subscribers for the next 48 hours. After the preview window, they’ll return to the AI by Hand Academy for members.

I’ll share details about next week’s Foundation → Frontier seminar soon.