Agent Hallucination

💡Foundation AI Seminar Series

Ever wonder why a huge AI model can sound like a top expert one moment—and then confidently make things up the next?

In this seminar, we stop treating AI like a mysterious black box and look directly at how it actually works. We break down, step by step, the logic and math behind AI agents—and why hallucinations happen.

Key Highlights:

Hallucination: We analyze a real-world agent log to pinpoint exactly where things go wrong. Is it a RAG failure? A tool-calling error? Or a fundamental LLM logic gap?

Mitigation: Learn to implement and calculate mitigation strategies by hand, including:

Self-Consistency Checks: Why asking the same question three times and comparing the answers can expose a hallucination.

Tool Registry Verification: Ensuring your agent doesn’t “invent” APIs that don’t exist.

LLM-as-a-Judge: Using high-reasoning models to audit agentic behavior.
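To make the first two checks above concrete, here is a minimal sketch in Python. It is not the seminar's workbook: the function names, the 0.6 agreement threshold, and the example registry (`search_docs`, `get_weather`) are all assumptions made for illustration. The self-consistency check takes answers that were already sampled from a model and measures how often they agree. The registry check rejects any tool call whose name or arguments are not declared up front.

```python
from collections import Counter

# Hypothetical registry: tool name -> set of allowed argument names.
TOOL_REGISTRY = {
    "search_docs": {"query"},
    "get_weather": {"city"},
}

def self_consistency(answers, min_agreement=0.6):
    """Majority-vote over repeated answers to the same question.

    Returns (most common answer, agreement ratio, is_consistent).
    The 0.6 threshold is an illustrative assumption.
    """
    normalized = [a.strip().lower() for a in answers]
    top, count = Counter(normalized).most_common(1)[0]
    agreement = count / len(normalized)
    return top, agreement, agreement >= min_agreement

def verify_tool_call(name, args, registry=TOOL_REGISTRY):
    """Check that a proposed tool call refers to a registered tool
    and only uses argument names that tool declares."""
    if name not in registry:
        return False, f"unknown tool: {name}"
    unexpected = set(args) - registry[name]
    if unexpected:
        return False, f"unexpected arguments: {sorted(unexpected)}"
    return True, "ok"

# Three samples of the same question: two agree, one does not.
answer, ratio, consistent = self_consistency(["Paris", "paris ", "Lyon"])

# A call to a tool the agent made up is rejected before it runs.
ok, reason = verify_tool_call("book_flight", {"city": "Paris"})
```

Exact string matching is the simplest possible agreement test. Free-form answers would likely need a looser comparison, such as semantic similarity or a judge model.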

Evolution: A visual breakdown of how we moved from basic LLMs to RAG, and finally to the multi-step Agents of today.

Deep-Dive Math: We peel back the transformer architecture to look at Softmax layers and attention mechanisms. You’ll see exactly how “Sink Tokens” can prevent models from forcing a wrong answer when no right answer exists.
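Here is a small sketch of the softmax point, under the assumption that a "sink" is simply one extra slot with a fixed score (0.0 here) added before normalization. The seminar may define it differently, and the function names and scores below are made up for illustration. Plain softmax always produces weights that sum to 1, so even three equally bad candidates each get about a third of the weight. With a sink slot, most of that weight has somewhere else to go.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_with_sink(scores, sink_score=0.0):
    """Softmax with one extra 'sink' slot prepended.

    Returns (sink weight, weights for the real candidates).
    sink_score=0.0 is an illustrative assumption.
    """
    probs = softmax([sink_score] + list(scores))
    return probs[0], probs[1:]

# Three candidates that all match poorly (illustrative scores).
weak_scores = [-5.0, -5.0, -5.0]

plain = softmax(weak_scores)                   # forced to sum to 1
sink, rest = softmax_with_sink(weak_scores)    # sink absorbs most of the weight

print("plain:", [round(p, 3) for p in plain])
print("sink :", round(sink, 3), "candidates:", [round(p, 3) for p in rest])
```

When one candidate does score well above the sink, it still dominates, so the sink only matters when nothing fits.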

Here’s a preview of the material I prepared for this seminar:

Special thanks to Ofer Mendelevitch and Vectara for hosting this seminar!

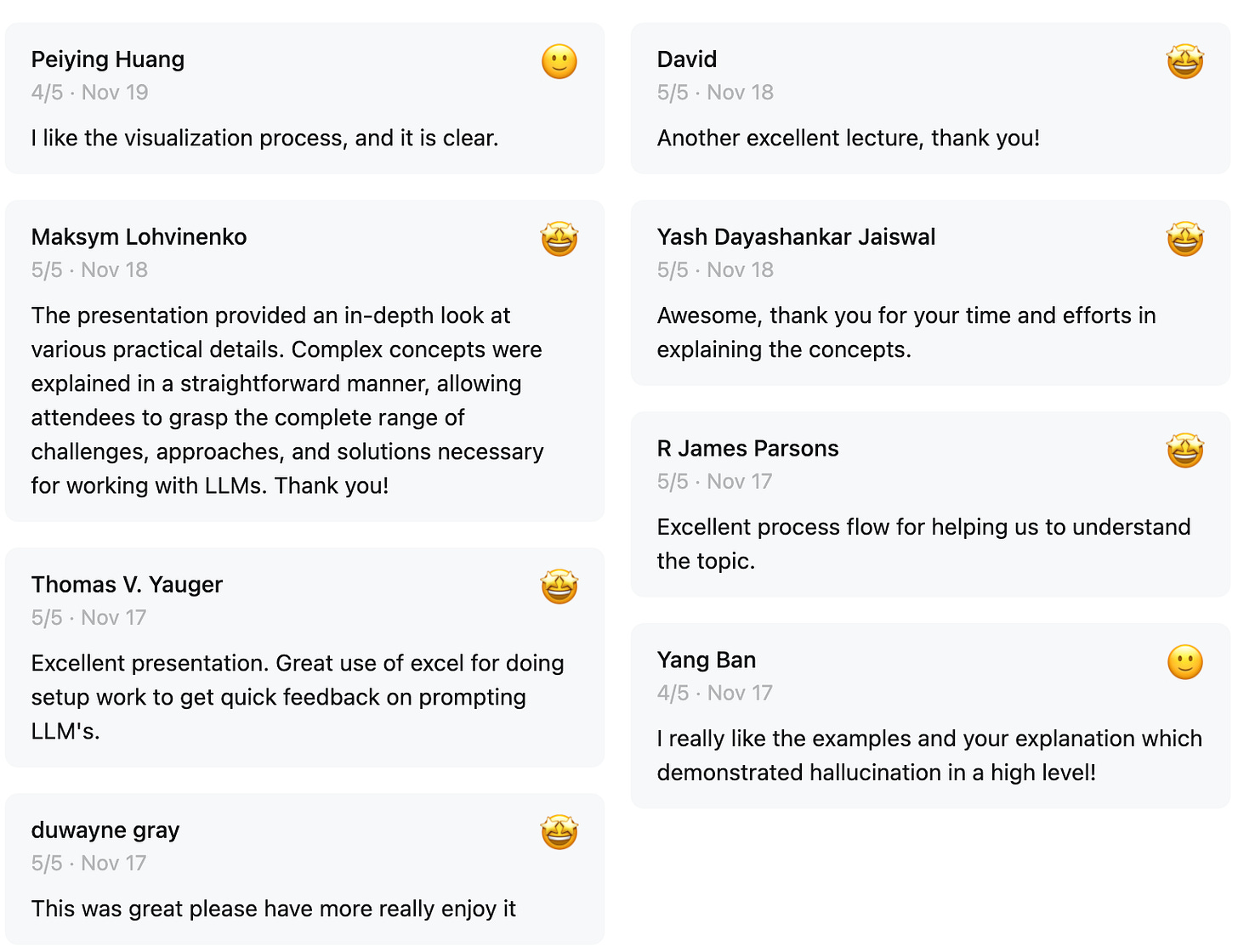

Feedback

Full Recording and Workbook

(free preview for a limited time)

The full recording and the associated Excel workbook are available to AI by Hand Academy members. You can become a member via a paid Substack subscription.

Where can I access the recorded lecture, please?

Wow, the part about building a Multi-Agent prototype in Excel by hand really stood out. I'm incredibly curious about how you plan to illustrate the dynamic and iterative nature of agent interactions within a static spreadsheet, considering their inherent complexity.