9 AI Eval Formulas You Must Know

💡 Foundation AI Seminar Series

In today’s seminar, I took on a mission: in 50 minutes, I walked through 9 core math formulas for AI evaluation—by hand—and implemented each one live in Excel.

Thank you for attending live!

Everyone agrees that AI evaluation is important. But far fewer people have actually spent time learning the math underneath it, beyond making library calls.

Most of us encounter evaluation math like this:

loss = torch.nn.CrossEntropyLoss()(logits, targets)

Others use it. It must work, right? We move on.

But what is cross-entropy really measuring? Why does it involve a log? What number is this actually producing—and why should we trust it?
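To make those questions concrete, here is a minimal sketch in plain Python of what that one-liner computes for a single example: turn the logits into probabilities with softmax, then take the negative log of the probability assigned to the correct class. The logits and target below are made-up numbers, and the helper names `softmax` and `cross_entropy` are illustrative, not taken from the seminar workbook.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, target):
    """Negative log of the probability given to the correct class."""
    probs = softmax(logits)
    return -math.log(probs[target])

# Hypothetical example: three classes, and the correct class is index 0.
logits = [2.0, 1.0, 0.1]
target = 0

probs = softmax(logits)
loss = cross_entropy(logits, target)

print("probabilities:", [round(p, 3) for p in probs])
print("loss:", round(loss, 3))
```

The log is what turns "how much probability did the model put on the right answer" into a penalty: a probability near 1 gives a loss near 0, and the loss grows without bound as that probability approaches 0. With its default settings, `torch.nn.CrossEntropyLoss` averages this per-example value over the batch.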

Too often, the math behind AI evaluation is explained abstractly, buried in symbols and notation that feel disconnected from intuition.

Normally, I teach these evaluation metrics separately across different courses, lectures, and seminars. Today was the first time I tried to put them all together, side by side, in one simple, coherent session. I wasn’t sure it would work—but I’m glad I tried.

I was glad to be joined by Ofer Mendelevitch from Vectara. Ofer and I have collaborated before on a seminar about agent hallucination, and I was excited to continue that conversation today. Once again, Ofer was generous in sharing his industry insight, fielding questions in the chat and connecting the ideas to real production systems.

Below is a list of the 9 formulas I covered in this seminar. If they feel overwhelming at first glance, that’s exactly why I designed this seminar.

Outline

Perplexity (PPL)

Negative Log-Likelihood

Cross Entropy Loss

KL Divergence

Accuracy

Precision / Recall / F1

ROUGE

BLEU

BERTScore

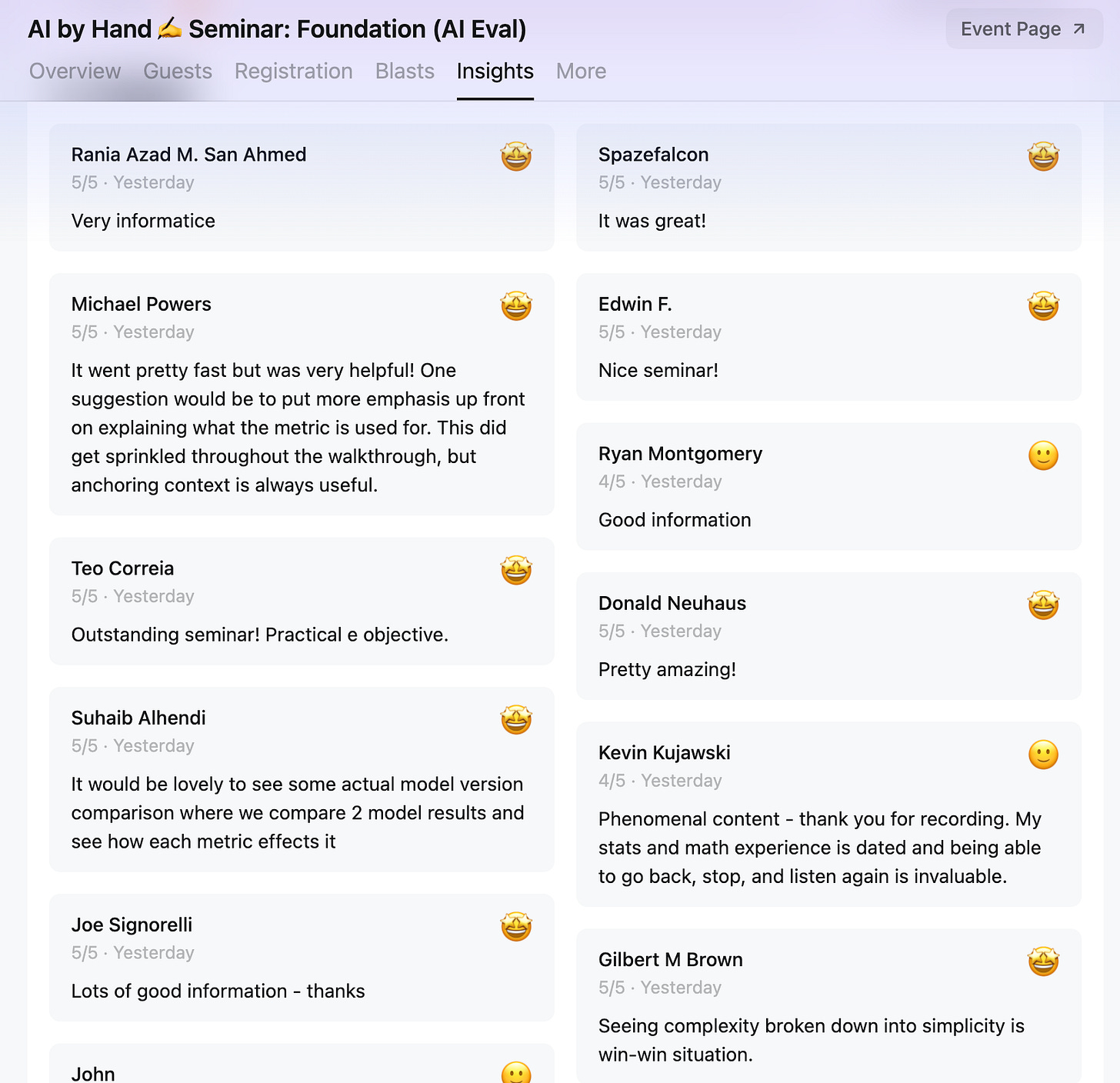

Feedback

Recording

The full recording and the associated Excel workbook are available to AI by Hand Academy members. You can become a member via a paid Substack subscription.