Batch Normalization

Essential AI Math Excel Blueprints

\(\begin{aligned}

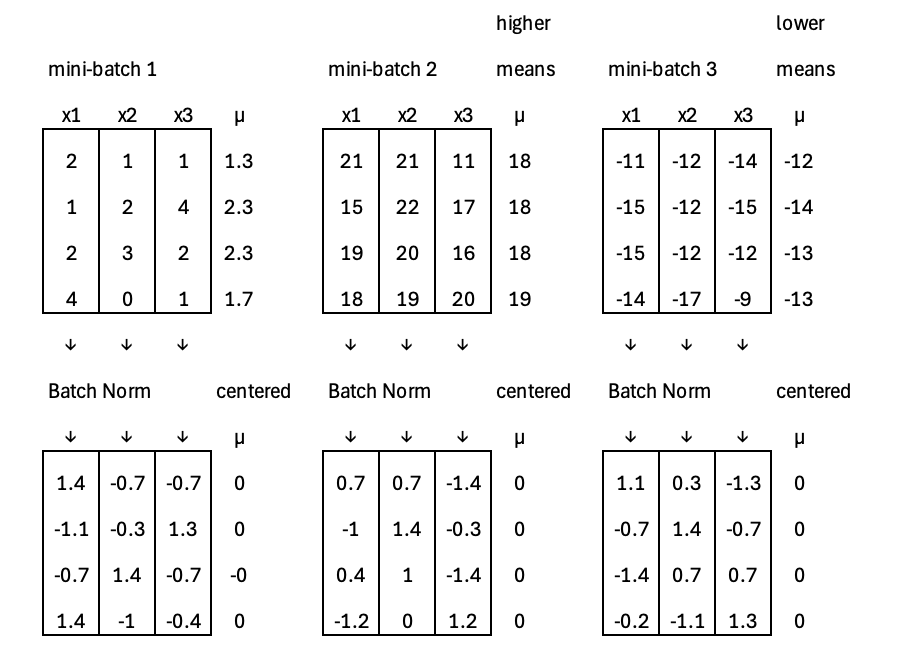

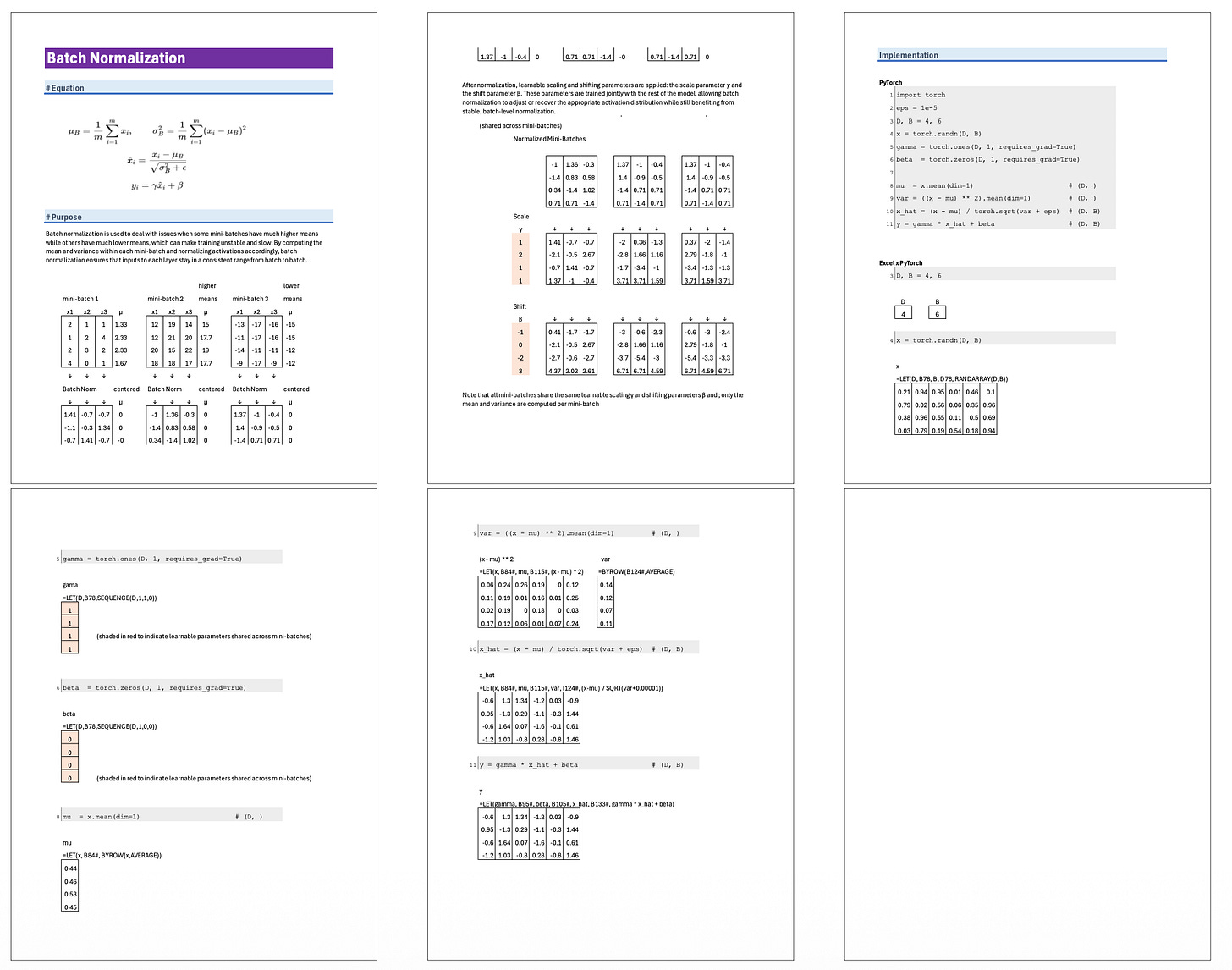

\mu_B = \frac{1}{m} \sum_{i=1}^{m} x_i \\

\sigma_B^2 = \frac{1}{m} \sum_{i=1}^{m} (x_i - \mu_B)^2\\

\hat{x}_i = \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}}

\\

y_i = \gamma \hat{x}_i + \beta

\end{aligned}

\)
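The four equations map almost line for line onto array operations. What follows is a minimal NumPy sketch, not the Excel Blueprint itself. It assumes a mini-batch `x` of shape `(m, d)` with statistics computed per feature (column) over the `m` examples, and `eps=1e-5` as an illustrative default. The function name `batch_norm_forward` and the sample numbers are hypothetical.

```python
import numpy as np

def batch_norm_forward(x, gamma, beta, eps=1e-5):
    """Hypothetical helper: apply the batch-norm equations to x of shape (m, d)."""
    mu_b = x.mean(axis=0)                      # mu_B = (1/m) * sum_i x_i, per feature
    var_b = ((x - mu_b) ** 2).mean(axis=0)     # sigma_B^2, dividing by m as in the formula
    x_hat = (x - mu_b) / np.sqrt(var_b + eps)  # normalize; eps avoids division by zero
    y = gamma * x_hat + beta                   # scale by gamma, shift by beta
    return y, x_hat, mu_b, var_b

# Illustrative mini-batch: m = 4 examples, d = 2 features on very different scales.
x = np.array([[1.0, 10.0],
              [2.0, 20.0],
              [3.0, 30.0],
              [4.0, 40.0]])
gamma = np.array([1.0, 2.0])
beta = np.array([0.0, 0.5])

y, x_hat, mu_b, var_b = batch_norm_forward(x, gamma, beta)
print("mu_B:", mu_b)
print("sigma_B^2:", var_b)
print("x_hat:\n", x_hat)
print("y:\n", y)
```

Working the first column by hand gives \(\mu_B = 2.5\) and \(\sigma_B^2 = (2.25 + 0.25 + 0.25 + 2.25)/4 = 1.25\); the second column gives 25 and 125. After normalization both columns of `x_hat` have mean 0 and variance close to 1, and `y` then has mean `beta` and standard deviation close to `gamma` in each column.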

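Batch normalization addresses a common cause of unstable, slow training: the activations feeding a layer can shift from one mini-batch to the next, so that some mini-batches have much higher means and others much lower means. For each mini-batch of \(m\) activations, batch normalization computes the mean \(\mu_B\) and variance \(\sigma_B^2\), normalizes each activation to \(\hat{x}_i\) (the small constant \(\epsilon\) guards against division by zero), and then applies a learnable scale \(\gamma\) and shift \(\beta\) to produce \(y_i\). As a result, the inputs to each layer stay in a consistent range from batch to batch.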
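To see the "consistent range" claim concretely, here is a small NumPy sketch (an illustration under assumed inputs, not the author's method). It draws two hypothetical mini-batches whose means differ by about 150 and whose spreads also differ, then applies only the normalization step \(\hat{x}_i\). The helper name `normalize`, the batch sizes, and `eps=1e-5` are assumptions made for the example.

```python
import numpy as np

def normalize(batch, eps=1e-5):
    # Hypothetical helper: x_hat = (x - mu_B) / sqrt(sigma_B^2 + eps),
    # computed per feature over the batch axis.
    mu = batch.mean(axis=0)
    var = batch.var(axis=0)  # np.var defaults to ddof=0, i.e. divide by m
    return (batch - mu) / np.sqrt(var + eps)

rng = np.random.default_rng(0)
# Synthetic activations: two mini-batches of 64 examples x 3 features.
batch_high = rng.normal(loc=100.0, scale=5.0, size=(64, 3))
batch_low = rng.normal(loc=-50.0, scale=0.5, size=(64, 3))

for name, batch in [("high", batch_high), ("low", batch_low)]:
    x_hat = normalize(batch)
    print(name,
          "raw mean:", batch.mean(axis=0).round(2),
          "normalized mean:", x_hat.mean(axis=0).round(6),
          "normalized var:", x_hat.var(axis=0).round(4))
```

Although the raw means sit near 100 and near -50, both normalized batches come out with per-feature mean about 0 and variance about 1, so the next layer sees inputs on the same scale; \(\gamma\) and \(\beta\) can then move that range wherever training finds useful.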

Excel Blueprint

This Excel Blueprint is available to AI by Hand Academy members. You can become a member via a paid Substack subscription.