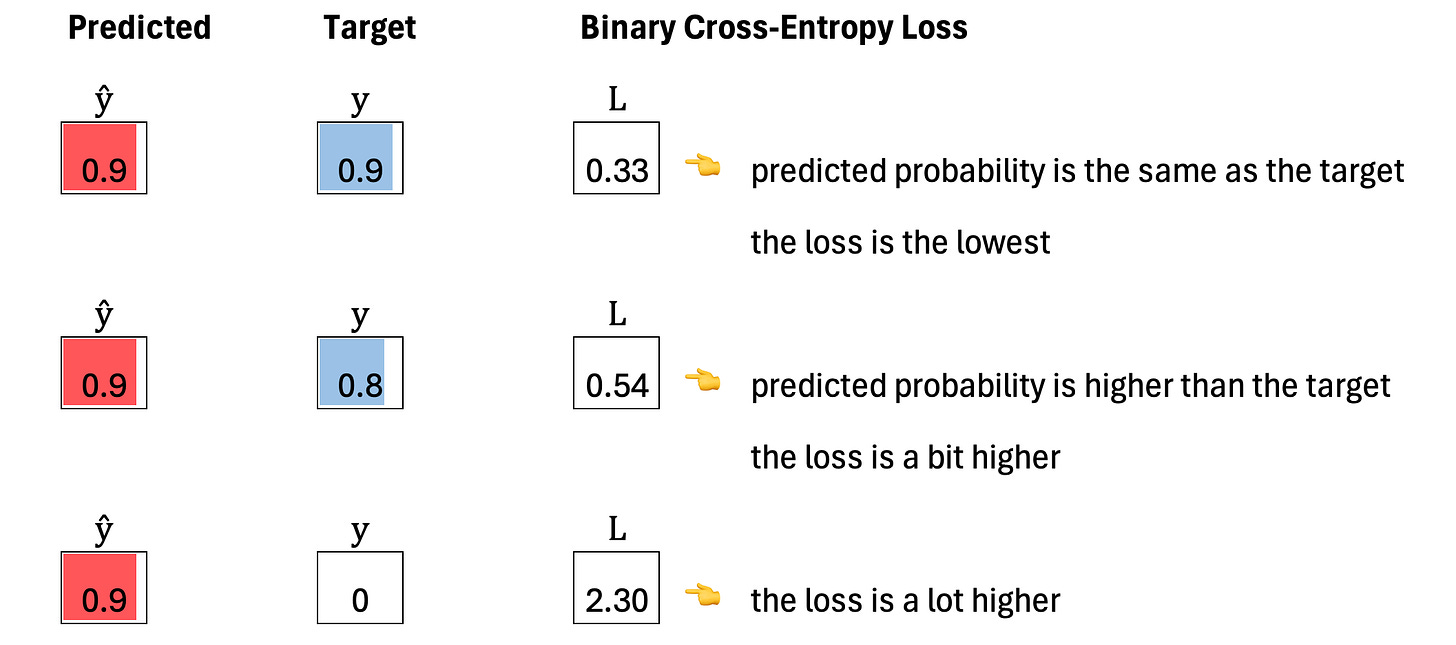

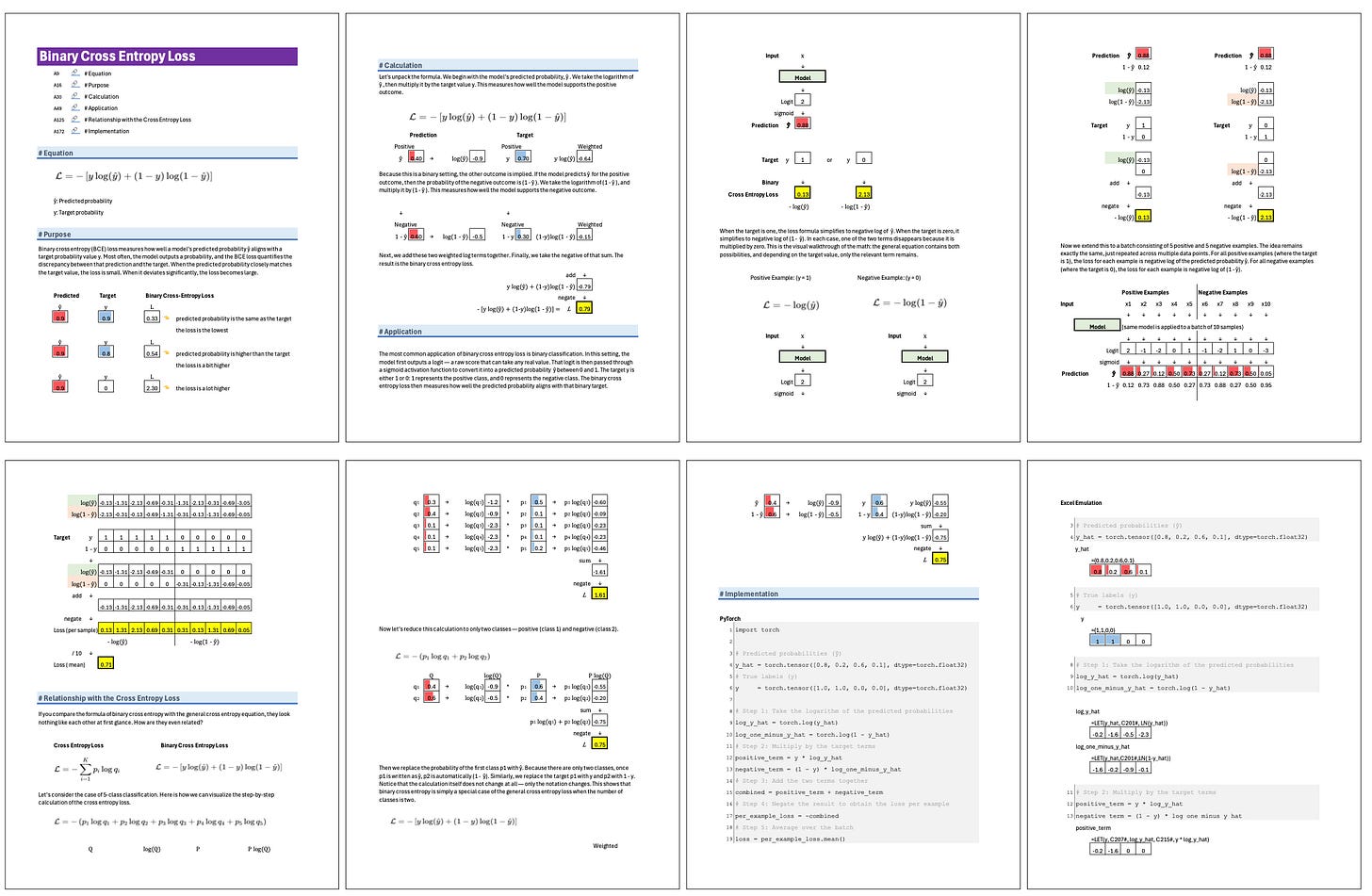

Binary Cross Entropy Loss

Essential AI Math Excel Blueprints

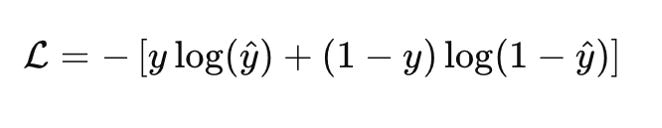

Binary cross entropy (BCE) loss measures how well a model’s predicted probability ŷ aligns with a target value y. The model outputs a probability, and the BCE loss quantifies the discrepancy between that prediction and the target. When the predicted probability closely matches the target value, the loss is small. When it deviates significantly, the loss becomes large.

Calculation

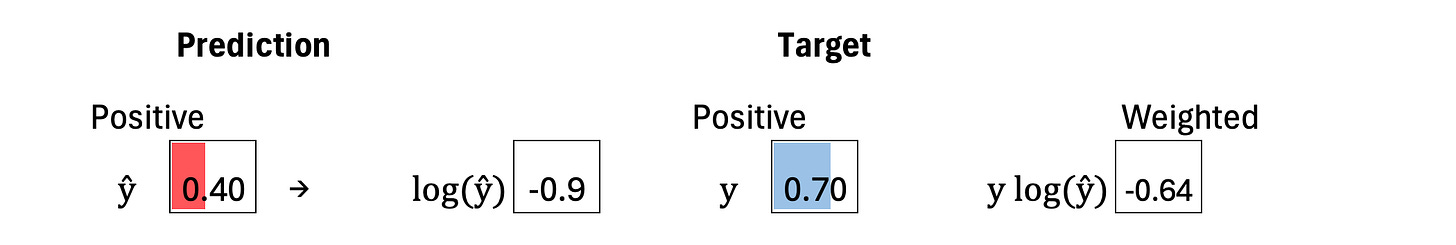

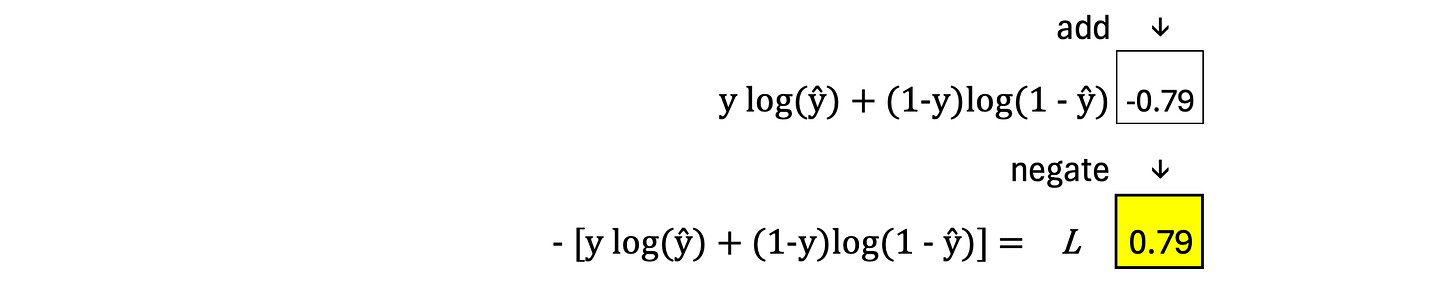

Let’s unpack the formula. We begin with the model’s predicted probability, ŷ. We take the logarithm of ŷ, then multiply it by the target value y. This measures how well the model supports the positive outcome.

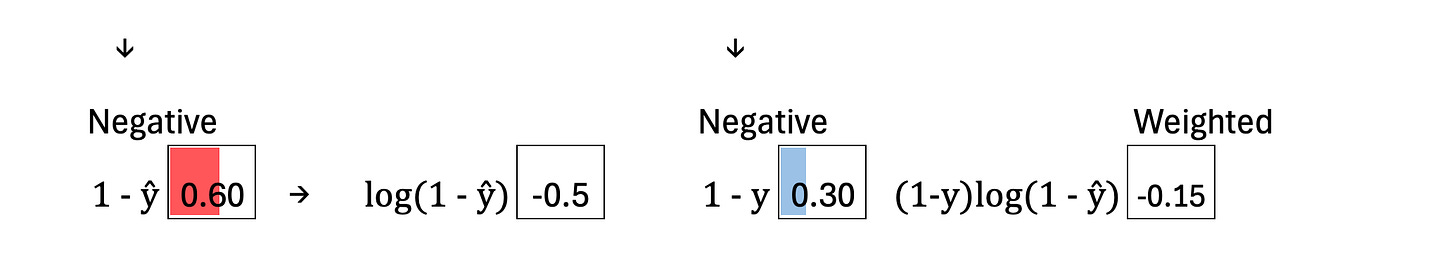

Because this is a binary setting, the other outcome is implied. If the model predicts ŷ for the positive outcome, then the probability of the negative outcome is (1 - ŷ). Likewise, the target for the negative outcome is (1 - y). We take the logarithm of (1 - ŷ), and multiply it by (1 - y). This measures how well the model supports the negative outcome.

Next, we add these two weighted log terms together. Finally, we take the negative of that sum. The result is the binary cross entropy loss.
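The steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function name `binary_cross_entropy`, the use of the natural logarithm, and the small `eps` clamp that keeps the logarithm away from zero are all assumptions added here, not details taken from the blueprint.

```python
import math

def binary_cross_entropy(y, y_hat, eps=1e-12):
    """BCE for one prediction: -( y*log(y_hat) + (1-y)*log(1-y_hat) )."""
    # Assumption: clamp y_hat into (0, 1) so log() never receives 0.
    y_hat = min(max(y_hat, eps), 1.0 - eps)
    # Support for the positive outcome, weighted by the target y.
    positive_term = y * math.log(y_hat)
    # Support for the negative outcome, weighted by (1 - y).
    negative_term = (1.0 - y) * math.log(1.0 - y_hat)
    # Add the two weighted log terms, then negate the sum.
    return -(positive_term + negative_term)

# Target is 1: a prediction near the target vs. one far from it.
close = binary_cross_entropy(1.0, 0.9)
far = binary_cross_entropy(1.0, 0.1)
print(f"close: {close:.4f}, far: {far:.4f}")
```

With a target of 1, the prediction 0.9 gives a loss of about 0.105, while 0.1 gives about 2.303, which matches the intuition that the loss grows as the prediction moves away from the target.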

Excel Blueprint

This Excel Blueprint is available to AI by Hand Academy members. You can become a member via a paid Substack subscription.