Building Multi-Agents from Paper 📝 by Hand ✍️

From the AI by Hand Research Lab 🔬

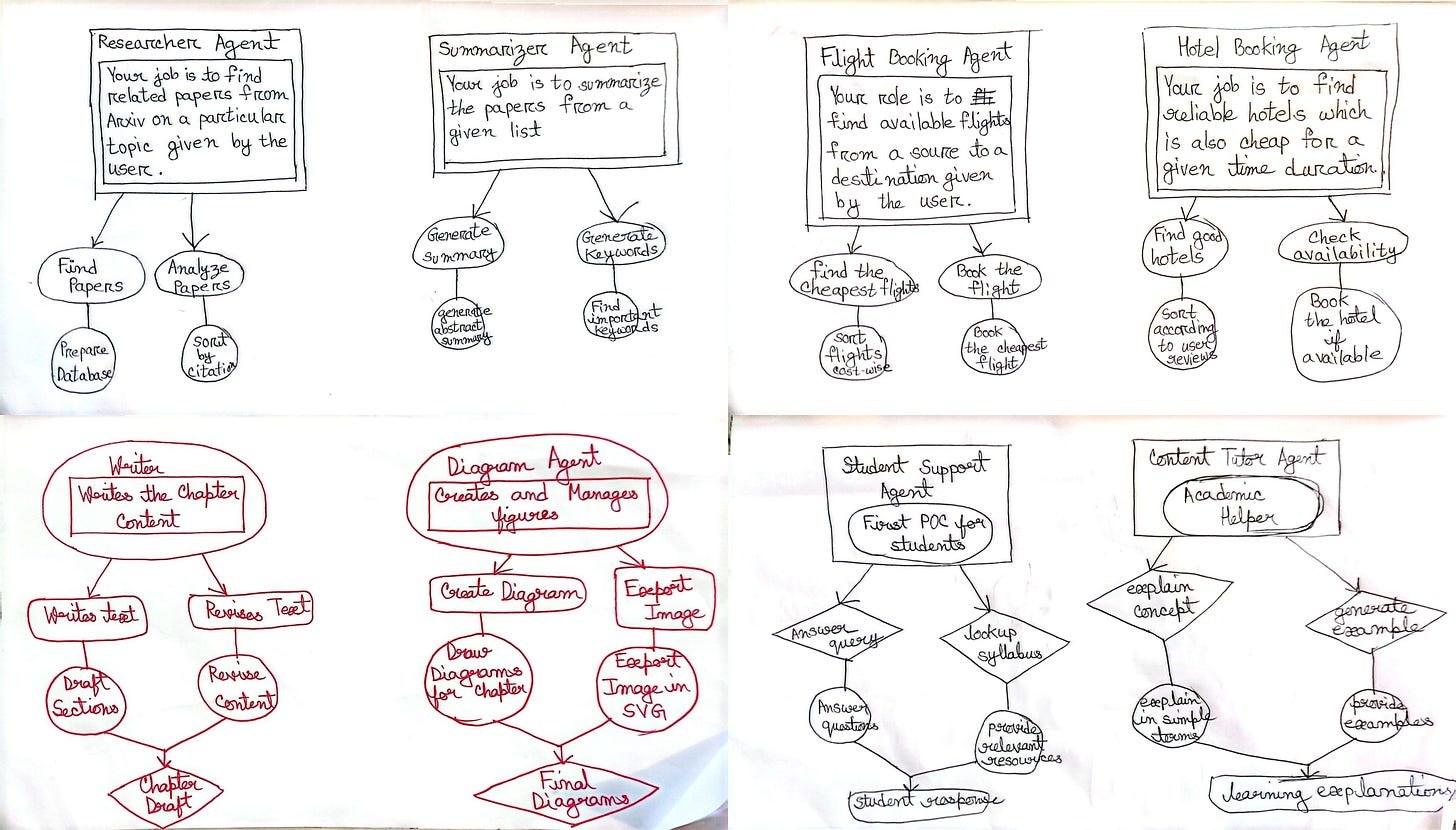

Last October, Mohsena, my PhD student, led a highly successful mini-course series on advanced AI agents, attended by thousands of participants. For each lecture, she created a rich set of hand-drawn diagrams illustrating agentic AI design.

While studying the DeepSeek OCR model to build an Excel Blueprint, I was struck by how powerful modern multimodal OCR models have become. That sparked an idea: what if we could take Mohsena’s drawings directly as input? Could we design a system that transforms her beautiful sketches into a functional multi-agent AI system?

I asked Mohsena to explore this idea and build a prototype. Here are her initial results:

Building a Multi-agent System from Paper 📝 by Hand ✍️

(written by Mohsena)

I was honored and deeply grateful to see so many people engage with my mini-course series on advanced AI agents last October. The enthusiasm and thoughtful feedback around the lectures, and especially around the hand-drawn diagrams, meant a great deal to me.

When Tom shared an idea inspired by this work and invited me to explore it further as a research project, I was excited to take it on and build a prototype. Here are my initial results:

Do We Think Better When We Draw?

Do we learn faster by reading text? By writing code? Or by illustrating our ideas?

When I was a kid, my mother used matchsticks to help me understand addition. She would place them on the table, group them, move them around—and suddenly numbers made sense.

In high school, when math problems became more complex, I found myself drawing figures in the margins of my notebook—not because the teacher asked me to, but because I needed to see the logic.

Later, I still remember my undergrad Algorithms instructor standing in front of the whiteboard, drawing boxes and arrows to explain stacks and queues. He didn’t start with definitions or code. He started with drawings. Only then did the ideas click.

We don’t just learn by consuming information. We learn by externalizing our thinking.

Drawing as a Way of Thinking

Drawing slows us down. It forces us to decide what matters. It makes relationships visible. As developers, we already do this instinctively. Before writing code, we sketch ideas on paper. We brainstorm on whiteboards. We draw systems before we build them. Yet somewhere along the way, drawings stopped being part of the actual system. They became explanations—useful, but disposable.

I started wondering: what if drawings weren’t just for communication? What if they were the system itself?

A Simple Scenario

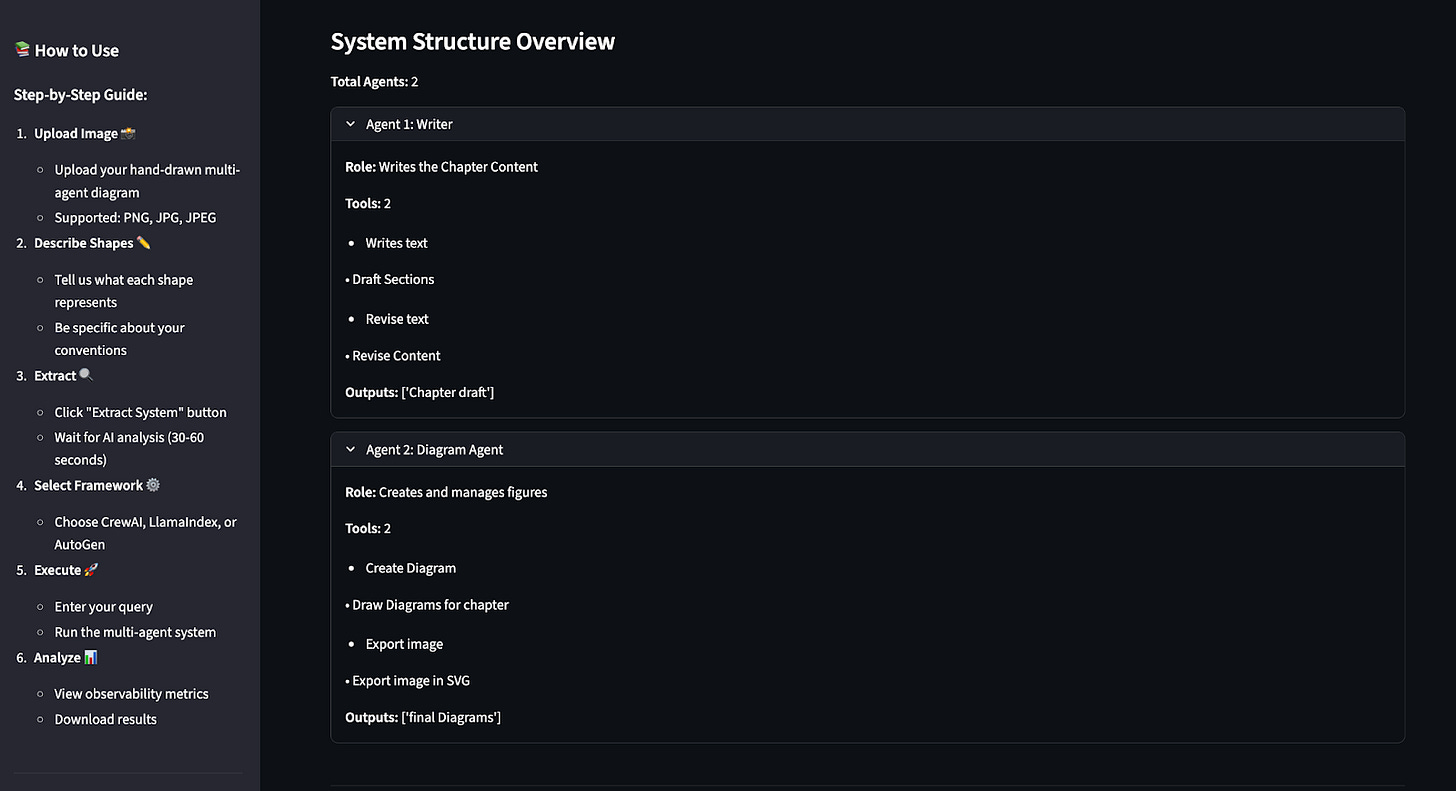

Imagine you are the director of a book publishing company. You want to build a chapter-writing team:

One team writes and revises chapters.

Another team creates diagrams for those chapters.

You sit down with your colleagues and start drawing. You sketch two agents. You draw shapes to show roles, tools, tasks, and outputs.

Everyone in the room understands the system immediately. That drawing is your agentic system.

From Drawing → Structure → Execution

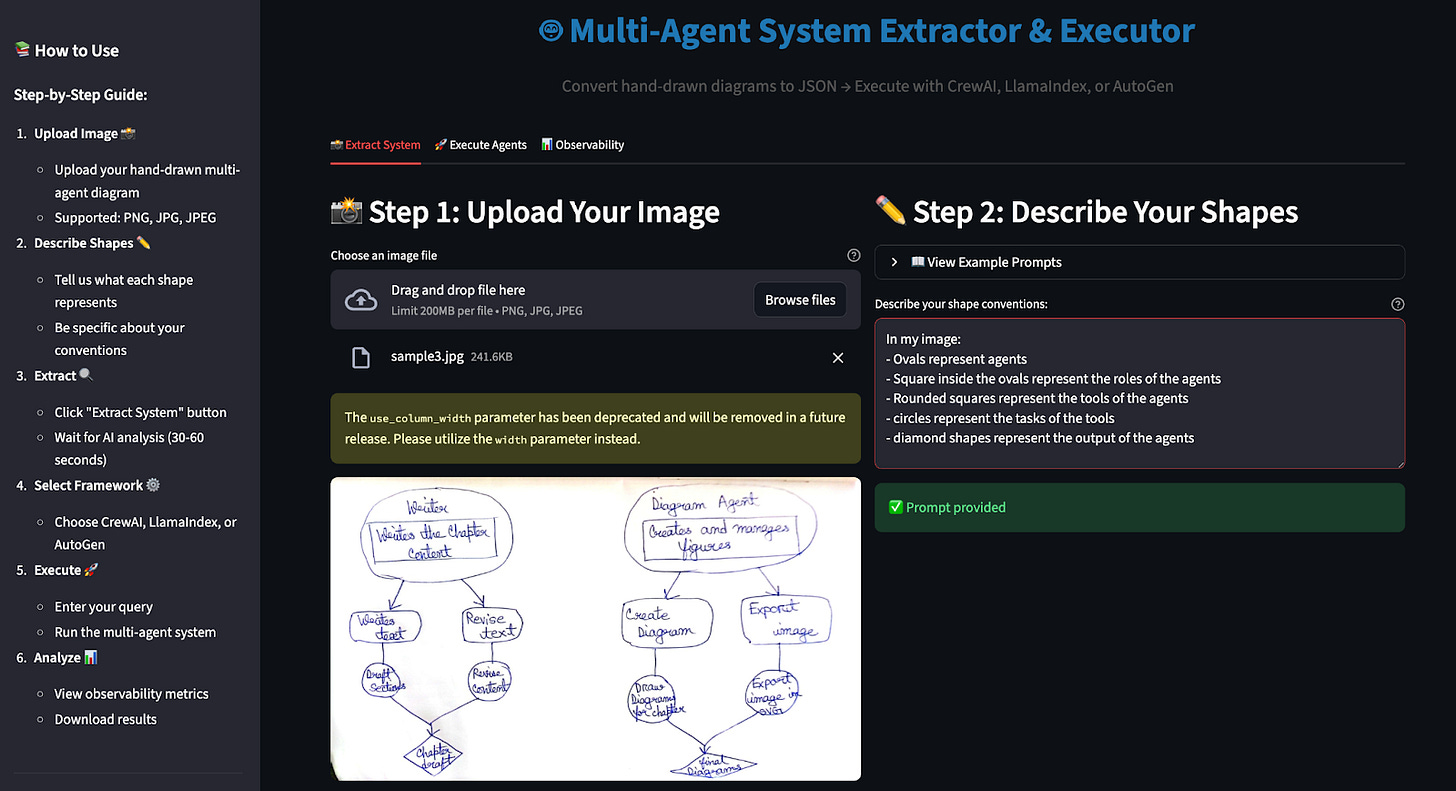

Here’s how we turn that drawing into something real. First, you upload a hand-drawn image of your agentic system. Then, you provide a short explanation of your drawing conventions:

Ovals represent agents,

Rounded rectangles represent tools,

Other shapes represent tasks and outputs.

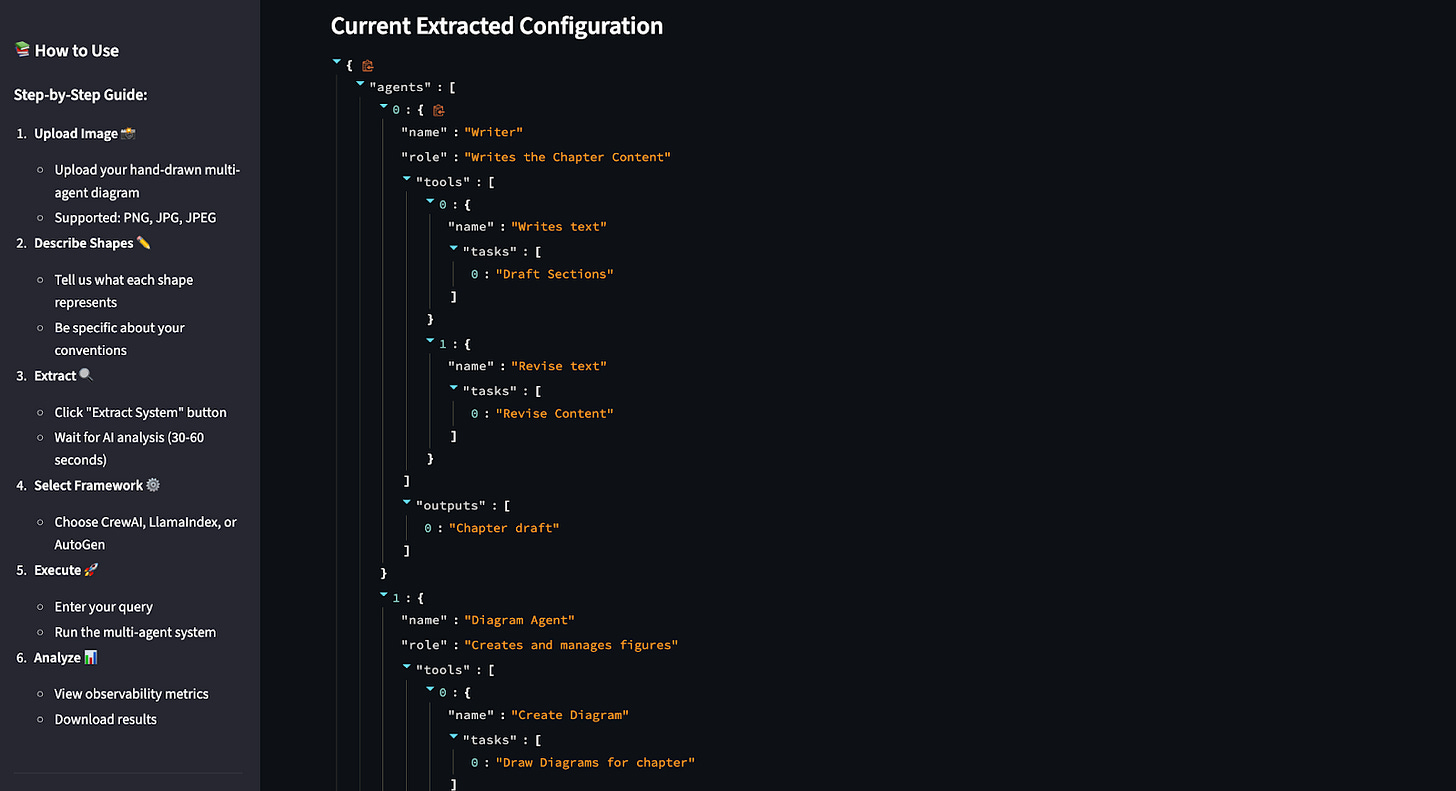

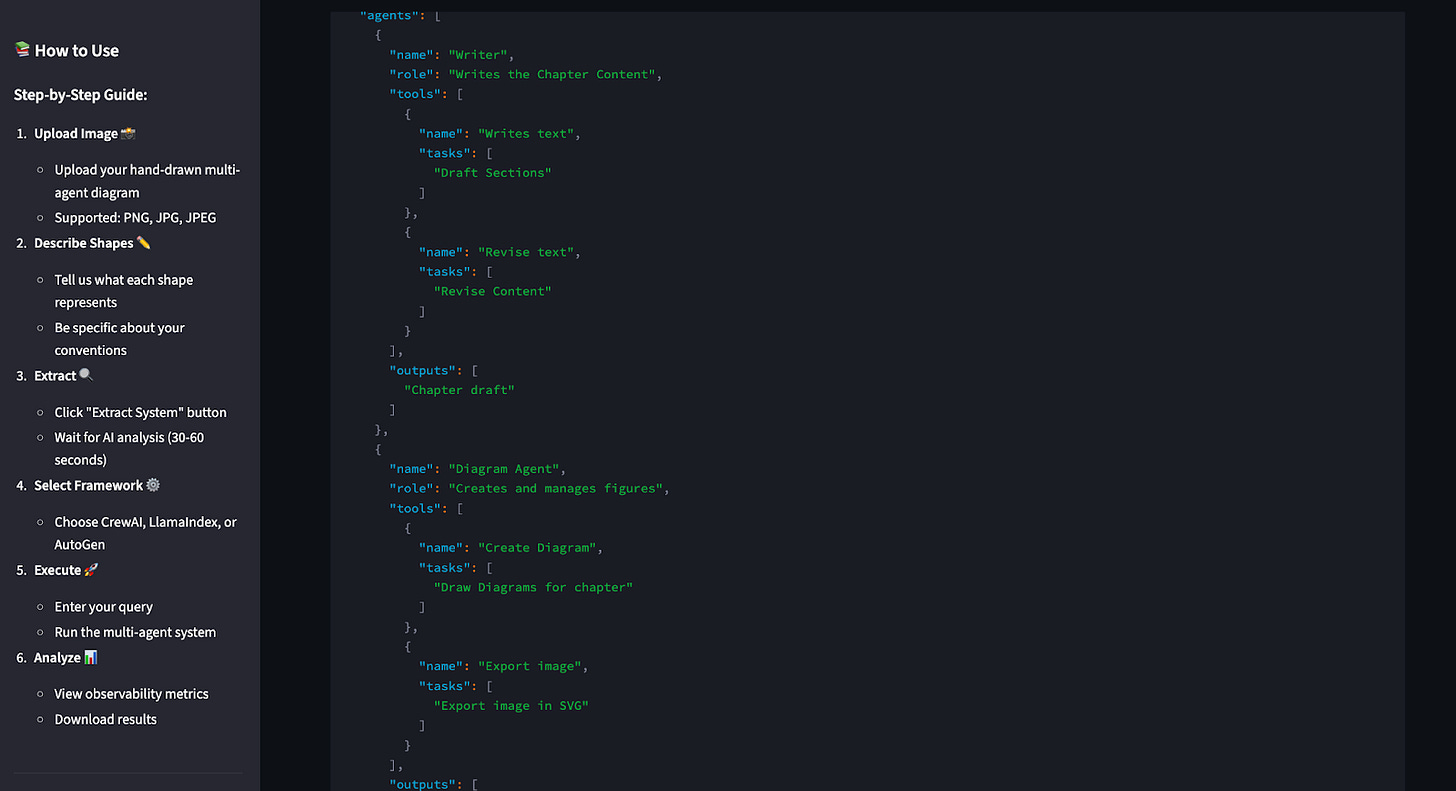

The system analyzes the image and the instructions together and extracts a structured JSON representation of the system. You can inspect either the raw JSON file or a clean, structured view of agents, tools, tasks, and outputs. The drawing becomes data. The idea becomes structure.
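To make the "drawing becomes data" step concrete, here is a minimal sketch of what such a JSON representation might look like for the two-agent publishing example, plus a small consistency check. The prototype's actual schema is not shown in this post, so every field name, agent name, and tool name below is an assumption chosen for illustration.

```python
import json

# Hypothetical spec for the publishing example. All field, agent, tool,
# and task names are placeholders, not the prototype's real schema.
SPEC_JSON = """
{
  "agents": [
    {"name": "chapter_writer", "tools": ["web_search"], "tasks": ["write_chapter"]},
    {"name": "diagram_creator", "tools": ["image_generator"], "tasks": ["create_diagrams"]}
  ],
  "tools": [{"name": "web_search"}, {"name": "image_generator"}],
  "tasks": [
    {"name": "write_chapter", "output": "chapter_draft"},
    {"name": "create_diagrams", "output": "chapter_diagrams"}
  ]
}
"""


def validate_spec(spec: dict) -> list[str]:
    """Return a list of problems: agents that reference undeclared tools or tasks."""
    tool_names = {t["name"] for t in spec["tools"]}
    task_names = {t["name"] for t in spec["tasks"]}
    problems = []
    for agent in spec["agents"]:
        for tool in agent["tools"]:
            if tool not in tool_names:
                problems.append(f"{agent['name']}: unknown tool {tool!r}")
        for task in agent["tasks"]:
            if task not in task_names:
                problems.append(f"{agent['name']}: unknown task {task!r}")
    return problems


spec = json.loads(SPEC_JSON)
problems = validate_spec(spec)
print(problems or "spec is consistent")
```

A check like this is useful because a vision model can misread a hand-drawn label; catching a dangling tool or task name before execution is cheaper than debugging a failed run.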

One Drawing, Multiple Agent Frameworks

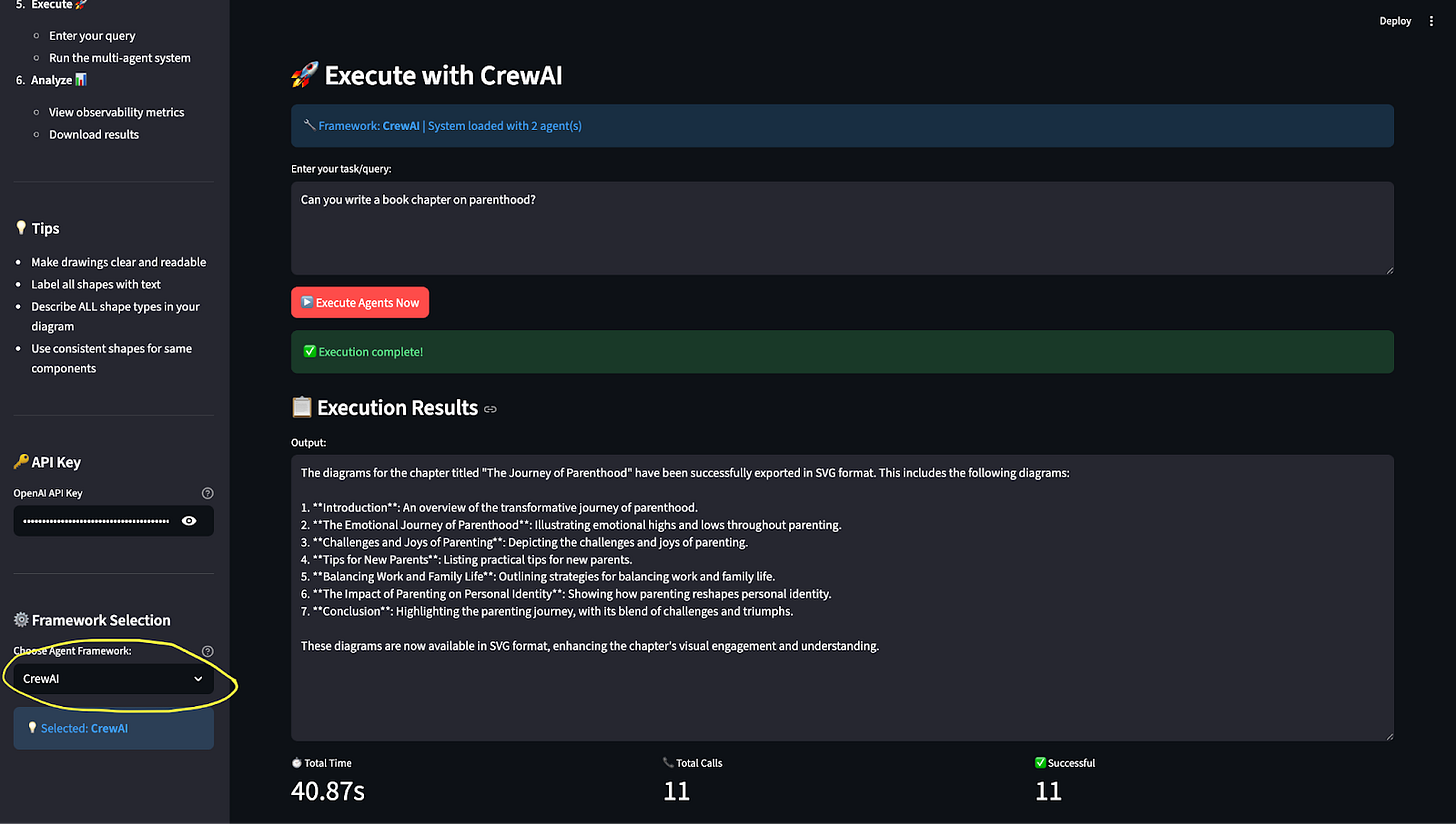

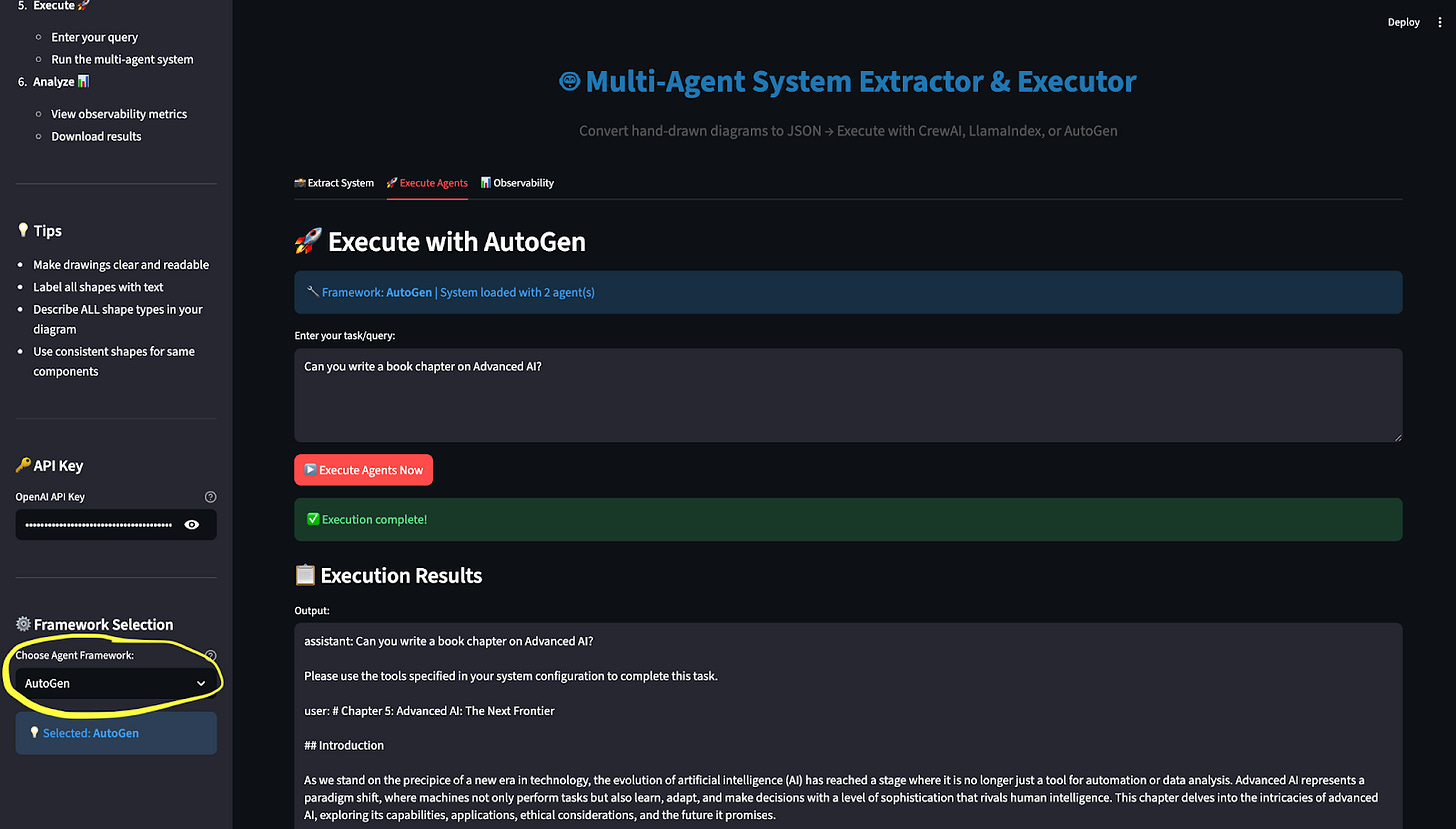

Once the JSON is generated, nothing needs to be rewritten. From a dropdown menu, you can choose how to run the system:

CrewAI

LlamaIndex

AutoGen

The system uses the exact same JSON to build an executable multi-agent system in the selected framework. (Internally, we discovered some compatibility challenges—LlamaIndex currently behaves differently from the other two due to OpenAI version mismatches—but the core idea remains intact.)
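One way to picture "same JSON, different framework" is a registry that maps each dropdown choice to a builder function taking the same spec. The sketch below is an assumption about the shape of such a dispatcher, not the prototype's code. The builders are placeholders that only return a plan; a real builder would import the chosen framework and construct its own agent and task objects.

```python
from typing import Callable

# Hypothetical spec, using the same assumed field names throughout this sketch.
spec = {
    "agents": [
        {"name": "chapter_writer", "tools": ["web_search"]},
        {"name": "diagram_creator", "tools": ["image_generator"]},
    ]
}


def _plan(framework: str, spec: dict) -> dict:
    """Placeholder for framework-specific construction: just describe the build."""
    return {"framework": framework, "agents": [a["name"] for a in spec["agents"]]}


def build_crewai(spec: dict) -> dict:
    return _plan("CrewAI", spec)


def build_llamaindex(spec: dict) -> dict:
    return _plan("LlamaIndex", spec)


def build_autogen(spec: dict) -> dict:
    return _plan("AutoGen", spec)


# The dropdown options map one-to-one onto builders that accept the same spec.
BUILDERS: dict[str, Callable[[dict], dict]] = {
    "CrewAI": build_crewai,
    "LlamaIndex": build_llamaindex,
    "AutoGen": build_autogen,
}


def build_system(framework: str, spec: dict) -> dict:
    """Look up the builder for the chosen framework and apply it to the spec."""
    try:
        builder = BUILDERS[framework]
    except KeyError:
        raise ValueError(f"unsupported framework: {framework!r}") from None
    return builder(spec)


print(build_system("AutoGen", spec))
```

Keeping the spec framework-neutral means a compatibility problem in one framework stays inside its builder and does not leak into the others.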

Running and Observing the System

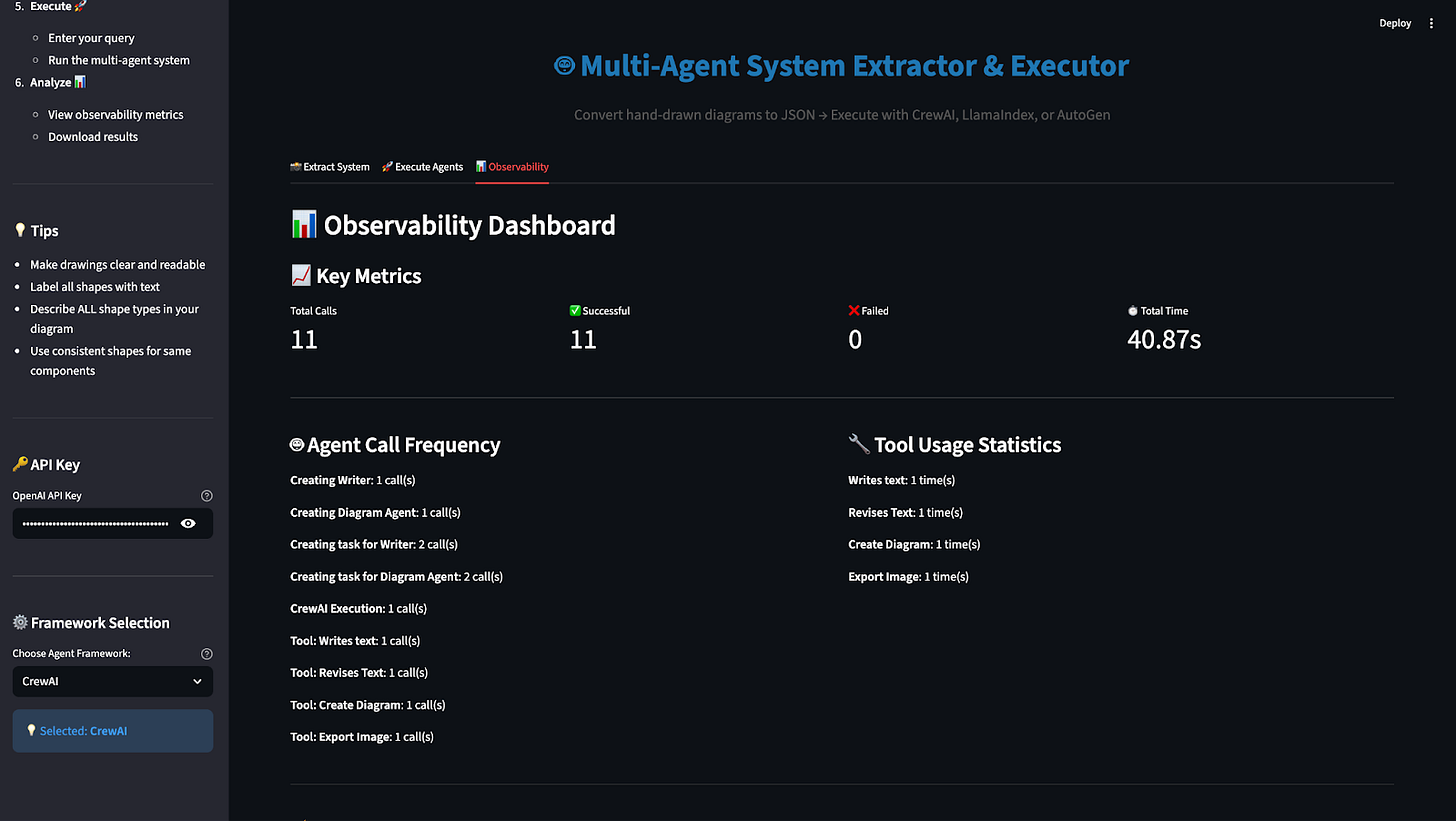

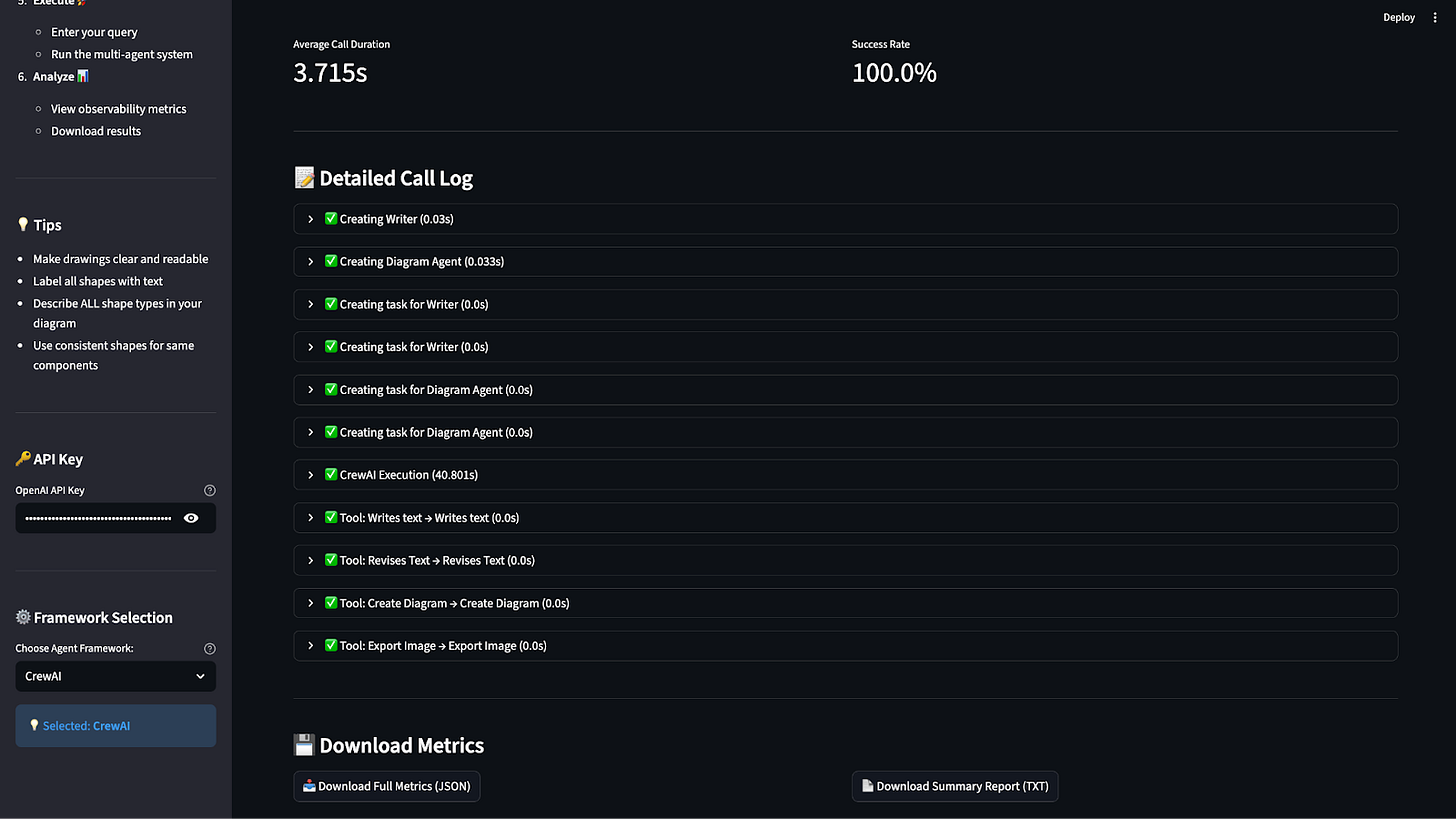

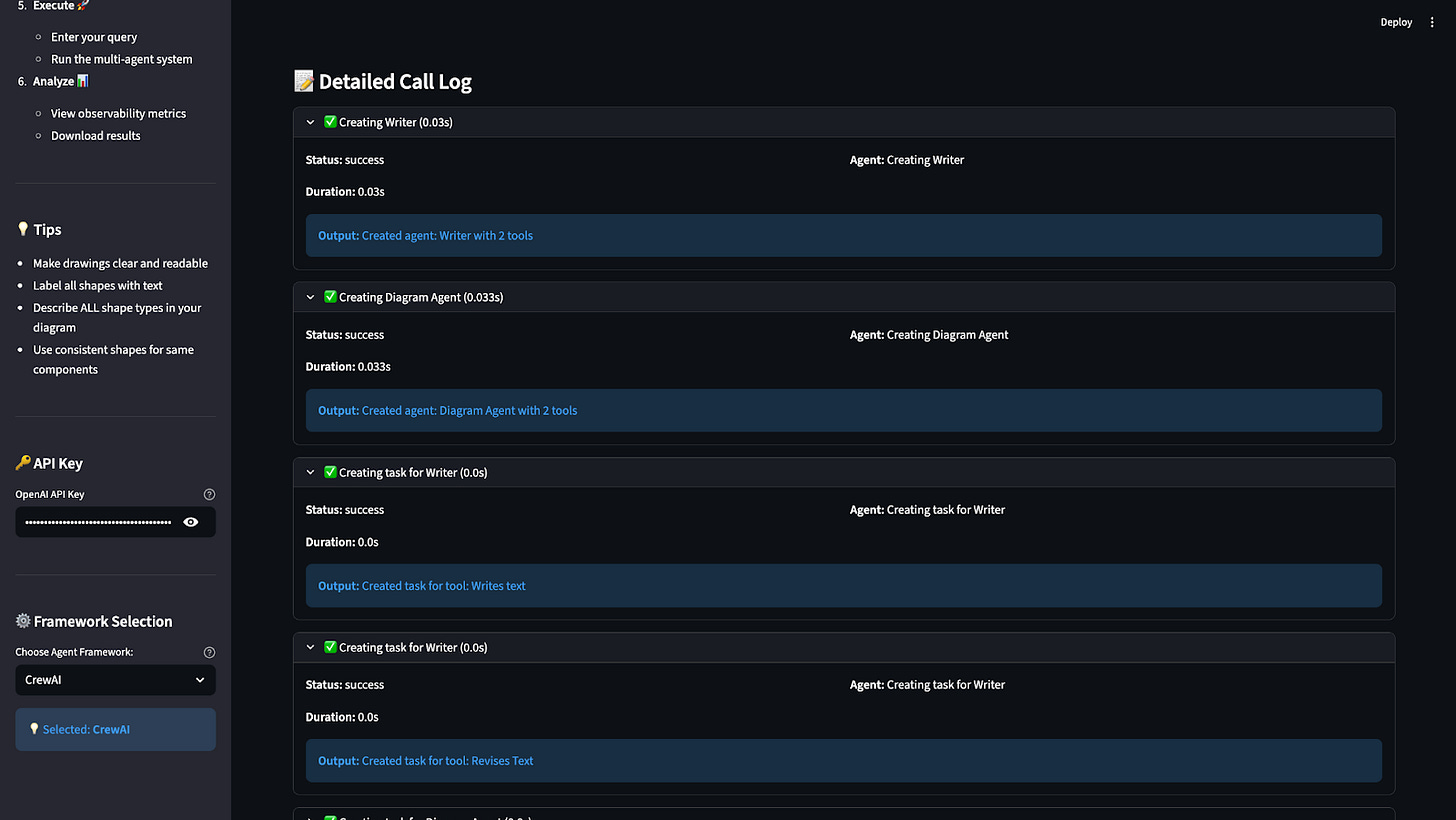

Once the system is built, you can execute it with a user query. For now, the output is only text-based. But more important than the output is what happens behind the scenes. After execution, you can open the observability tab and see:

which agents were called

which tools were used

how many times each component ran

success and failure counts

total execution time

You can even download a summary. The agentic system is no longer a black box. It becomes something you can inspect and reason about.
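The metrics listed above can be collected with a thin wrapper around each agent or tool call. The following is a minimal sketch under that assumption; `RunTracker` and the component labels are hypothetical names, and the prototype may gather its numbers differently (for example, through framework callbacks).

```python
import json
import time
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class RunTracker:
    calls: Counter = field(default_factory=Counter)
    successes: Counter = field(default_factory=Counter)
    failures: Counter = field(default_factory=Counter)
    total_seconds: float = 0.0  # sum of time spent inside tracked calls

    def record(self, component: str, fn, *args, **kwargs):
        """Run fn, counting the call and whether it returned or raised."""
        self.calls[component] += 1
        start = time.perf_counter()
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures[component] += 1
            raise
        else:
            self.successes[component] += 1
            return result
        finally:
            self.total_seconds += time.perf_counter() - start

    def summary(self) -> dict:
        return {
            "calls": dict(self.calls),
            "successes": sum(self.successes.values()),
            "failures": sum(self.failures.values()),
            "total_seconds": round(self.total_seconds, 3),
        }


tracker = RunTracker()
tracker.record("agent:chapter_writer", lambda q: f"draft for {q}", "Chapter 1")
tracker.record("tool:web_search", lambda q: ["result"], "Chapter 1")
try:
    tracker.record("tool:web_search", lambda: 1 / 0)  # simulated tool failure
except ZeroDivisionError:
    pass

summary = tracker.summary()
print(json.dumps(summary, indent=2))  # the kind of summary a user might download
```

Note that this version sums the time spent in tracked calls, so nested calls would be counted twice; a wall-clock timer around the whole run would be the simpler choice for a single total.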

This project is still in development, and there is more ahead:

We plan to generate framework-specific code instead of executing systems directly, reducing compatibility issues.

We want to build PDF-style templates, where users fill in shapes like a form—without needing to explain shape semantics.

We’re exploring QR-code-based templates, allowing users to start from predefined agentic patterns instantly.

A Closing Thought

Tools often ask humans to adapt. This work tries to reverse that. By letting people draw first—and treating those drawings as first-class inputs—we’re not just making agentic AI easier to build. We’re preserving the thinking process that leads to good systems in the first place.

Sometimes, understanding doesn’t start with code; it starts with a drawing.