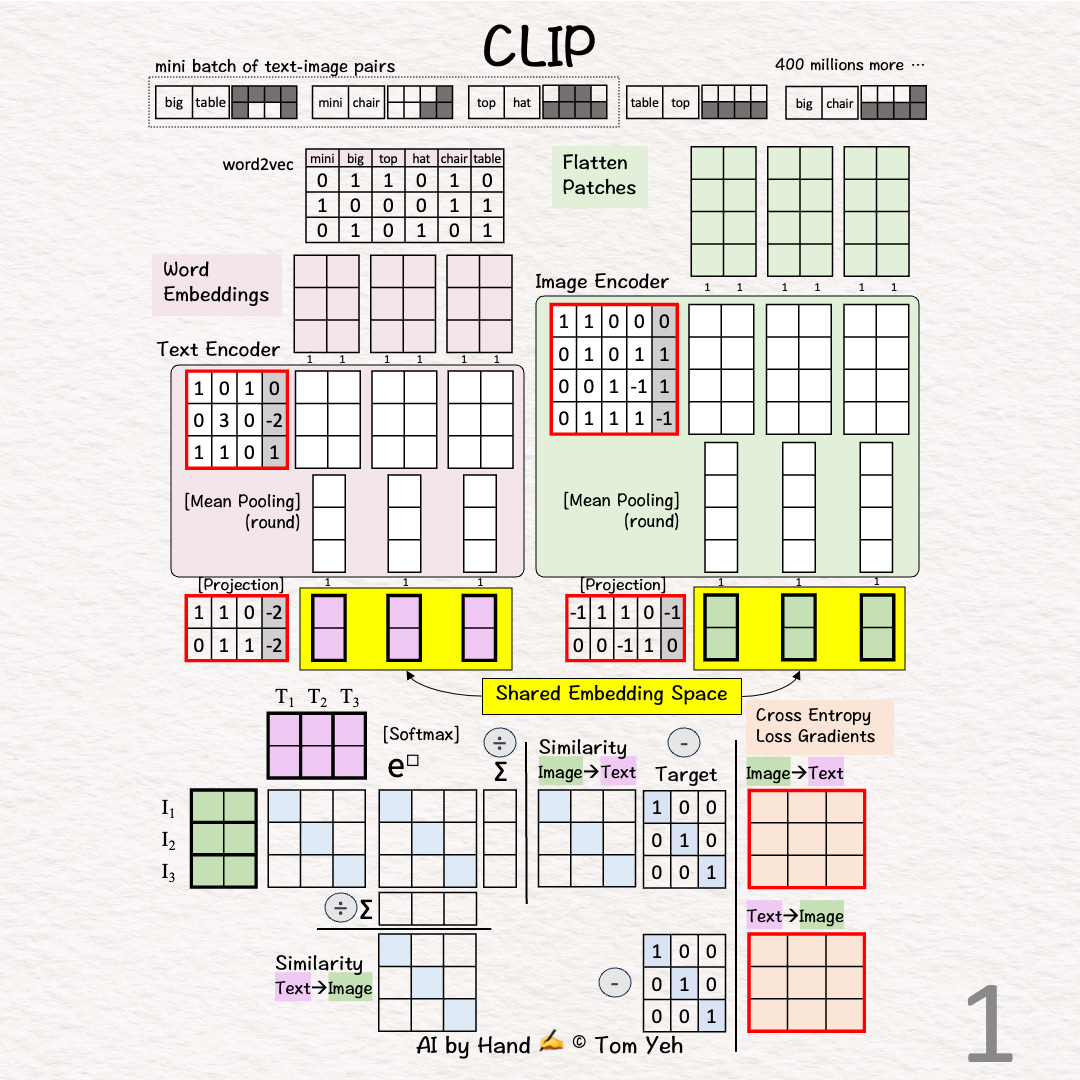

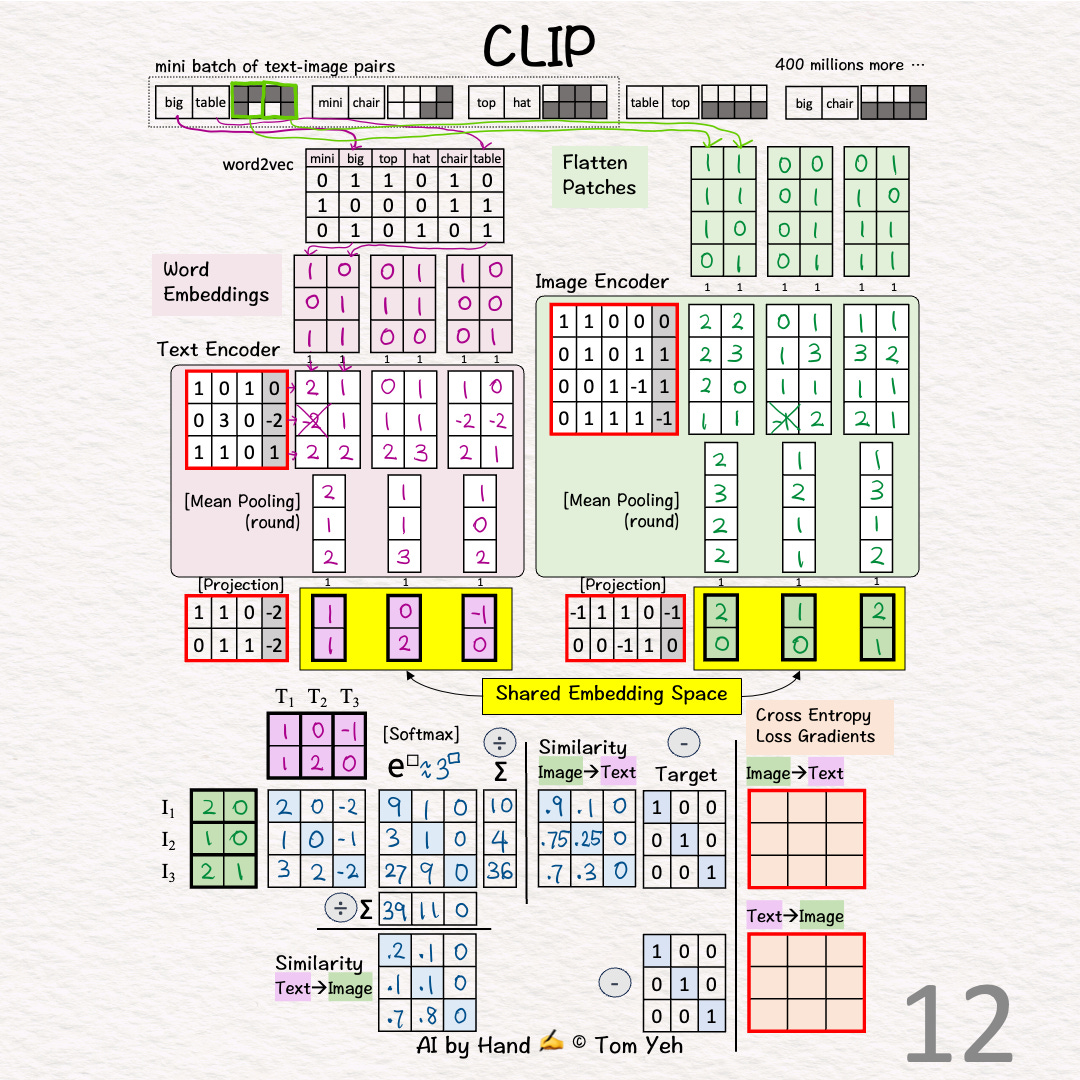

CLIP by Hand ✍️

The CLIP (Contrastive Language–Image Pre-training) model, a groundbreaking work by OpenAI, bridges computer vision and natural language processing by mapping images and text into one shared embedding space. It is the basis of many of the multi-modal foundation models we see today.

How does CLIP work?

Walkthrough

Goal: 🟨 Learn a shared embedding space for text and images

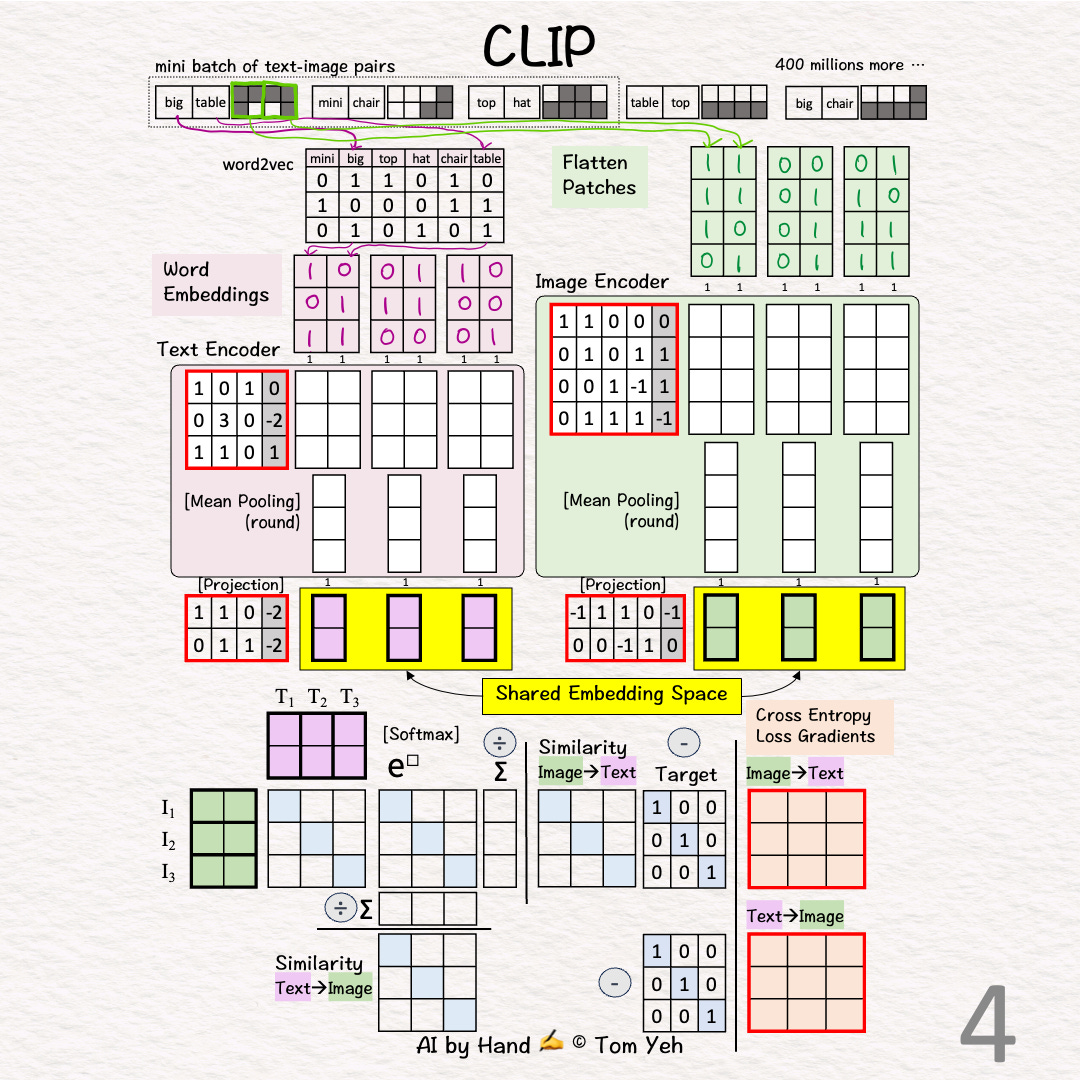

[1] Given

↳ A mini-batch of 3 text-image pairs

↳ OpenAI used 400 million text-image pairs to train its original CLIP model.

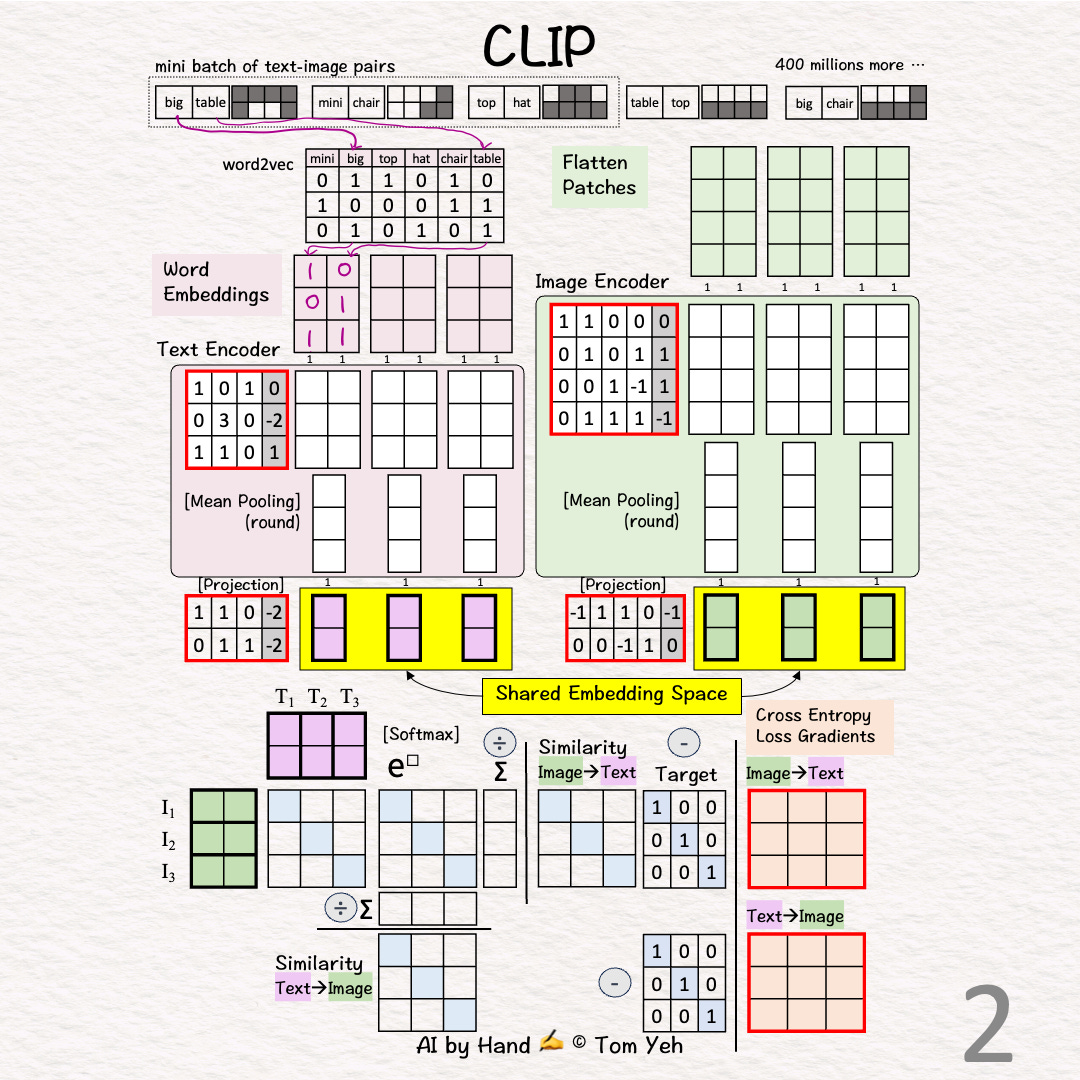

Process the 1st pair: "big table"

[2] 🟪 Text → 2 Vectors (3D)

↳ Look up a word embedding vector for each of the two words using word2vec.
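A minimal sketch of this lookup in plain Python. The embedding table is hypothetical: its 3D values are made up and only stand in for word2vec vectors, and splitting on whitespace stands in for a real tokenizer. (The original CLIP does not use word2vec; it uses a byte-pair-encoding tokenizer with learned token embeddings.)

```python
# Hypothetical 3D embedding table; the values are made up and merely
# stand in for word2vec vectors.
EMBEDDINGS = {
    "big": [1, 0, 1],
    "table": [0, 2, 1],
}


def text_to_vectors(text):
    """Split on whitespace and look up one 3D vector per word."""
    return [EMBEDDINGS[word] for word in text.split()]


vectors = text_to_vectors("big table")
print(vectors)  # [[1, 0, 1], [0, 2, 1]]
```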

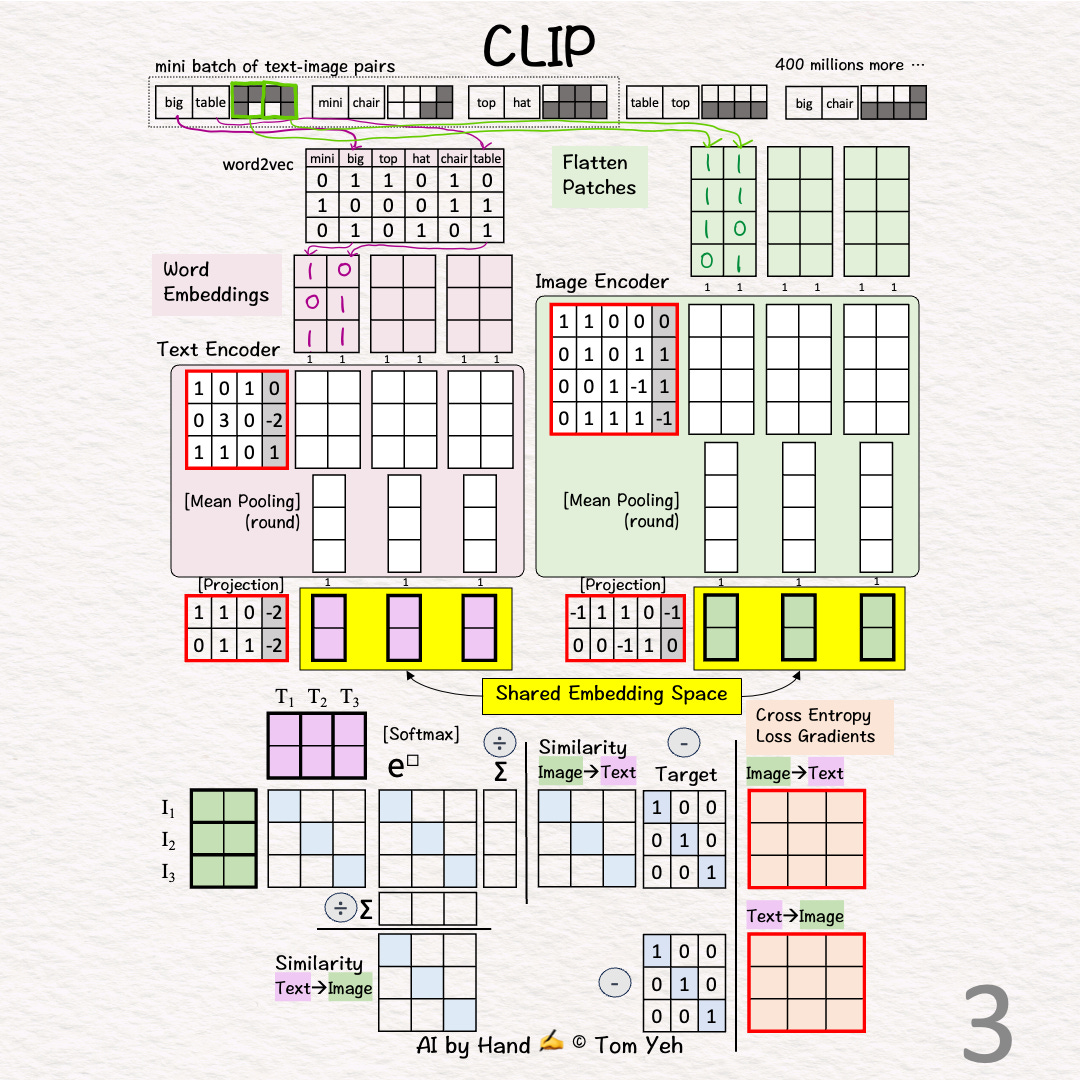

[3] 🟩 Image → 2 Vectors (4D)

↳ Divide the image into two patches.

↳ Flatten each patch into a 4D vector.
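A sketch of patching and flattening, assuming a 2×4 grayscale image split into a left and a right 2×2 patch, with each patch flattened row by row into a 4D vector. The pixel values and the helper name `image_to_patch_vectors` are hypothetical.

```python
def image_to_patch_vectors(image, patch_width):
    """Split an image (a list of pixel rows) into side-by-side patches
    of width `patch_width`, then flatten each patch row by row."""
    width = len(image[0])
    vectors = []
    for start in range(0, width, patch_width):
        patch = [row[start:start + patch_width] for row in image]
        vectors.append([pixel for row in patch for pixel in row])
    return vectors


# Hypothetical 2x4 grayscale image (made-up pixel values).
image = [
    [1, 0, 0, 1],
    [1, 1, 0, 1],
]
patches = image_to_patch_vectors(image, patch_width=2)
print(patches)  # [[1, 0, 1, 1], [0, 1, 0, 1]]
```

Vision Transformer image encoders follow the same idea at a larger scale, for example with 16×16 or 32×32 pixel patches.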

[4] Process other pairs

↳ Repeat [2]-[3] for the remaining two pairs.

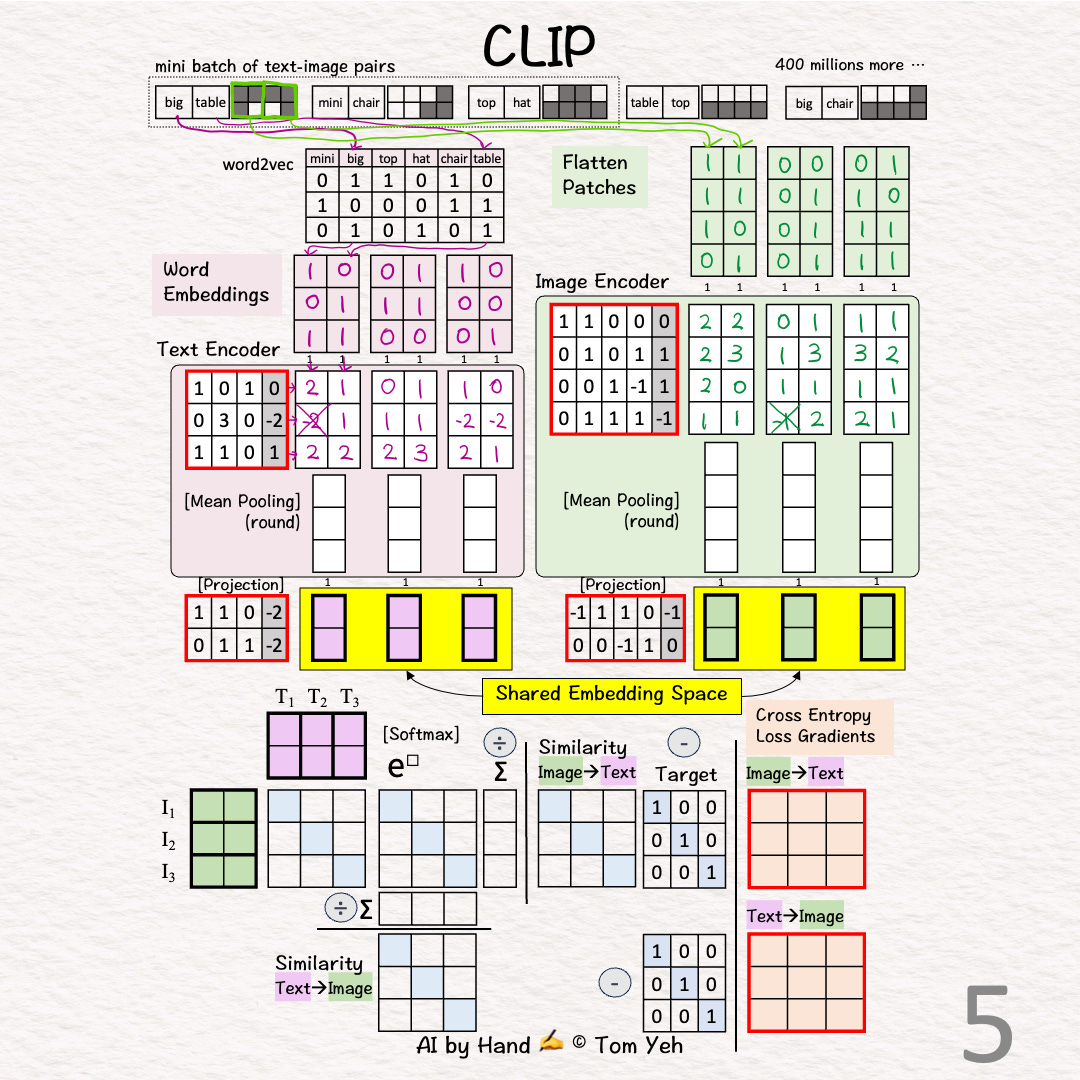

[5] 🟪 Text Encoder & 🟩 Image Encoder

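↳ Encode each input vector into a feature vector.

↳ Here, both encoders are simple one-layer perceptrons (linear + ReLU).

↳ In practice, the encoders are much larger networks: the original CLIP used a Transformer as the text encoder and either a ResNet or a Vision Transformer (ViT) as the image encoder.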
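A sketch of a one-layer perceptron encoder, ReLU(Wx + b), applied to each input vector independently with the same weights. The weights, bias, input vectors, and the helper name `linear_relu` are all made up for illustration; the image encoder would be the same function with a 4×4 weight matrix and a 4D bias.

```python
def linear_relu(weights, bias, x):
    """One-layer perceptron: ReLU(W x + b), with W given as a list of rows."""
    return [
        max(0, sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(weights, bias)
    ]


# Hypothetical 3x3 text-encoder weights and bias (made-up values).
W_text = [
    [1, 0, 1],
    [0, 1, -1],
    [1, 1, 0],
]
b_text = [0, 0, -1]

# Two made-up 3D word vectors, one per word of "big table".
token_vectors = [[1, 0, 1], [0, 2, 1]]

# The same weights are applied to every token vector.
text_features = [linear_relu(W_text, b_text, v) for v in token_vectors]
print(text_features)  # [[2, 0, 0], [1, 1, 1]]
```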

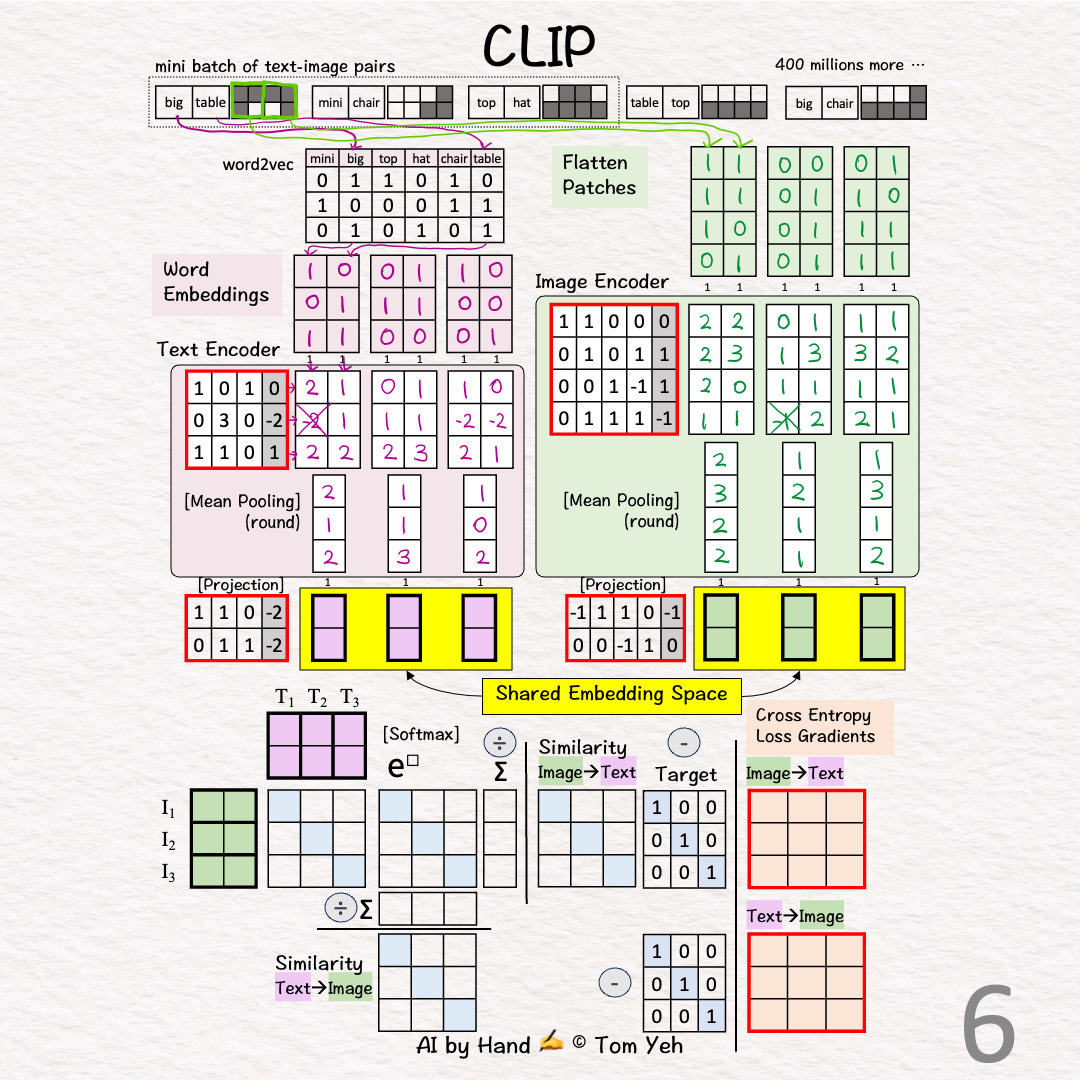

[6] 🟪 🟩 Mean Pooling: 2 → 1 vector

↳ Average the 2 feature vectors into a single vector by taking the mean across the columns.

↳ The goal is to represent each image or text with a single vector.
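Mean pooling in code. The two 4D patch features below are made up, chosen so that the pooled result is [2 3 1 1], the corrected value for the first image noted at the end of this post. `zip(*vectors)` walks over the vectors element by element, which matches averaging across the columns in the figure.

```python
def mean_pool(vectors):
    """Average a list of equal-length feature vectors element-wise."""
    n = len(vectors)
    return [sum(values) / n for values in zip(*vectors)]


# Hypothetical 4D image features for the two patches (made-up values).
patch_features = [[3, 2, 0, 1], [1, 4, 2, 1]]
pooled = mean_pool(patch_features)
print(pooled)  # [2.0, 3.0, 1.0, 1.0]
```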

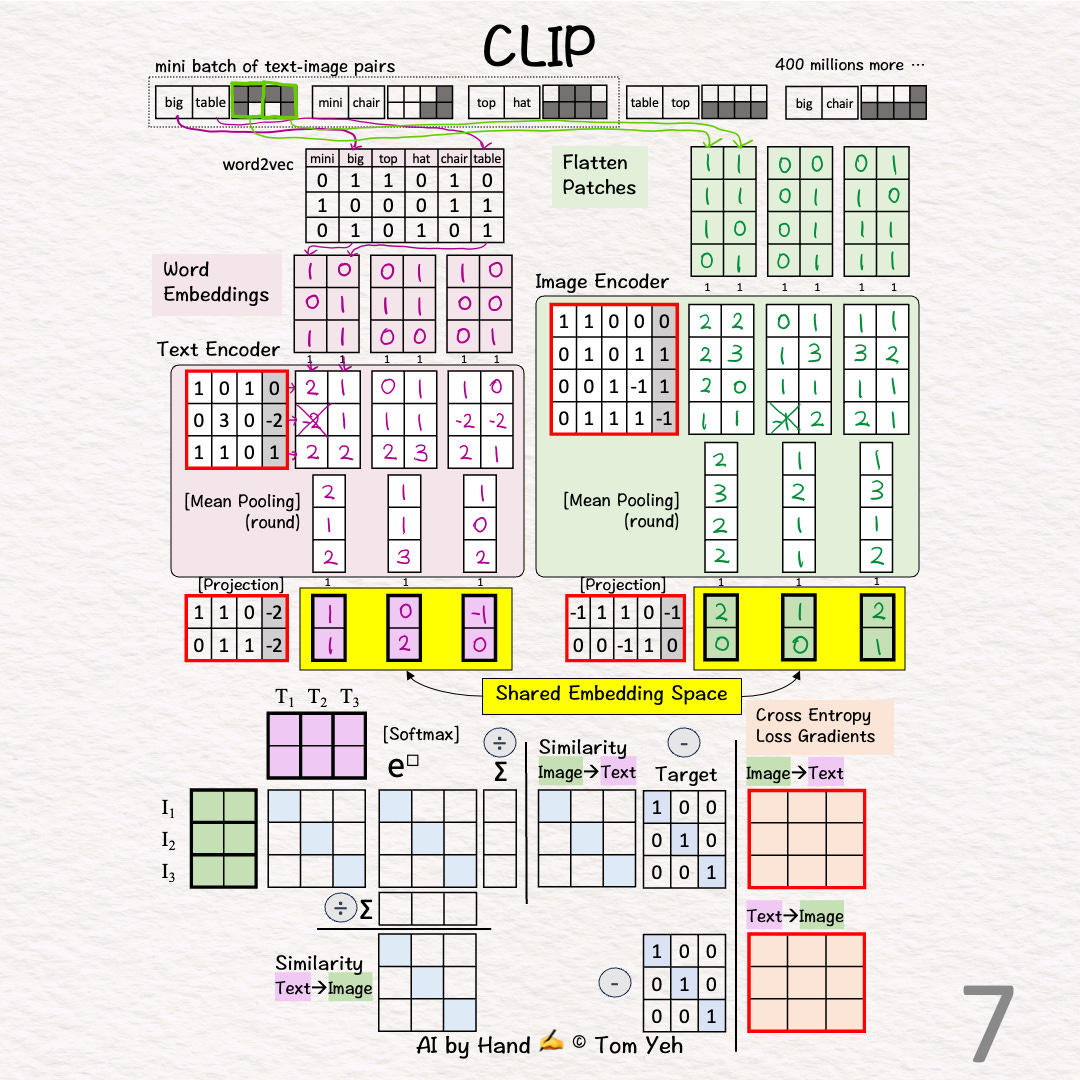

[7] 🟪 🟩 -> 🟨 Projection

↳ Note that the text and image feature vectors from the encoders have different dimensions (3D vs. 4D).

↳ Use a separate linear layer for each modality to project the text and image vectors into a 2D shared embedding space.
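A sketch of the two projections with made-up weights: a 2×3 matrix maps the 3D text feature and a 2×4 matrix maps the 4D image feature into the same 2D space. The pooled text feature is hypothetical, and bias terms are omitted for brevity.

```python
def project(weights, x):
    """Linear projection W x (bias omitted for brevity)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


# Hypothetical projection matrices (made-up values).
P_text = [[1, 0, 1],
          [0, 1, 0]]            # 2x3: 3D text feature -> 2D
P_image = [[1, 0, 0, 1],
           [0, 1, -1, 0]]       # 2x4: 4D image feature -> 2D

text_embedding = project(P_text, [1, 2, 0])        # made-up pooled text feature
image_embedding = project(P_image, [2, 3, 1, 1])   # pooled image feature
print(text_embedding, image_embedding)  # [1, 2] [3, 2]
```

Using two different matrices is what lets inputs of different sizes end up comparable: after projection both vectors have the same length, so a dot product between them is defined.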

🏋️ Contrastive Pre-training 🏋️

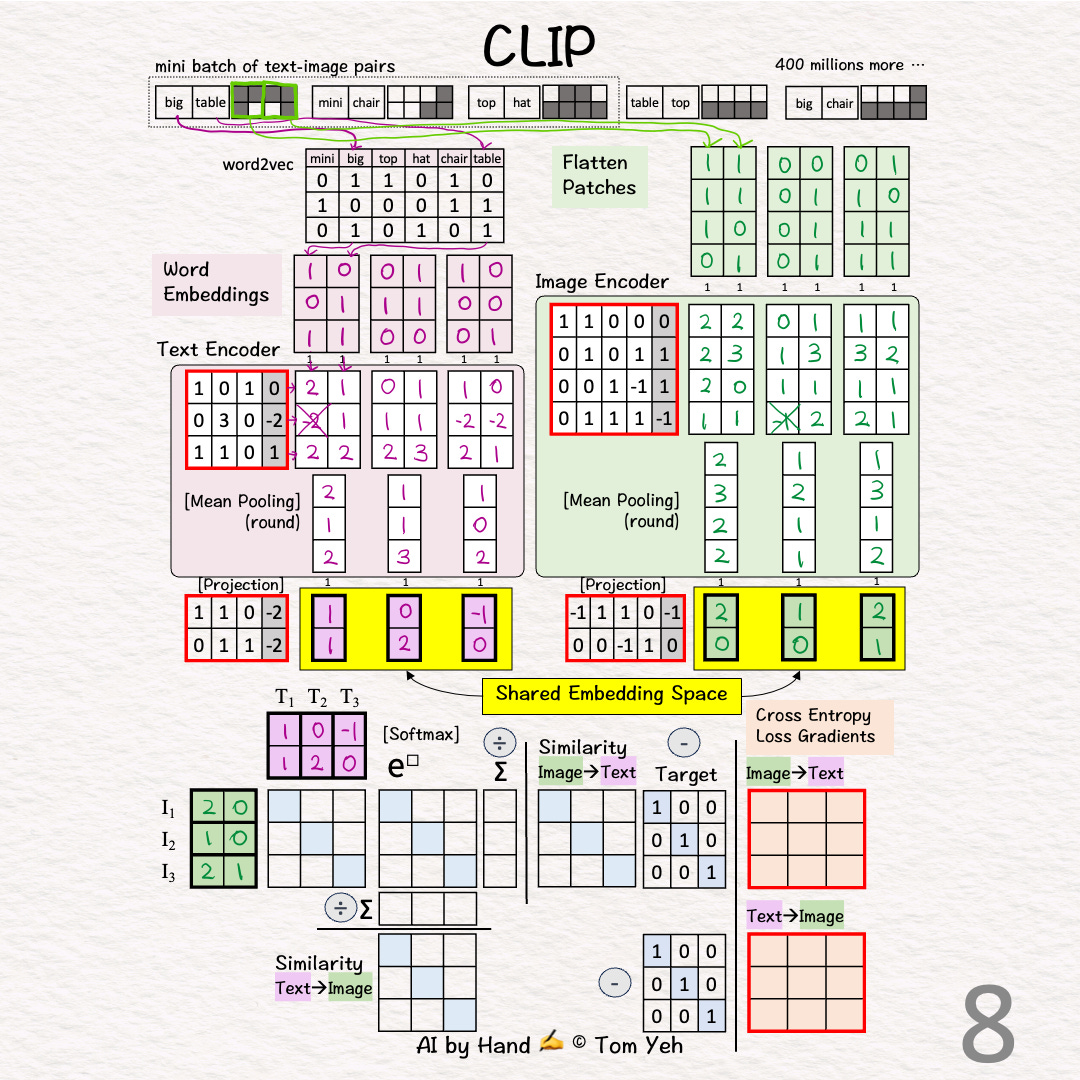

[8] Prepare for MatMul

↳ Copy the text vectors (T1, T2, T3).

↳ Copy the transpose of the image vectors (I1, I2, I3).

↳ All of them live in the 2D shared embedding space.

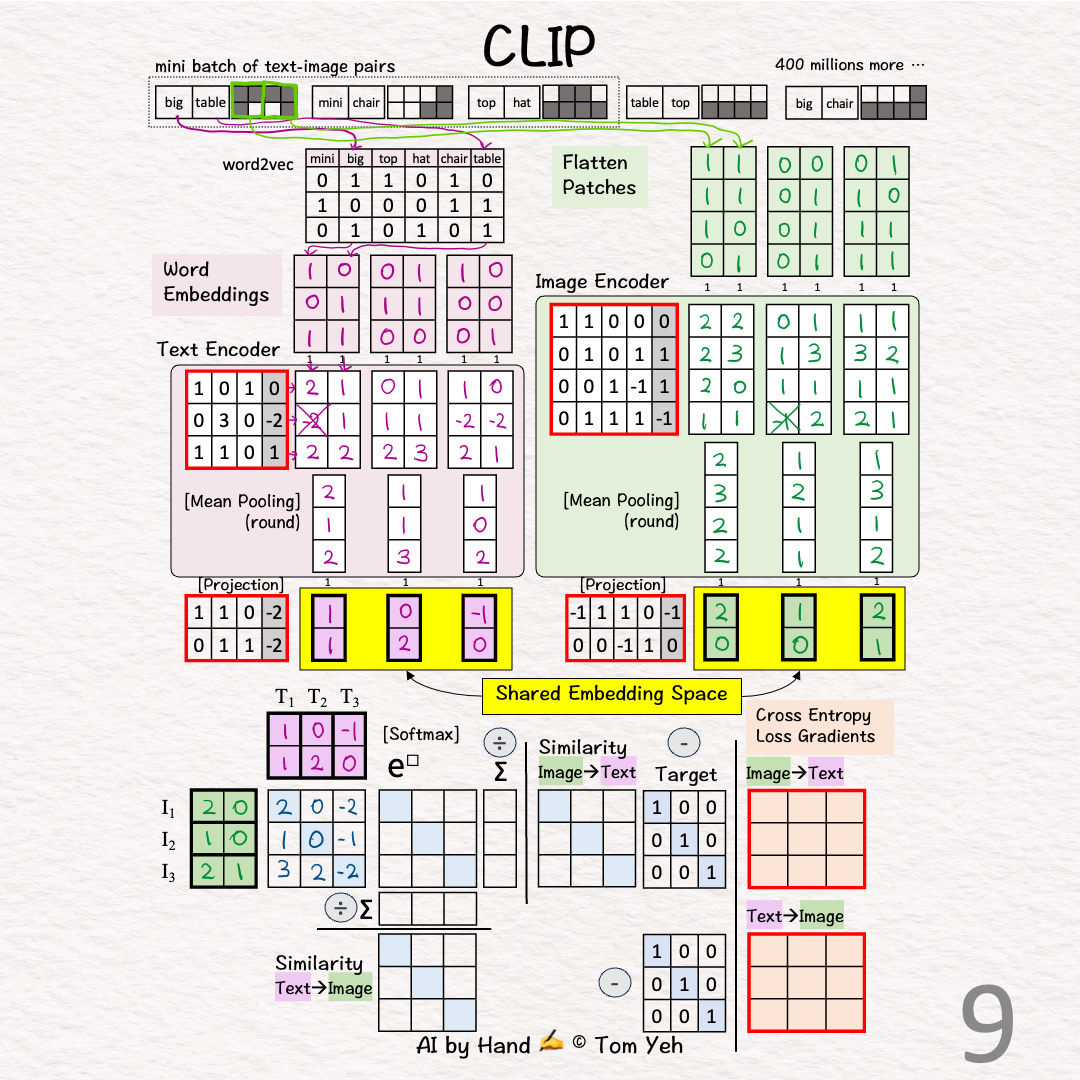

[9] 🟦 MatMul

↳ Multiply the T and I matrices to get a 3×3 matrix.

↳ This is equivalent to taking the dot product between every pair of image and text vectors.

↳ The purpose is to use the dot product as an estimate of the similarity between an image and a text.
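A sketch of the MatMul with made-up 2D embeddings. Image vectors are stacked as rows and text vectors as columns, so entry [i][j] is the dot product I_i · T_j; this orientation is chosen to match step [11], where rows correspond to images. One detail the hand example skips: the original CLIP L2-normalizes both embeddings before this product (so each entry is a cosine similarity) and scales the result by a learned temperature.

```python
def matmul(A, B):
    """Plain matrix product of A (n x k) and B (k x m), as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]


# Hypothetical 2D embeddings for the three pairs (made-up values).
image_embs = [[1, 0], [0, 1], [-1, -1]]   # I1, I2, I3, one per row
text_embs = [[2, 0], [0, 1], [-1, -1]]    # T1, T2, T3

# Put the text vectors in columns so that entry [i][j] = I_i . T_j.
text_matrix = [list(col) for col in zip(*text_embs)]   # 2 x 3
scores = matmul(image_embs, text_matrix)                 # 3 x 3
print(scores)  # [[2, 0, -1], [0, 1, -1], [-2, -1, 2]]
```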

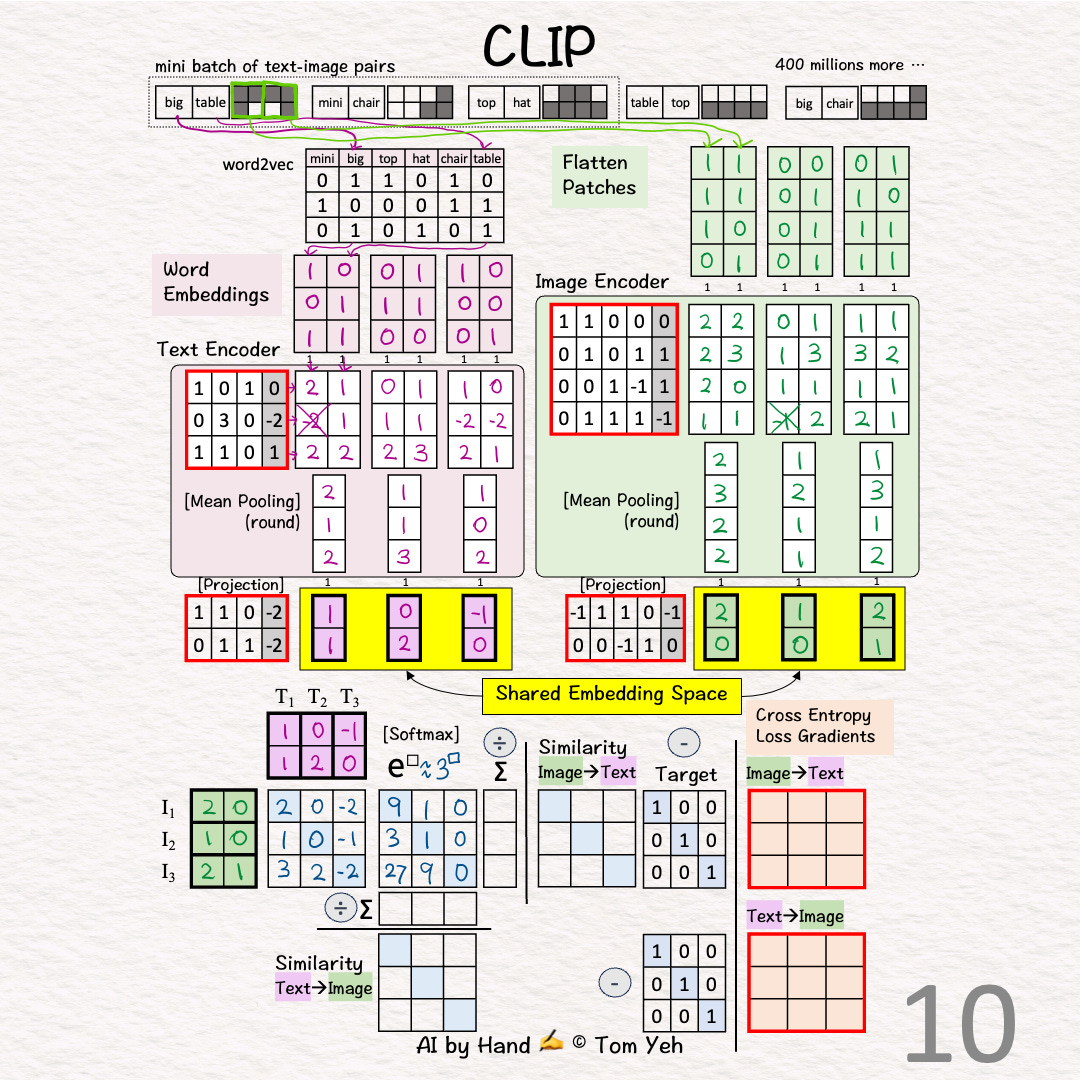

[10] 🟦 Softmax: e^x

↳ Raise e to the power of the number in each cell.

↳ To simplify hand calculation, we approximate e^□ with 3^□.
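A sketch of the exponentiation step on a made-up 3×3 score matrix, using Python's `fractions.Fraction` so the base-3 values stay exact. Because 3^x = e^(x·ln 3), the shortcut is the same as multiplying every score by ln 3 ≈ 1.1 before applying e^x, so it slightly sharpens the softmax without changing which entries are largest.

```python
import math
from fractions import Fraction

# Hypothetical MatMul scores (made-up values); rows = images, columns = texts.
scores = [[2, 0, -1], [0, 1, -1], [-2, -1, 2]]

# Hand-calculation shortcut: 3**x instead of e**x, kept exact with Fraction.
approx = [[Fraction(3) ** s for s in row] for row in scores]

# What a real implementation computes.
exact = [[math.exp(s) for s in row] for row in scores]

print([[str(v) for v in row] for row in approx])
# [['9', '1', '1/3'], ['1', '3', '1/3'], ['1/9', '1/3', '9']]
```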

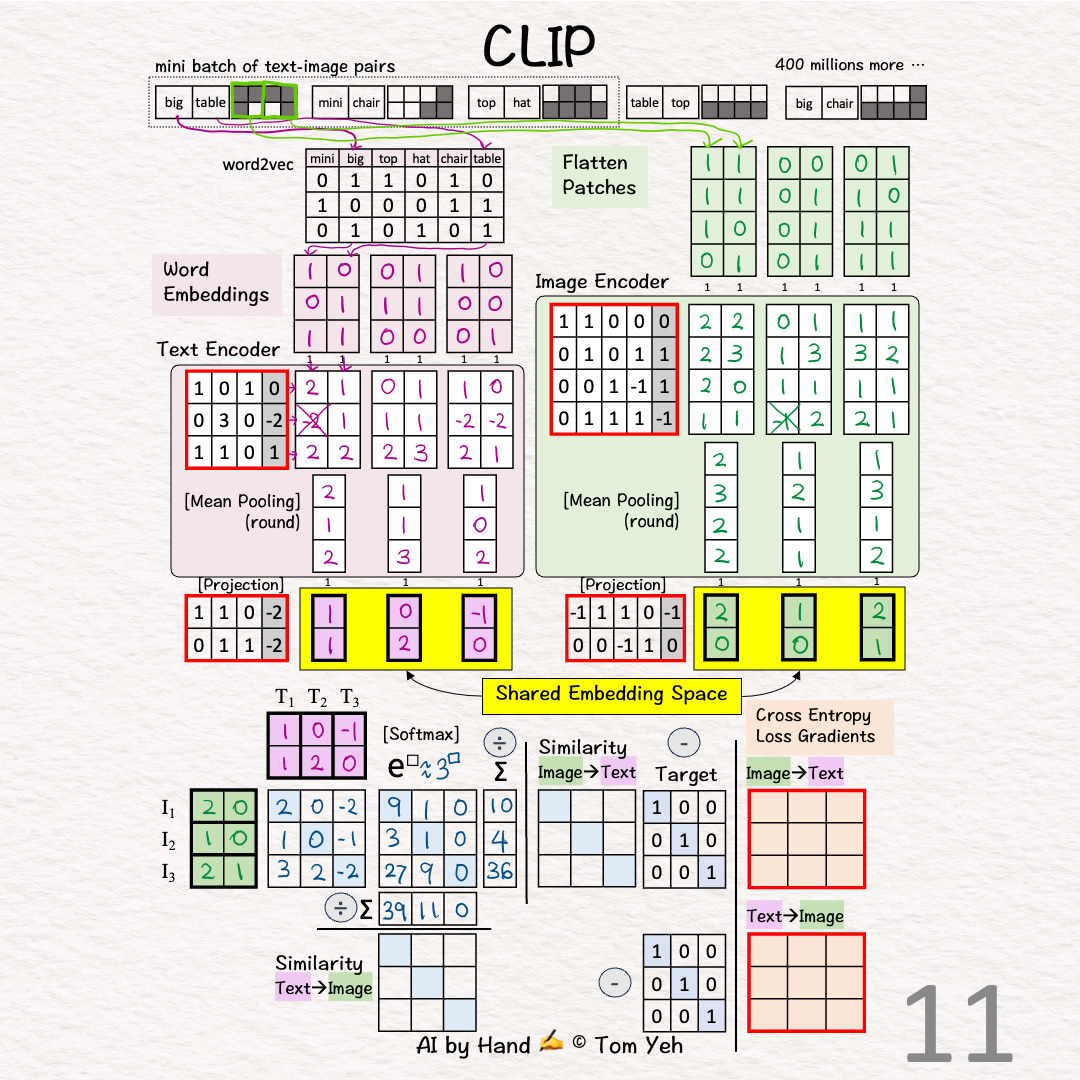

[11] 🟦 Softmax: ∑

↳ Sum each row for 🟩 image→🟪 text.

↳ Sum each column for 🟪 text→🟩 image.
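A sketch of the two kinds of sums on a made-up 3×3 matrix of base-3 exponentials (rows are images, columns are texts), with exact fractions. Row sums serve the image→text direction and column sums serve the text→image direction.

```python
from fractions import Fraction as F

# Hypothetical 3**x values (made up); rows = images, columns = texts.
exp_scores = [[F(9), F(1), F(1, 3)],
              [F(1), F(3), F(1, 3)],
              [F(1, 9), F(1, 3), F(9)]]

row_sums = [sum(row) for row in exp_scores]          # image -> text
col_sums = [sum(col) for col in zip(*exp_scores)]    # text -> image
print([str(s) for s in row_sums])  # ['31/3', '13/3', '85/9']
print([str(s) for s in col_sums])  # ['91/9', '13/3', '29/3']
```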

[12] 🟦 Softmax: 1 / sum

↳ Divide each element by the column sum to obtain a similarity matrix for 🟪 text→🟩 image

↳ Divide each element by the row sum to obtain a similarity matrix for 🟩 image→🟪 text
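A sketch of the division step on a made-up matrix of base-3 exponentials (rows are images, columns are texts). After dividing by row sums, every row adds up to 1 (image→text probabilities); after dividing by column sums, every column adds up to 1 (text→image probabilities).

```python
from fractions import Fraction as F

# Hypothetical 3**x values (made up); rows = images, columns = texts.
exp_scores = [[F(9), F(1), F(1, 3)],
              [F(1), F(3), F(1, 3)],
              [F(1, 9), F(1, 3), F(9)]]

# Image -> text: divide each entry by its row sum.
img_to_txt = [[v / sum(row) for v in row] for row in exp_scores]

# Text -> image: divide each entry by its column sum.
col_sums = [sum(col) for col in zip(*exp_scores)]
txt_to_img = [[v / col_sums[j] for j, v in enumerate(row)]
              for row in exp_scores]

print([str(v) for v in img_to_txt[0]])      # ['27/31', '3/31', '1/31']
print([str(row[0]) for row in txt_to_img])  # ['81/91', '9/91', '1/91']
```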

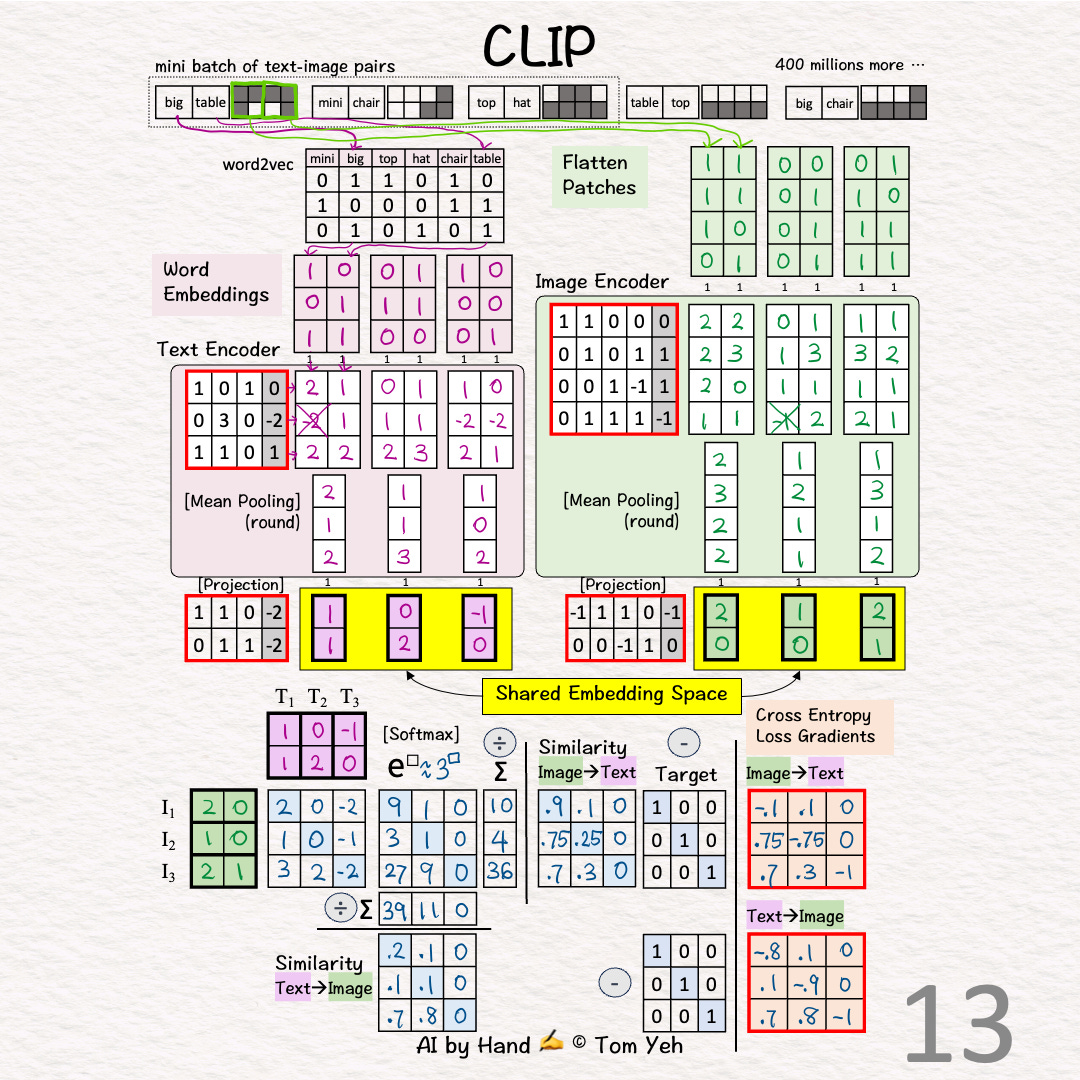

[13] 🟥 Loss Gradients

↳ The "targets" for both similarity matrices are identity matrices.

↳ Why? If I and T come from the same pair (i = j), we want the highest possible value, 1; otherwise we want 0.

↳ Apply the simple equation [Similarity - Target] to compute the gradients for both directions.

↳ Why so simple? When Softmax is followed by Cross-Entropy Loss, the derivative of the loss with respect to the pre-softmax scores (the MatMul outputs) simplifies to exactly Similarity - Target.

↳ These gradients kick off the backpropagation process that updates the weights and biases of the encoders and projection layers (red borders).
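A sketch of the gradient step on made-up probability matrices (rows are images, columns are texts). The shortcut comes from a standard result: for one row of scores z with p = softmax(z), a one-hot target y, and loss L = −Σ_k y_k log p_k, the derivative is ∂L/∂z_j = p_j − y_j. The helper names `identity` and `grad` are hypothetical. In the original CLIP, the final loss is the average of the two directions' cross-entropies over a batch of N pairs, so the gradient reaching the score matrix is the sum of the two gradient matrices divided by 2N.

```python
from fractions import Fraction as F

# Hypothetical softmax outputs (made up); rows = images, columns = texts.
img_to_txt = [[F(27, 31), F(3, 31), F(1, 31)],    # each row sums to 1
              [F(3, 13), F(9, 13), F(1, 13)],
              [F(1, 85), F(3, 85), F(81, 85)]]
txt_to_img = [[F(81, 91), F(3, 13), F(1, 29)],    # each column sums to 1
              [F(9, 91), F(9, 13), F(1, 29)],
              [F(1, 91), F(1, 13), F(27, 29)]]


def identity(n):
    """n x n identity matrix: the target for matched pairs (i == j)."""
    return [[F(1 if i == j else 0) for j in range(n)] for i in range(n)]


def grad(similarity, target):
    """Softmax + cross-entropy gradient w.r.t. the pre-softmax scores."""
    return [[s - t for s, t in zip(srow, trow)]
            for srow, trow in zip(similarity, target)]


N = 3
target = identity(N)
g_img = grad(img_to_txt, target)   # image -> text direction
g_txt = grad(txt_to_img, target)   # text -> image direction

# Combined gradient on the score matrix for the averaged symmetric loss.
g_scores = [[(a + b) / (2 * N) for a, b in zip(ra, rb)]
            for ra, rb in zip(g_img, g_txt)]
print([str(v) for v in g_img[0]])  # ['-4/31', '3/31', '1/31']
```

Each row of `g_img` and each column of `g_txt` sums to zero: the matched pair is pushed up by exactly as much as the mismatched pairs are pushed down.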


Correction: in step [6], the mean pooling of the first image should be [2 3 1 1].