Cross Entropy Loss

Essential AI Math Excel Blueprints

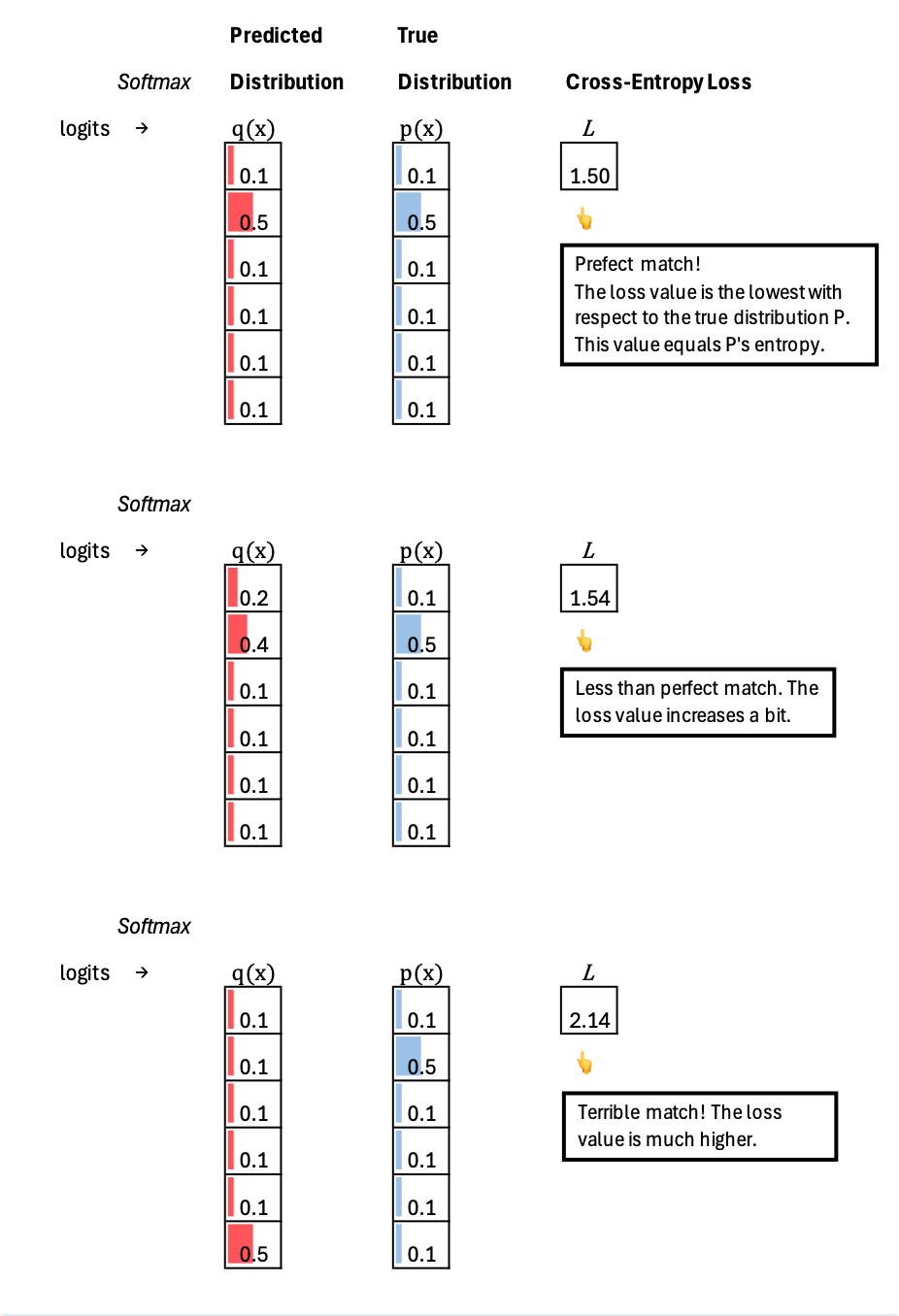

Cross Entropy Loss measures how well the model’s predicted distribution Q matches the true distribution P. The predicted distribution usually comes from a softmax applied to the logits (the raw scores produced by the model), while the true distribution usually comes from labeled data. A low cross entropy value means the model assigns high probability to what actually occurs in the ground truth, so the predicted distribution is closely aligned with the true one. A high cross entropy value means the model assigns low probability to what actually occurs, so the two distributions are badly mismatched.
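To make the description above concrete, here is a minimal Python sketch using the standard definition H(P, Q) = -Σ P(x) log Q(x) with the natural logarithm. The function names, the `eps` clamp, and the example logits are illustrative assumptions, not values taken from the Excel Blueprint.

```python
import math

def softmax(logits):
    """Turn raw scores (logits) into a probability distribution Q."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(p, q, eps=1e-12):
    """H(P, Q) = -sum_x P(x) * log Q(x), using the natural log.

    eps is a hypothetical safeguard that keeps log() away from zero.
    Terms where P(x) == 0 contribute nothing and are skipped.
    """
    return -sum(pi * math.log(max(qi, eps)) for pi, qi in zip(p, q) if pi > 0)

# True distribution P: a one-hot label saying class 1 is correct.
p = [0.0, 1.0, 0.0]

# Two made-up sets of logits: one favors the correct class, one does not.
confident_logits = [0.5, 4.0, -1.0]
wrong_logits = [4.0, 0.5, -1.0]

low_loss = cross_entropy(p, softmax(confident_logits))
high_loss = cross_entropy(p, softmax(wrong_logits))

print(f"loss when Q favors the true class: {low_loss:.4f}")
print(f"loss when Q favors a wrong class:  {high_loss:.4f}")
```

With a one-hot P, the sum collapses to -log Q(correct class), so the loss depends only on how much probability the model places on the label that actually occurred.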

Excel Blueprint

This Excel Blueprint is available to AI by Hand Academy members. You can become a member via a paid Substack subscription.