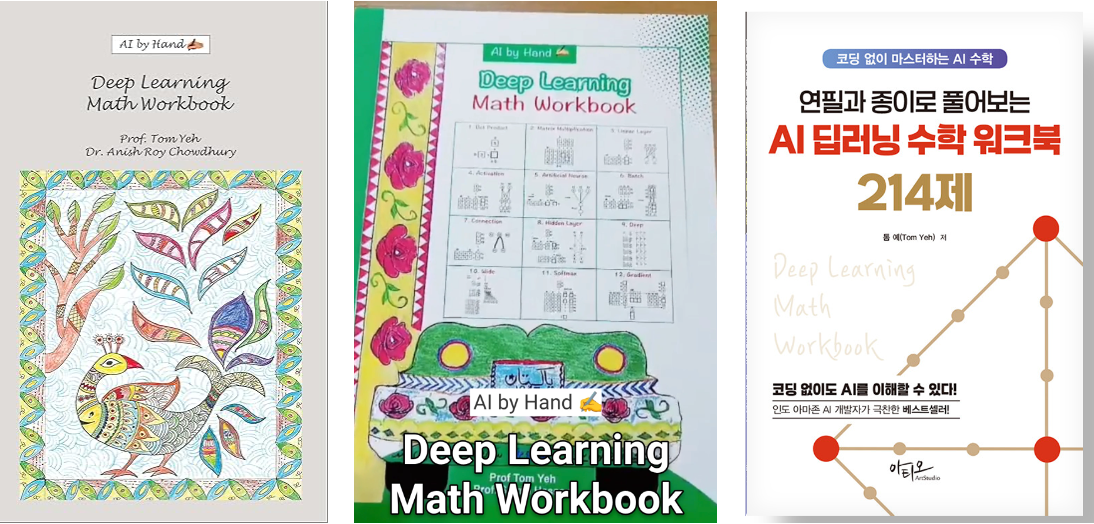

Deep Learning Math Workbook

🔥 Now Available on Amazon [link] — published through Packt

I’ve been self-publishing this Deep Learning Math Workbook since the beginning of 2025. Since then, it has sold more copies than I can handle and has been localized in three countries: India, Pakistan, and South Korea.

I’m excited to partner with Packt Publishing, who will take over the publication logistics so the workbook can reach readers in every other country through multiple distribution channels. It also means I can finally retire my routine trips to the local post office.

What inspired you to create a workbook rather than a conventional deep learning text?

I’ve seen myself and my students struggle with the huge gap between abstract symbolic math and the code that actually runs the model. Traditional texts lean heavily on equations full of Greek symbols. They’re beautiful, but for most learners they’re hard to connect to implementation.

Masking—filling in the blanks—has been one of the most powerful ideas in deep learning. And ironically, it’s been underused in how we teach deep learning math.

As an ESL learner, I mastered English through endless fill-in-the-blank exercises. That pattern forced me to engage, not skim. Deep learning math is also a language. So I thought: why not use the same method to teach the “grammar” of deep learning? That’s what this workbook tries to do.

What makes this workbook different from other deep learning resources?

It’s the opposite of a textbook. No long explanations. Instead, the workbook is built entirely on patterns, context clues, and fill-in-the-blank prompts that force you to trace, reason, and reconstruct the mechanics yourself.

And like good deep learning, it uses many training examples. You don’t learn by reading one explanation—you learn by doing ten slightly different versions of the same structure until the pattern clicks. It’s not passive learning. You can’t skim through it. You have to interact.

Why do beginners, students, and practitioners need the underlying math—not just the code?

Because without the math, everything feels like magic. For me, when things feel like magic, I feel like an imposter.

Understanding the math—even at a foundational level—changes your posture. You feel empowered. You can inspect a model, reason about its behavior, and debug with confidence.

The code is just an expression of the math. If you understand the math, the code stops being intimidating.

How did my background in HCI research influence this book?

HCI taught me that learning is an interaction problem, not a content problem. If the interaction is wrong, even the best content won’t land. That’s why the workbook is built around active engagement.

And my work on the societal impact of AI reminds me that transparency matters. Ethics isn’t just a set of principles—it requires understanding what’s inside the systems we deploy. The math is part of that transparency.

What motivated me to write this book now?

Right now, every time we learn a new AI tool, it becomes obsolete the next week when an even newer one comes out. The pace is exciting, but it also creates anxiety—people feel like they’re always behind.

Foundational math is the opposite of that. It’s evergreen. It doesn’t go out of date.

If we focus on the core principles—the mechanics of attention, gradients, optimization, representations—we stay grounded no matter what tool or framework comes along.

As AI gets better at writing its own architecture code, our role shifts toward defining the fundamentals, verifying correctness with small, understandable examples, and then letting the model scale it up.

If you understand the simple case by hand, the large case generated by the AI is no longer mysterious. The fundamentals remain relevant even as the tooling churns around us.

How does the AI by Hand methodology change the way students understand neural networks?

AI by Hand forces you to slow down and trace what each component is doing. When you literally compute a dot product, a linear layer, or a softmax on paper, your brain starts to see the structure, not just the equation or the code.

Once you understand the small version by hand, the large version is just scaling. And the ideas stick. They become intuitive instead of memorized.
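To make this concrete, here is a minimal Python sketch of the kind of small computation the AI by Hand approach asks you to trace on paper: a dot product, a linear layer built from dot products, and a softmax. The numbers and function names are illustrative assumptions, not exercises taken from the workbook.

```python
import math

def dot(a, b):
    # Multiply matching entries and add them up: the core operation of a neuron.
    return sum(x * y for x, y in zip(a, b))

def linear(W, b, x):
    # Each output is one row of W dotted with x, plus that row's bias.
    return [dot(row, x) + bias for row, bias in zip(W, b)]

def softmax(z):
    # Exponentiate (after subtracting the max for numerical stability), then normalize.
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

x = [1, 2]
W = [[1, 0],
     [0, 1],
     [1, -1]]
b = [0, 1, 0]

# By hand: [1*1 + 0*2 + 0, 0*1 + 1*2 + 1, 1*1 + (-1)*2 + 0] = [1, 3, -1]
z = linear(W, b, x)
p = softmax(z)
print(z)                          # [1, 3, -1]
print([round(v, 3) for v in p])   # [0.117, 0.867, 0.016]
```

Every number here is small enough to check with pencil and paper; the same three functions work unchanged on vectors with thousands of entries, which is the "large version is just scaling" point.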

How is the role of foundational math evolving as AI advances?

As models get larger, AI is getting better at writing its own architecture code. That actually makes foundational math more important, not less.

Our job is shifting toward defining the principles, verifying correctness with small examples, and letting the model scale it. It’s inductive reasoning: if it works cleanly in the small case you understand by hand, you can trust the scaled-up version generated by the AI.
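One way to picture this inductive check is the following minimal Python sketch. It assumes the "scaled-up" code is a batched linear layer; `batched_linear` is a hypothetical stand-in for generated code, not something from the workbook. The idea is to confirm the general code against a case small enough to work out by hand, and only then run it at a larger size.

```python
import random

def batched_linear(W, b, X):
    # Hypothetical stand-in for generated code: applies y = W x + b
    # to every input vector in the batch X, for any sizes.
    return [[sum(w * xi for w, xi in zip(row, xs)) + bias
             for row, bias in zip(W, b)]
            for xs in X]

# Small case worked out on paper:
# W = [[2, 1], [0, 3]], b = [1, 0], x = [1, 2]
# row 1: 2*1 + 1*2 + 1 = 5
# row 2: 0*1 + 3*2 + 0 = 6
W_small = [[2, 1], [0, 3]]
b_small = [1, 0]
hand_answer = [[5, 6]]
assert batched_linear(W_small, b_small, [[1, 2]]) == hand_answer

# Once the small case matches, run the same code at a larger scale.
random.seed(0)
n_in, n_out, batch = 64, 32, 16
W_big = [[random.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
b_big = [0.0] * n_out
X_big = [[random.uniform(-1, 1) for _ in range(n_in)] for _ in range(batch)]
Y_big = batched_linear(W_big, b_big, X_big)
print(len(Y_big), len(Y_big[0]))  # 16 32
```

A small hand-checked case like this catches many wiring mistakes (swapped indices, a dropped bias) before they are buried inside a large model.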

Foundational math becomes the anchor—the part humans still need to own.

Is there an electronic version or an online companion if we buy the book? Thanks!

Prof. Yeh, what do you recommend as a next learning step after finishing this workbook?