ELU (Exponential Linear Unit)

Essential AI Math Excel Blueprints

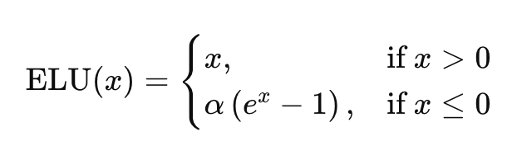

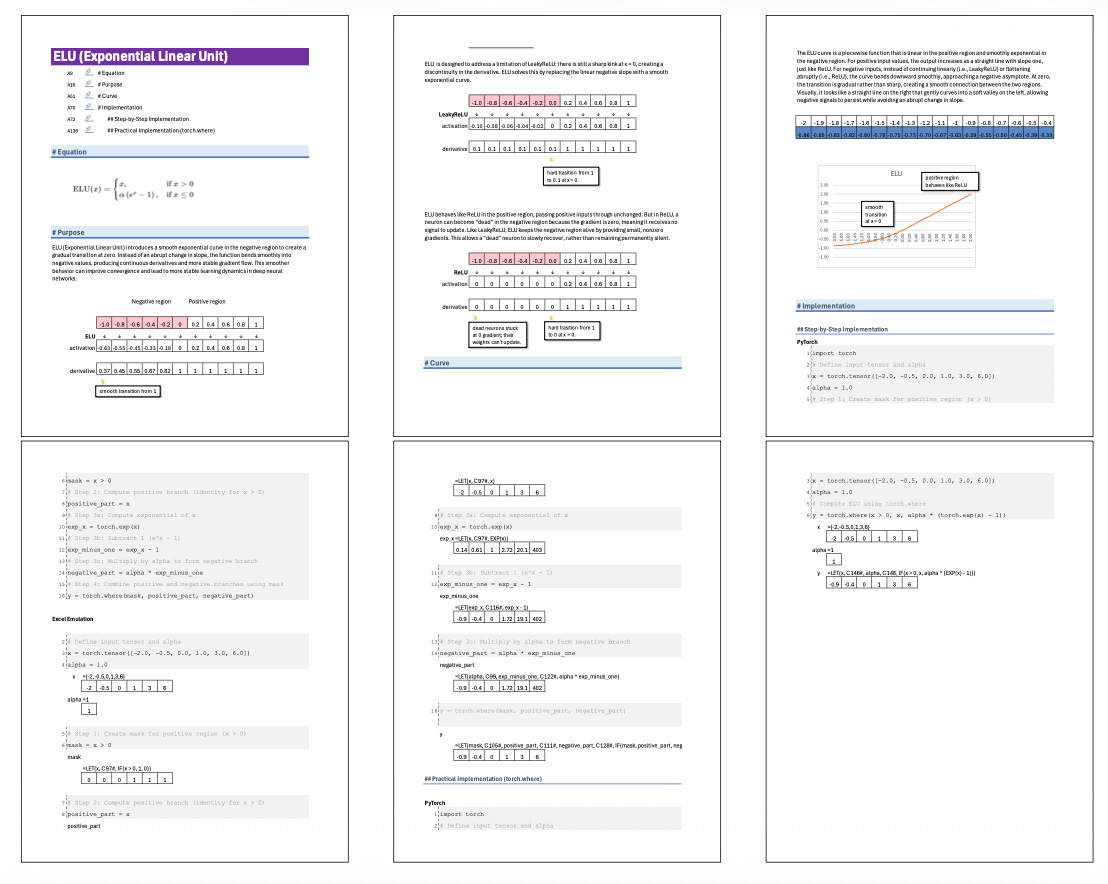

ELU (Exponential Linear Unit) uses a smooth exponential curve in the negative region to create a gradual transition at zero. Instead of changing slope abruptly, the function bends smoothly into negative values. This gives it a continuous first derivative when its scale parameter α equals 1, the common default, and therefore smoother gradient flow. In deep neural networks, this smoother behavior can improve convergence and stabilize learning dynamics.
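The smoothness claim is easier to check with the standard ELU definition and its derivative written out. Here α > 0 is a scale parameter, commonly set to 1. The text does not state which α the figures use, so treat α = 1 as an assumption.

$$
\mathrm{ELU}(x) = \begin{cases} x, & x > 0 \\ \alpha\,(e^{x} - 1), & x \le 0 \end{cases}
\qquad
\mathrm{ELU}'(x) = \begin{cases} 1, & x > 0 \\ \alpha\, e^{x}, & x \le 0 \end{cases}
$$

At x = 0 the left-hand slope is α e^0 = α. It matches the right-hand slope of 1 only when α = 1. As x → −∞, the output saturates at −α.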

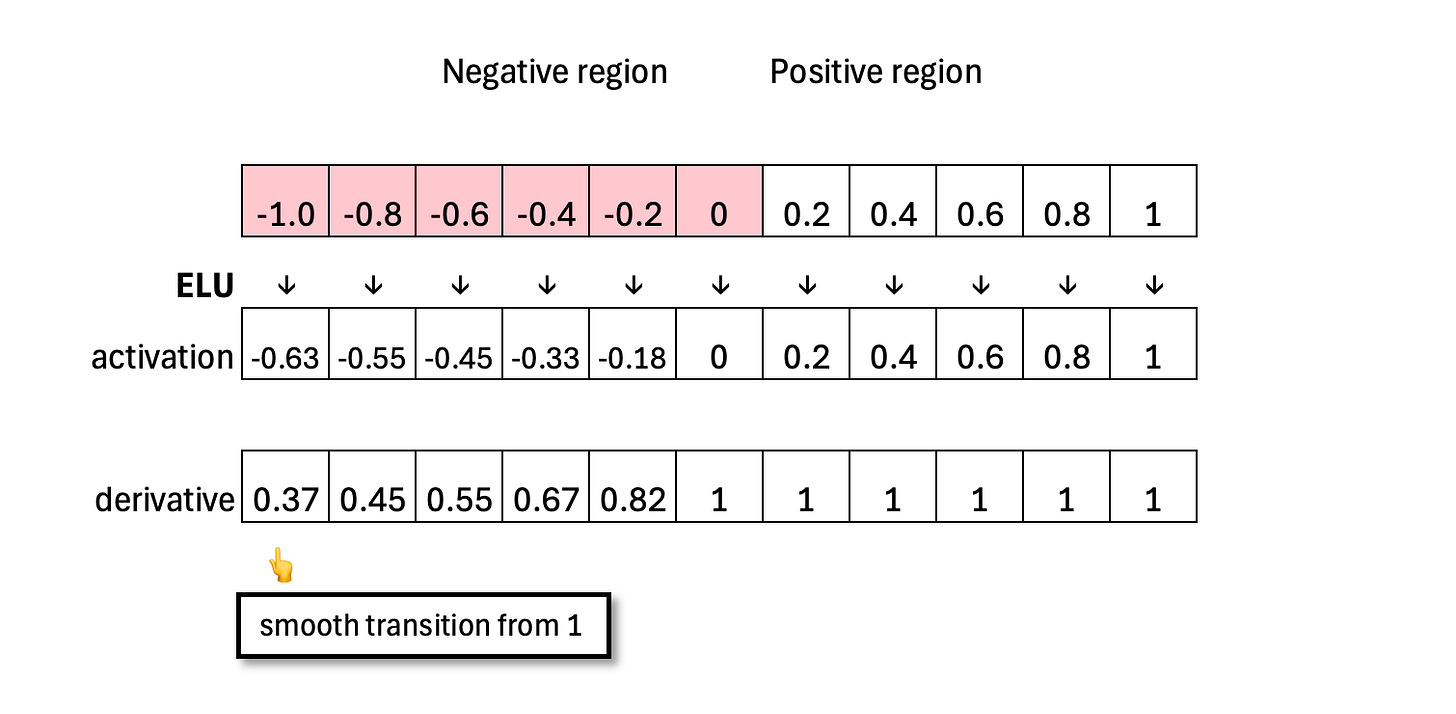

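ELU addresses a limitation of LeakyReLU: LeakyReLU still has a sharp kink at x = 0. There, its slope jumps abruptly from the small negative-side value to 1, so its derivative is discontinuous. ELU removes this kink by replacing the linear negative slope with a smooth exponential curve. With its scale parameter set to the usual value of 1, the slopes on both sides of zero match.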
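The kink can be seen numerically with a small sketch in plain Python that estimates the one-sided slopes at x = 0 using finite differences. The sketch makes two assumptions: a LeakyReLU negative slope of 0.01 and an ELU α of 1.0. Both are common defaults, not values taken from the figures. `one_sided_slopes` is a hypothetical helper written only for this illustration.

```python
import math


def leaky_relu(x: float, slope: float = 0.01) -> float:
    # Assumed negative slope of 0.01 (a common default).
    return x if x > 0 else slope * x


def elu(x: float, alpha: float = 1.0) -> float:
    # Assumed alpha of 1.0, the value at which ELU's slope is continuous at 0.
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def one_sided_slopes(f, h: float = 1e-6) -> tuple:
    """Hypothetical helper: finite-difference slopes just left and right of x = 0."""
    left = (f(0.0) - f(-h)) / h
    right = (f(h) - f(0.0)) / h
    return left, right


def rounded(pair, digits: int = 4) -> tuple:
    # Round for readable printing; the raw values differ slightly due to h.
    return tuple(round(s, digits) for s in pair)


print(rounded(one_sided_slopes(leaky_relu)))  # (0.01, 1.0): slopes disagree, a kink
print(rounded(one_sided_slopes(elu)))         # (1.0, 1.0): slopes agree, no kink
```

For LeakyReLU, the left and right slopes differ, which is the discontinuity in the derivative. For ELU with α = 1, they coincide.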

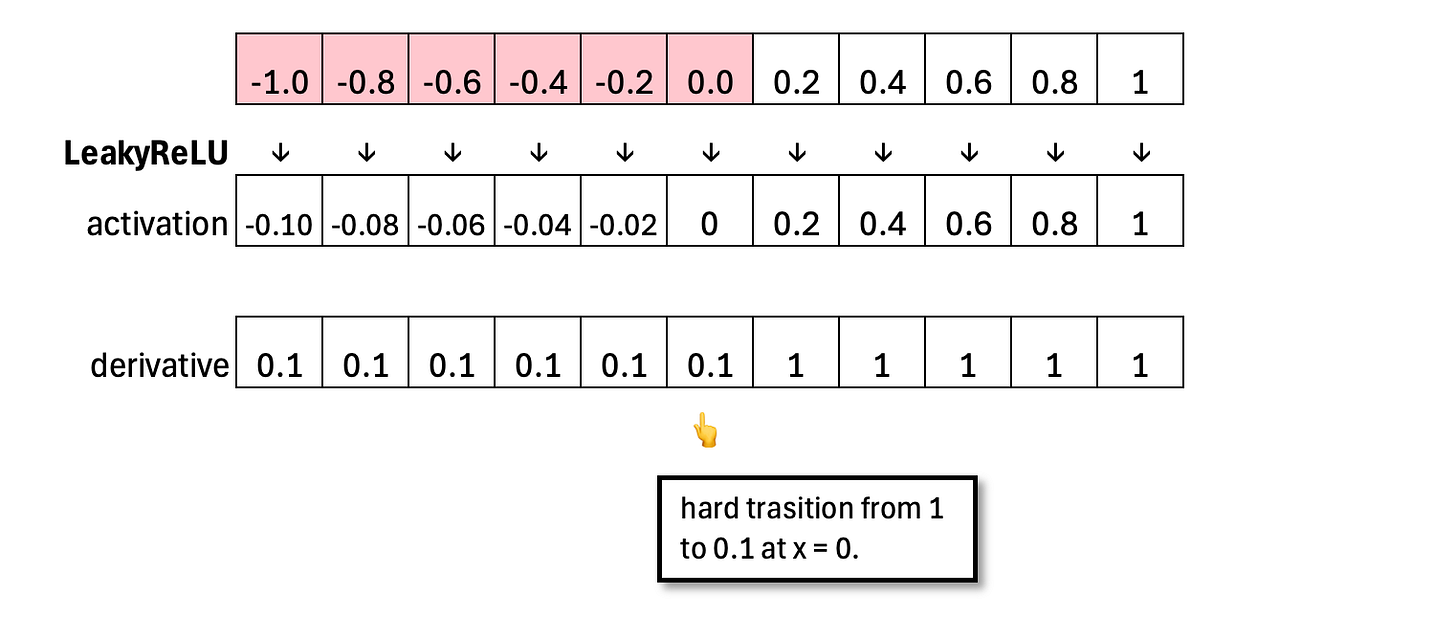

ELU behaves like ReLU in the positive region, passing positive inputs through unchanged. In ReLU, however, a neuron can become “dead” when its inputs stay in the negative region. The gradient there is zero, so the neuron receives no signal to update. Like LeakyReLU, ELU keeps the negative region alive by providing small, nonzero gradients. This lets a “dead” neuron slowly recover instead of remaining permanently silent.
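The dead-neuron argument can be illustrated with a toy, hypothetical setup. A single neuron outputs act(b) for a lone bias b. The bias starts stuck at b = −3 and is trained by gradient descent toward a target of 1 with squared-error loss. The function `train_bias`, the learning rate, the step count, and α = 1.0 are all illustrative assumptions, not part of the blueprint.

```python
import math


def relu(x: float) -> float:
    return max(x, 0.0)


def relu_grad(x: float) -> float:
    # Zero gradient for every non-positive input.
    return 1.0 if x > 0 else 0.0


def elu(x: float, alpha: float = 1.0) -> float:
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def elu_grad(x: float, alpha: float = 1.0) -> float:
    # Small but nonzero gradient alpha * e^x for non-positive inputs.
    return 1.0 if x > 0 else alpha * math.exp(x)


def train_bias(act, act_grad, b=-3.0, target=1.0, lr=0.1, steps=100):
    """Hypothetical toy: gradient descent on a lone bias b with loss (act(b) - target)**2."""
    for _ in range(steps):
        # Chain rule: dL/db = dL/da * da/db, with a = act(b).
        dloss_db = 2.0 * (act(b) - target) * act_grad(b)
        b -= lr * dloss_db
    return b


print(train_bias(relu, relu_grad))  # -3.0: the gradient is zero, so b never moves
print(train_bias(elu, elu_grad))    # larger than -3.0: b drifts upward
```

Under ReLU, the gradient at b = −3 is exactly zero, so the bias never changes. Under ELU, the gradient α e^b is small but nonzero, so the bias drifts upward. Because α e^x shrinks toward zero for very negative inputs, recovery is slow for deeply negative pre-activations. LeakyReLU's negative slope, by contrast, stays constant.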

Excel Blueprint

This Excel Blueprint is available to AI by Hand Academy members. You can become a member via a paid Substack subscription.