GELU (Gaussian Error Linear Unit)

Essential AI Math Excel Blueprints

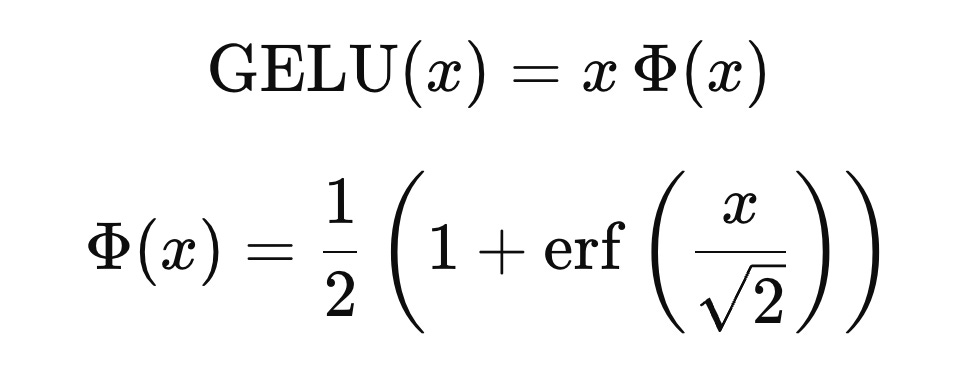

The GELU (Gaussian Error Linear Unit) activation function is closely related to Swish (SiLU): both multiply the linear signal x by a smooth, input‑dependent gate. The gate suppresses negative values toward zero while allowing positive values to pass through, but in a soft, probabilistic manner. Unlike the hard cutoff of ReLU, this gentle attenuation of negatives preserves useful gradient information, which can improve learning.
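To make the "gate times x" idea concrete, here is a minimal Python sketch using only the standard library. The function names `gelu`, `swish`, and `relu` are illustrative choices rather than anything from the Excel Blueprint, and the GELU shown is the exact form x·Φ(x), not the tanh approximation some libraries use.

```python
import math


def gelu(x: float) -> float:
    """Exact GELU: x times the standard normal CDF Phi(x)."""
    gate = 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))  # Phi(x)
    return x * gate


def swish(x: float) -> float:
    """Swish / SiLU: x times the logistic sigmoid sigma(x)."""
    gate = 1.0 / (1.0 + math.exp(-x))  # sigma(x)
    return x * gate


def relu(x: float) -> float:
    """ReLU for comparison: a hard cutoff at zero."""
    return max(0.0, x)


if __name__ == "__main__":
    # Compare the three activations on a few sample inputs.
    for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
        print(f"x={x:+.1f}  relu={relu(x):.4f}  "
              f"gelu={gelu(x):+.4f}  swish={swish(x):+.4f}")
```

For negative inputs, ReLU returns exactly zero, while GELU and Swish return small negative values, which is the soft attenuation described above.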

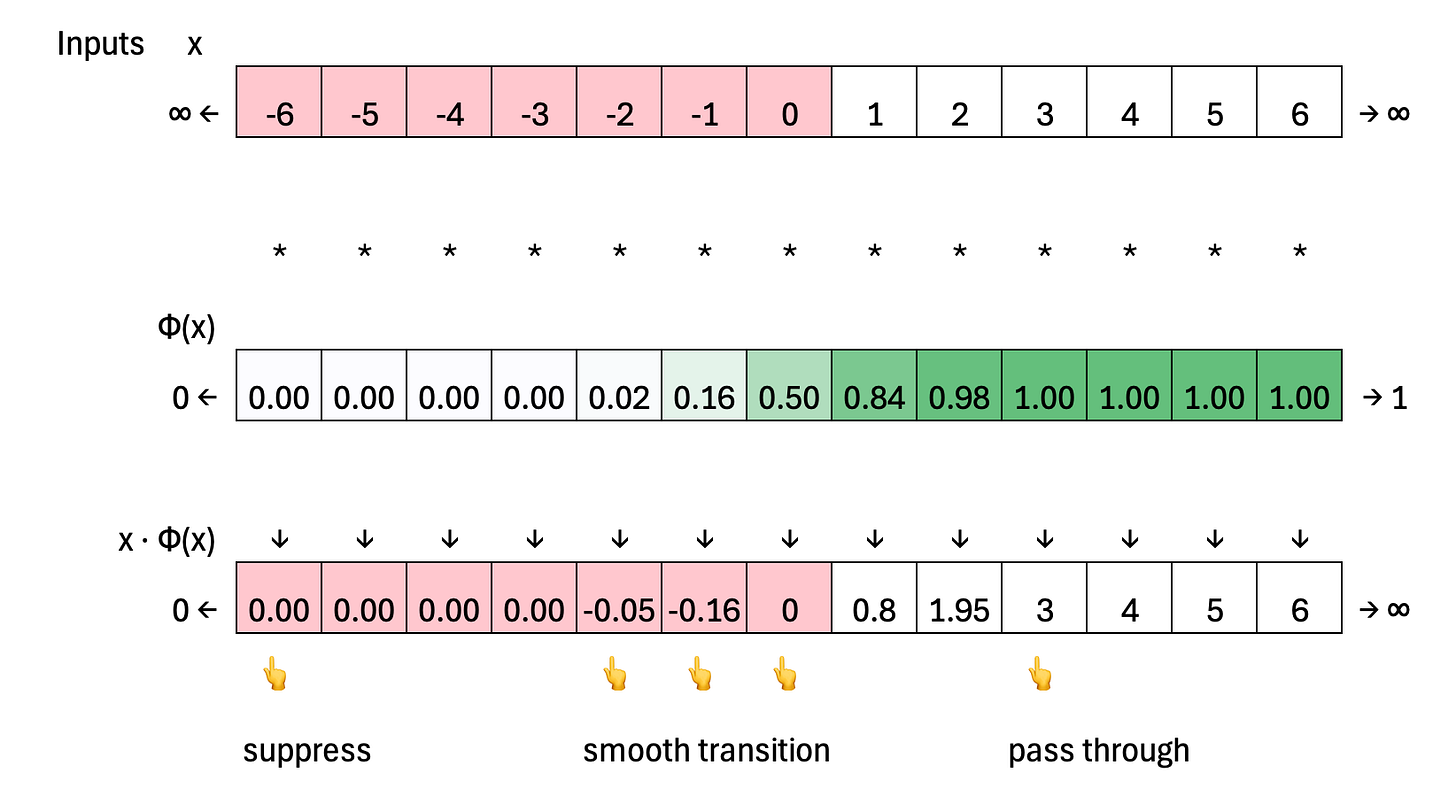

The core difference between GELU and Swish lies in how their “gates” transition from closed to open. GELU uses the standard normal cumulative distribution function Φ(x) as its gate, which is computed from the Gaussian error function as Φ(x) = ½(1 + erf(x/√2)). This gate moves from nearly closed to nearly open in a narrower band, roughly between -3 and 3. In contrast, the Swish gate uses the sigmoid function σ(x), which transitions over a wider band, roughly from -6 to 6.
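The band widths can be checked numerically by evaluating each gate on its own, without multiplying by x. The sketch below is a rough illustration using Python's standard library; the helper names `gelu_gate` and `swish_gate` are hypothetical.

```python
import math


def gelu_gate(x: float) -> float:
    """GELU gate: standard normal CDF Phi(x), written with erf."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def swish_gate(x: float) -> float:
    """Swish gate: logistic sigmoid sigma(x)."""
    return 1.0 / (1.0 + math.exp(-x))


if __name__ == "__main__":
    # Evaluate both gates at the edges of the two bands and at the center.
    for x in (-6.0, -3.0, 0.0, 3.0, 6.0):
        print(f"x={x:+.1f}  Phi(x)={gelu_gate(x):.6f}  "
              f"sigma(x)={swish_gate(x):.6f}")
```

At x = ±3 the GELU gate is already within about 0.0013 of fully closed or fully open, whereas the sigmoid is still about 0.047 away; the sigmoid only reaches a comparable level (about 0.0025) near x = ±6.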

Excel Blueprint

This Excel Blueprint is available to AI by Hand Academy members. You can become a member via a paid Substack subscription.