GLU (Gated Linear Unit)

Essential AI Math Excel Blueprints

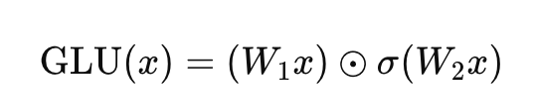

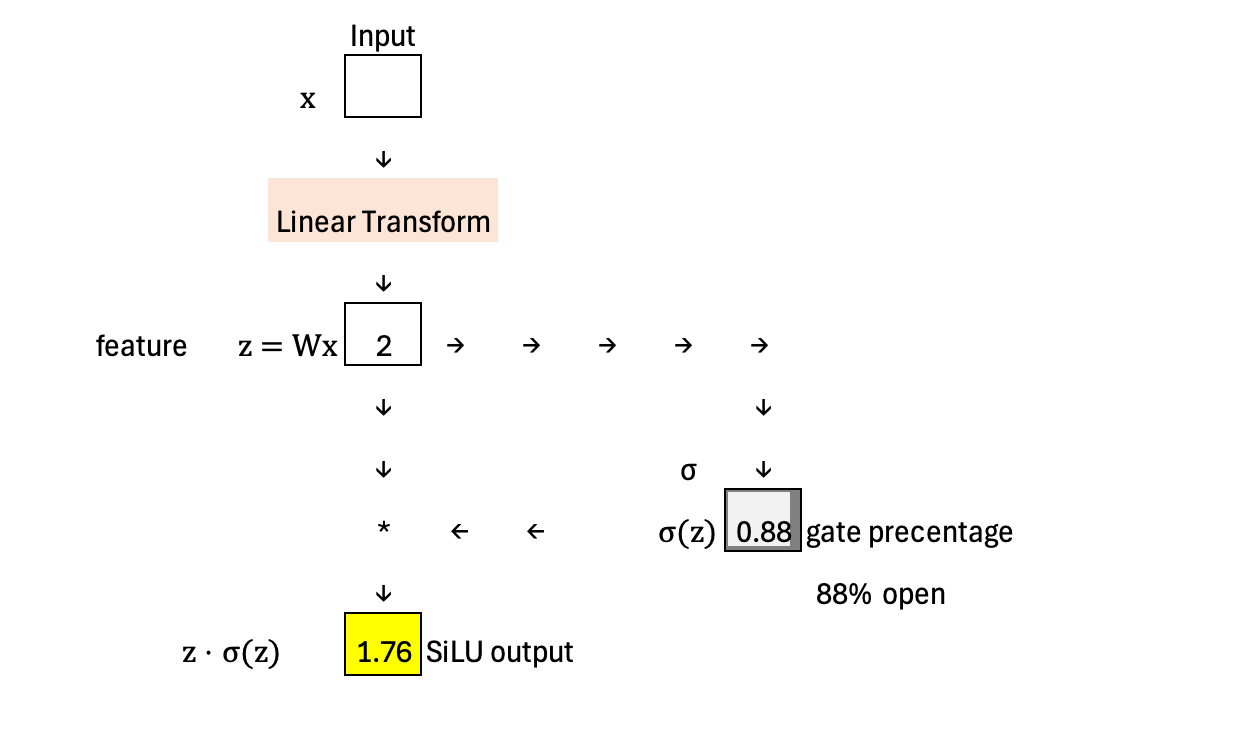

Gated Linear Units (GLU) marked a breakthrough in activation design by introducing a truly dynamic gating mechanism, meaning the gate is predicted from the input itself rather than defined by a fixed function. GLU projects the input through two parallel linear transformations: one produces a feature value, and the other produces a gate logit. The gate logit passes through a sigmoid to produce a value between 0 and 1, which determines how “open” the gate is, that is, what fraction of the feature value is allowed to pass through.
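The computation described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than a reference implementation: the names `W_feat` and `W_gate`, the tiny 2×2 weights, and the omission of bias terms are all assumptions made here for readability.

```python
import numpy as np

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def glu(x, W_feat, W_gate):
    """GLU sketch: two parallel linear projections of the same input.

    W_feat and W_gate are hypothetical names for the two weight matrices;
    biases are left out to keep the example small.
    """
    feature = W_feat @ x          # feature value
    gate_logit = W_gate @ x       # gate logit, from a separate projection
    gate = sigmoid(gate_logit)    # how "open" the gate is, between 0 and 1
    return gate * feature         # fraction of the feature that passes through

# Toy numbers chosen for easy mental arithmetic (assumed, not from the post).
x = np.array([1.0, 2.0])
W_feat = np.array([[1.0, 0.0],
                   [0.0, 1.0]])   # feature = x
W_gate = np.array([[0.0, 0.0],
                   [1.0, -1.0]])  # gate logits = [0, -1]

out = glu(x, W_feat, W_gate)
print(out)
```

With these toy weights the first gate logit is 0, so the gate is exactly half open and the first output is half of the first feature value. The second gate logit is negative, so less than half of the second feature passes through.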

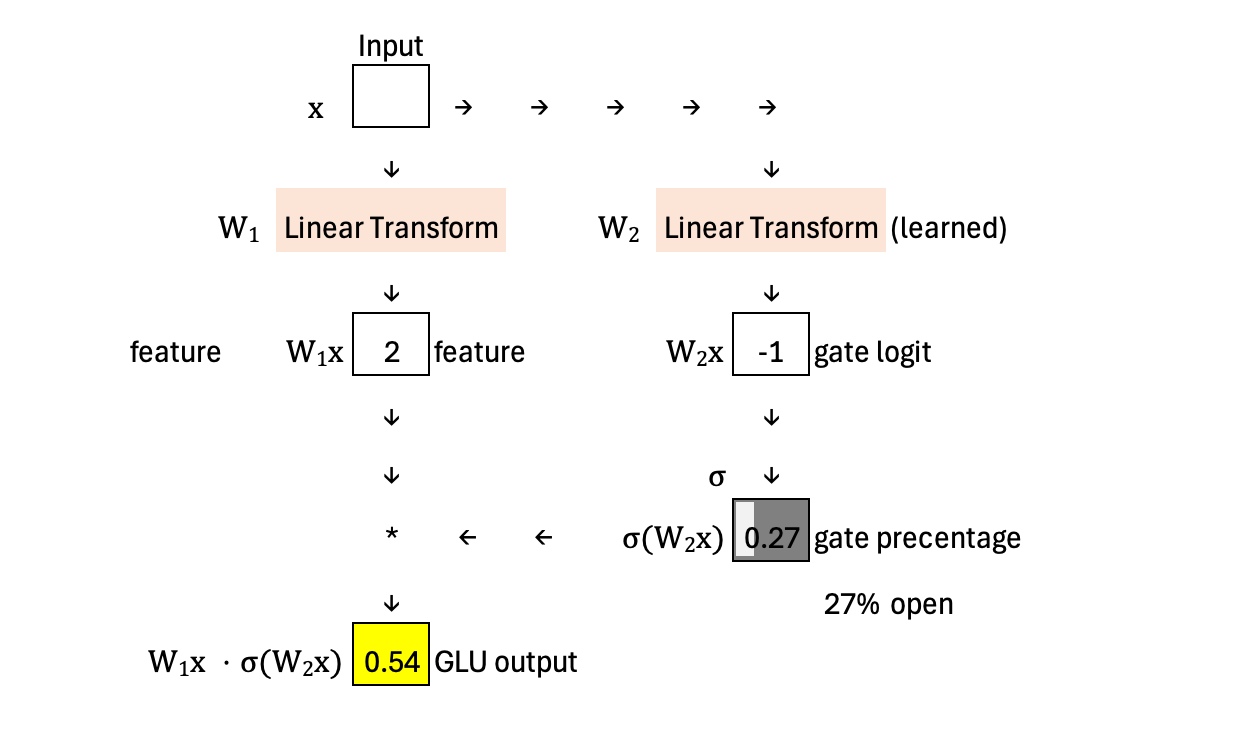

Below is a visualization of the SiLU computation for comparison. Notice the key difference: in SiLU, the sigmoid gate depends directly on the same projected feature value (z = Wx), so the feature and the gate come from the same linear transformation. In contrast, GLU-style gating predicts the gate using a separate linear transformation, so the gate is not tied to the feature itself.
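To make the contrast concrete, here is a small side-by-side sketch in NumPy. The function names `silu_layer` and `glu_layer`, the identity weights, and the choice of `W_gate` are illustrative assumptions, picked so that both layers see the same feature values and differ only in where the gate comes from.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def silu_layer(x, W):
    """SiLU: feature and gate share one projection, z = Wx."""
    z = W @ x
    return z * sigmoid(z)         # the gate is sigmoid of the feature itself

def glu_layer(x, W_feat, W_gate):
    """GLU-style gating: the gate comes from its own projection."""
    feature = W_feat @ x
    gate = sigmoid(W_gate @ x)    # the gate is not tied to the feature
    return feature * gate

x = np.array([1.0, 2.0])
W = np.eye(2)                     # feature = x in both layers (assumed)
W_gate = -np.eye(2)               # hypothetical gate weights: logits = -x

silu_out = silu_layer(x, W)
glu_out = glu_layer(x, W, W_gate)

print("SiLU:", silu_out)
print("GLU: ", glu_out)
```

In the SiLU layer a larger positive feature always opens its own gate wider. In the GLU layer the separate gate projection is free to do the opposite, as it does here: the larger feature is the one that gets suppressed more.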

Excel Blueprint

This Excel Blueprint is available to AI by Hand Academy members. You can become a member via a paid Substack subscription.