India Edition, ResNet, Fundamentals vs Tools

What's New? (5/9)

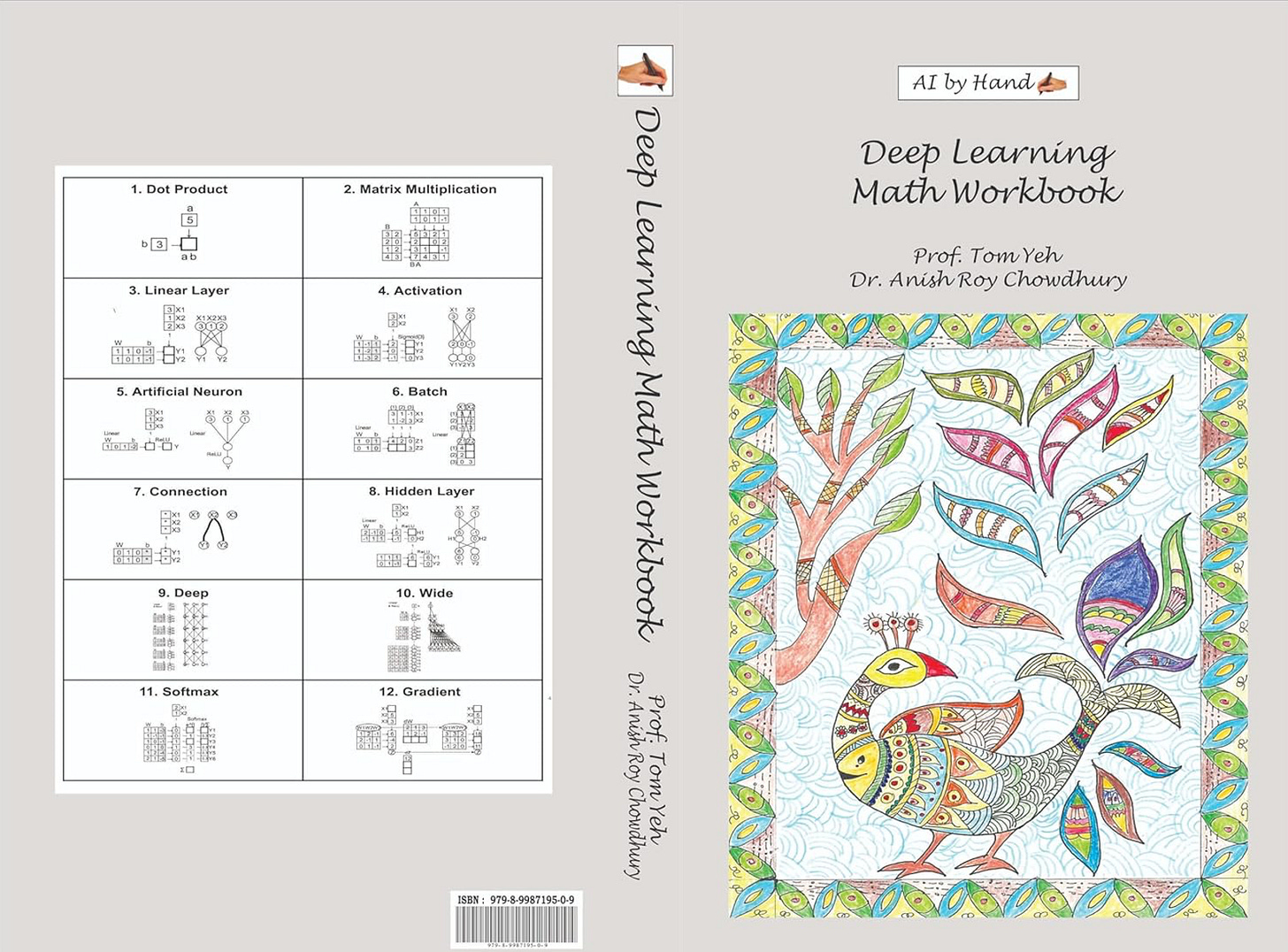

Deep Learning Math Workbook India Edition

Three years ago, I made a controversial decision in my AI course: I ditched all the Jupyter notebooks for whiteboard writing and drawing by hand. ✍️ Fast forward to today: I have published a Deep Learning Math Workbook in India.

The person in this photo is Anubhav Tiwari, who runs Bookline, a rare-book store in Kolkata that his father started more than 30 years ago.

His dad, Brijesh Tiwari, once said in an interview: "For me to bring a book here, it has to amaze me, make me curious." I feel deeply honored to collaborate with them on this "rare" book.

Let's go back three years, to my university.

I remember some students initially pushed back. "Where's the coding?" they complained in my course evaluation.

But my Indian international students? They excelled. They brought their own paper notebooks and followed my whiteboard drawings step by step, by hand. That's how they had learned in India: paper first, computers later.

No fancy hardware is required, just a pencil. Even in a rural school without a modern computer lab, people can still learn AI. That's the beauty of learning the fundamentals.

Despite the pushback, positive responses from some of my students at the time encouraged me to continue down this controversial path of teaching AI by hand. ✍️

Since then, more and more students have come to appreciate my unorthodox approach.

Recently, several former students reached out to tell me how the foundational math concepts we worked through by hand became an asset and a competitive advantage on the AI/ML teams at their companies. Why? Because while APIs change, libraries get deprecated, and SOTA models come and go, the math fundamentals stay the same and never expire.

In three years, no one will be talking about Llama 4 or GPT-4.5. But I expect people will still be reading and writing in my Deep Learning Math Workbook to learn and practice dot products, matrix multiplication, linear layers, softmax, gradients, and more.
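For readers who want to check their pencil work, here is a minimal sketch of a few of those building blocks in plain Python, with no libraries, so every step mirrors what you would write by hand. The function names and the small matrices are made up for illustration; they are not taken from the workbook.

```python
import math

def dot(a, b):
    # Dot product: multiply matching entries, then add them up
    return sum(x * y for x, y in zip(a, b))

def matmul(A, B):
    # Each output entry is the dot product of a row of A with a column of B
    cols = list(zip(*B))
    return [[dot(row, col) for col in cols] for row in A]

def linear(W, b, x):
    # Linear layer: y = W x + b, one dot product per output neuron
    return [dot(row, x) + bi for row, bi in zip(W, b)]

def softmax(z):
    # Exponentiate each score, then normalize so the outputs sum to 1
    exps = [math.exp(v) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical numbers, small enough to work out on paper
W = [[1, 0, -1],
     [2, 1, 0]]
b = [1, -2]
x = [3, 1, 2]

print(dot([1, 2, 3], [4, 5, 6]))                   # 32
print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
y = linear(W, b, x)
print(y)                                           # [2, 5]
print([round(p, 4) for p in softmax(y)])           # [0.0474, 0.9526]
```

Working the linear layer by hand: the first row gives 3 + 0 - 2 + 1 = 2 and the second gives 6 + 1 + 0 - 2 = 5, which is exactly what the code prints.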

Finally, I want to thank my dear friend Dr. Anish Roychowdhury for collaborating on this book and turning it into reality!

Check out the book on Amazon.in:

ResNet

What’s the most important deep learning paper most people have never heard of? It’s 𝘙𝘦𝘴𝘕𝘦𝘵: 𝘋𝘦𝘦𝘱 𝘙𝘦𝘴𝘪𝘥𝘶𝘢𝘭 𝘓𝘦𝘢𝘳𝘯𝘪𝘯𝘨 𝘧𝘰𝘳 𝘐𝘮𝘢𝘨𝘦 𝘙𝘦𝘤𝘰𝘨𝘯𝘪𝘵𝘪𝘰𝘯, the most cited computer science paper of all time, by Kaiming He and colleagues.

ResNet solved a critical problem in deep learning: deeper neural networks were actually performing worse than shallower ones.

The breakthrough?

Skip connections

It is a simple but brilliant trick: a skip connection lets the input bypass a stack of layers and be added directly to their output. It’s the line in architecture diagrams that jumps ahead and ends in a little "+" sign.

🧑🏫 In my lecture, I drew skip connections, explained the underlying logic, and calculated the addition by hand. ✍️
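To make that addition concrete, here is a minimal sketch of one residual block in plain Python. It assumes the two-layer form y = ReLU(F(x) + x) with F(x) = W2 · ReLU(W1 x), biases omitted; real ResNet blocks use convolutions and batch normalization, so plain matrices are a simplification here, and the weights and input are hypothetical numbers chosen to be easy to check by hand.

```python
def relu(v):
    # Elementwise ReLU: negative entries become 0
    return [max(0, t) for t in v]

def matvec(W, x):
    # Matrix-vector product: one dot product per row of W
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def residual_block(W1, W2, x):
    # F(x) = W2 * ReLU(W1 * x): the path that the skip connection jumps over
    fx = matvec(W2, relu(matvec(W1, x)))
    # The "+" at the end of the skip connection: add the untouched input back in,
    # then apply the final ReLU after the addition
    return relu([f + xi for f, xi in zip(fx, x)])

x = [2, 1]
W1 = [[1, -1], [0, 1]]
W2 = [[1, 0], [-1, 1]]
print(residual_block(W1, W2, x))  # F(x) = [1, 0], so the output is [3, 1]

# With all-zero weights F(x) = [0, 0], and the block simply passes x through
zeros = [[0, 0], [0, 0]]
print(residual_block(zeros, zeros, x))  # [2, 1]
```

The second call shows the intuition behind the trick: if extra layers are not useful, a residual block only has to push F(x) toward zero to behave like the identity, so stacking more blocks need not make the network worse.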

Skip connections also help gradients flow more easily during backpropagation, making very deep networks trainable without their gradients vanishing or exploding.

🧑🏫 In my lecture, I used a highlighter to trace the gradient flow with and without skip connections and compared the two, by hand. ✍️
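A rough way to see why the extra path matters is a toy scalar model of backpropagation. This is our own simplification, not the lecture's material: each layer is reduced to a single local derivative, and the chain rule multiplies them from the output back to the input. For a block y = F(x) + x, the derivative is F'(x) + 1, so the "+1" contributed by the skip connection keeps a direct path open. The per-layer value 0.01 and the depth of 50 are hypothetical.

```python
def backprop_gain(local_grads, skip):
    # Chain rule through a stack of scalar layers, from output back to input.
    # Plain layer y = F(x): contributes F'(x).
    # Residual block y = F(x) + x: contributes F'(x) + 1.
    g = 1.0
    for d in local_grads:
        g *= (d + 1.0) if skip else d
    return g

depth = 50
local = [0.01] * depth  # hypothetical: each layer's own derivative is small

print(f"plain:    {backprop_gain(local, skip=False):.3e}")
print(f"residual: {backprop_gain(local, skip=True):.3f}")
```

With these numbers the plain stack's gradient shrinks to about 1e-100, while the residual stack's stays around 1.6. Real networks have matrix Jacobians, weights, and normalization layers, so this only illustrates the intuition behind the "+1"; it is not a proof.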

Before ResNet, deeper meant worse accuracy. After ResNet, deeper meant better accuracy.

Before ResNet, 20 layers was considered deep. After ResNet? 100, 1000, even more became trainable.

ResNet kicked off the modern era of deep learning.

From DALL·E to Stable Diffusion, from GPT to Llama to DeepSeek: they all rely on skip connections.

The ResNet paper is one of my favorite papers. This lecture is my best effort to share why it matters. I hope you find it just as fascinating and inspiring as I do!

Fundamentals vs Tools

With the threat of layoffs everywhere, my students asked me: "How do we make ourselves invaluable on an AI/ML team?" 🤔 I told them three things:

Hint: It's not coding. It's not knowing the latest API frameworks either. Here's the real answer that will keep you employed and even get you a promotion…

𝟭. 𝗠𝗮𝘁𝗵 ✍️

Not just being able to string together model architectures with libraries, but developing true math intuition. When the models break down, when the libraries fail us, it's this intuition that helps us debug at a fine-grained level. I've seen teams spend weeks stuck on problems that a little math insight could have solved in hours.
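One classic example of math intuition saving a debugging session (our choice of illustration, not one from the original lectures) is softmax on large scores. Computed naively, the exponentials overflow. Knowing that softmax(z) equals softmax(z - c) for any constant c, because the factor e^(-c) cancels in the numerator and denominator, gives a one-line fix: subtract the maximum score first. The function names and logit values below are hypothetical.

```python
import math

def softmax_naive(z):
    # Direct formula: exp(z_i) / sum_j exp(z_j); overflows for large z
    exps = [math.exp(v) for v in z]
    s = sum(exps)
    return [e / s for e in exps]

def softmax_stable(z):
    # Subtracting the max leaves the result unchanged (e^{-m} cancels top
    # and bottom) but keeps every exponent <= 0, so exp() cannot overflow
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]

logits = [1000.0, 1001.0, 1002.0]  # hypothetical large scores
try:
    softmax_naive(logits)
except OverflowError as err:
    print("naive softmax failed:", err)
print([round(p, 4) for p in softmax_stable(logits)])
```

In plain Python the naive version raises `OverflowError`; in array libraries the same bug typically shows up silently as `inf` or `nan` values, which is exactly the kind of failure that is hard to spot without the underlying math.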

𝟮. 𝗪𝗵𝗶𝘁𝗲𝗯𝗼𝗮𝗿𝗱 🟰

The best AI systems I've ever seen started as simple diagrams on a whiteboard. It's how you ensure that every single person on the team understands the architecture, the data flow, the edge cases. It's how I train my own students.

𝟯. 𝗣𝗲𝗼𝗽𝗹𝗲 🤝

Getting AI projects right is as much about understanding people as it is about understanding machines. The best AI/ML professionals are masters of both.

Tools change.

Fundamentals don't.

Surprised I didn't mention PyTorch or the latest LLM APIs? That's exactly the point 😉
