Leaky ReLU

Essential AI Math Excel Blueprints

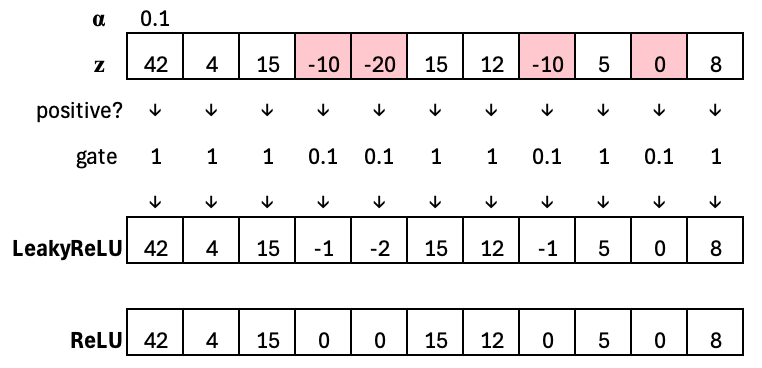

\(\mathrm{LeakyReLU}(z) =
\begin{cases}
z, & z > 0 \\
\alpha z, & z \le 0
\end{cases}
\)
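
As a short worked step that is not part of the original post, differentiating each branch of the definition gives the slope used during backpropagation. The function is not differentiable at \(z = 0\) when \(\alpha \ne 1\); assigning \(\alpha\) there simply follows the \(z \le 0\) branch and is an assumed convention.

\(\frac{d}{dz}\mathrm{LeakyReLU}(z) =
\begin{cases}
1, & z > 0 \\
\alpha, & z \le 0
\end{cases}
\)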

Leaky ReLU is designed to address a key limitation of ReLU: a neuron can become permanently inactive when its input is negative for every example, because ReLU's gradient is zero there and the neuron's weights stop updating. Instead of outputting exactly zero for all negative values, Leaky ReLU applies a small, nonzero slope \(\alpha\) in the negative region (commonly a small constant such as 0.01). This keeps gradients flowing even when activations are negative, reducing the risk of “dead” neurons while preserving the simplicity and efficiency that make ReLU effective in deep networks.
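
To make the behavior concrete, here is a minimal Python sketch of the formula and its slope, set next to the slope of plain ReLU. The function names, the default `alpha = 0.01`, and the sample inputs are illustrative assumptions, not values taken from the Excel Blueprint.

```python
def leaky_relu(z: float, alpha: float = 0.01) -> float:
    """Return z for positive inputs and alpha * z otherwise."""
    return z if z > 0 else alpha * z


def leaky_relu_grad(z: float, alpha: float = 0.01) -> float:
    """Slope of Leaky ReLU at z (the alpha branch is used at z == 0 by convention)."""
    return 1.0 if z > 0 else alpha


def relu_grad(z: float) -> float:
    """Slope of plain ReLU at z, shown only for comparison."""
    return 1.0 if z > 0 else 0.0


# Hypothetical sample inputs covering both branches and the boundary.
inputs = [-3.0, -0.5, 0.0, 2.0]

outputs = [leaky_relu(z) for z in inputs]
leaky_grads = [leaky_relu_grad(z) for z in inputs]
relu_grads = [relu_grad(z) for z in inputs]

for z, y, g_leaky, g_relu in zip(inputs, outputs, leaky_grads, relu_grads):
    print(f"z={z:5.1f}  LeakyReLU={y:7.3f}  leaky slope={g_leaky:.2f}  ReLU slope={g_relu:.2f}")
```

For the negative inputs, the ReLU slope is zero while the Leaky ReLU slope stays at `alpha`, which is the small gradient that lets a neuron keep learning.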

Excel Blueprint

This Excel Blueprint is available to AI by Hand Academy members. You can become a member via a paid Substack subscription.