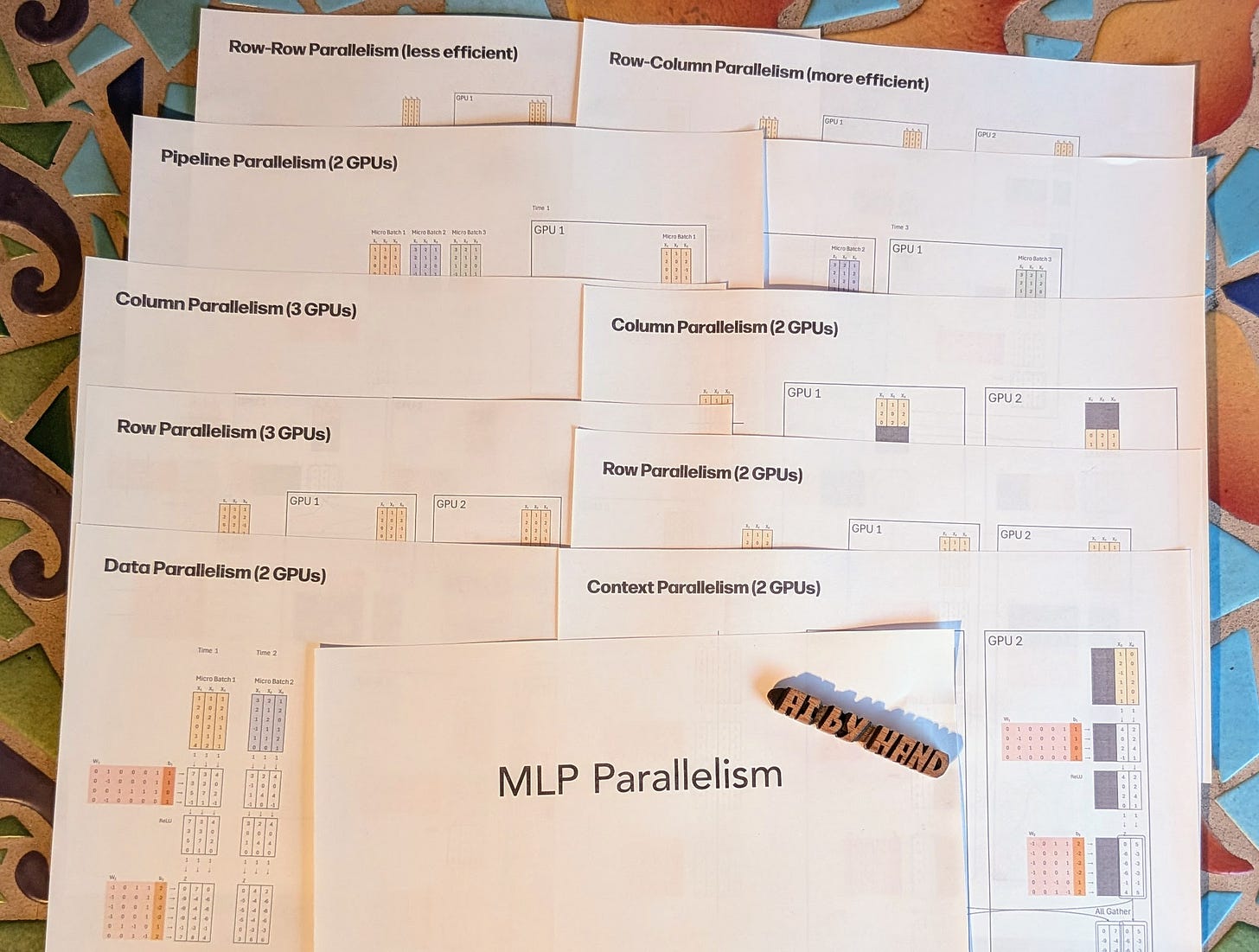

10. MLP Parallelism: Data, Context, Row, Column, Pipeline

Frontier AI Drawings by Hand ✍️

I was helping an AI company review their code for parallelism in the feedforward layer, which is a multilayer perceptron (MLP) with two linear layers. I spotted a problem with sharding. This issue does not cause incorrect results, but it is inefficient because of significant extra communication that can be completely avoided if the sharding is done properly.

The problem was that the weight matrices of the two linear layers were split in the same way. Ideally, one should be split by rows and the other should be split by columns.

Confusing? You are not alone. I felt the same way when I first studied this topic, until I implemented all types of parallelism in Excel by hand ✍️ to really understand the process.

Why did this mistake happen, even at a reputable AI company? Their intention was good. They wanted to roll their own fine-tuning + inference stack, rather than blindly downloading and running other people’s code from GitHub. But parallelism algorithms are very hard to understand. I was glad I could help them work out the math and achieve an immediate win.

Fixing this problem led to 30–50% faster performance in the feedforward layer.

More than the performance gain, the bigger win was the clarity their engineers gained in how parallelism really works. 💡

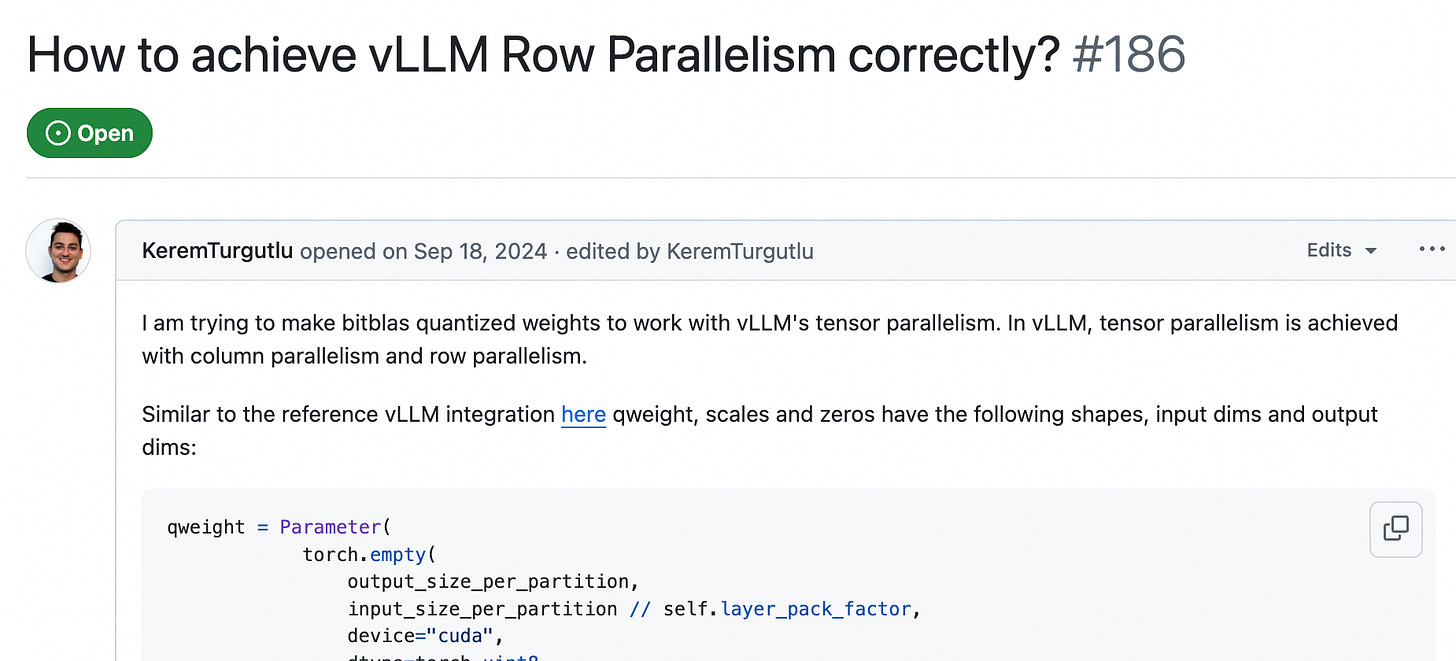

Since I cannot share more details about this case under NDA, here is a public example to illustrate how common this mistake is. It comes from an open GitHub issue about implementing parallelism correctly in vLLM, which has remained unresolved for almost a year:

Just to push this pain point further, llama.cpp once had a feature request that essentially aimed to fix this same problem to improve performance.

Source: https://github.com/ggml-org/llama.cpp/issues/9086

Here’s a quote from the feature request explaining that the expected result is that only one ‘all-reduce’ operation is needed.

Thus, the entire FFN module only requires one 'all reduce', meaning that with properly tailored split implementation, even with multiple matmul operations, only one 'all reduce' operation may be needed.

Drawings

For this week’s issue, I created six new drawings to provide a comprehensive overview of the major types of parallelism for MLPs (self-attention will come later):

Data Parallelism

Context Parallelism

Row Parallelism

Column Parallelism

Pipeline Parallelism

Row-Row vs. Row-Column Parallelism

You can see how sharding is applied along different dimensions. You can trace the computation to confirm it produces the same result as the non-sharded version. As you trace, you will also encounter All-Gather and All-Reduce in action, which will give you a solid intuition for what they do and when they are (or are not) needed.

Lastly, you will see only one ‘all reduce’ operation may be needed!

Download

The Frontier AI drawings are available to AI by Hand Academy members. You can become a member via a paid Substack subscription.