ReLU

Essential AI Math Excel Blueprints

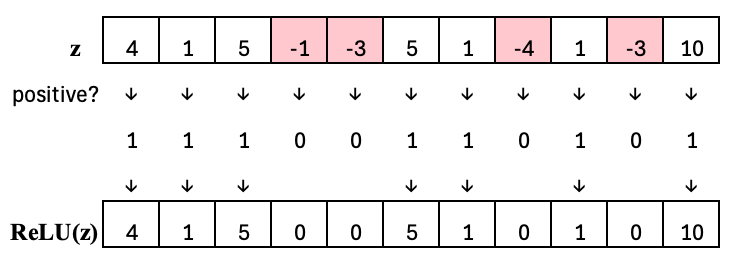

\(\mathrm{ReLU}(z) = \begin{cases} 0, & z \le 0 \\ z, & z > 0 \end{cases}\)
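As a quick worked check, the piecewise definition is equivalent to \(\max(0, z)\). Evaluating it at a few sample inputs, chosen here purely for illustration, gives:

\(\begin{aligned} \mathrm{ReLU}(-2) &= \max(0, -2) = 0 \\ \mathrm{ReLU}(0) &= \max(0, 0) = 0 \\ \mathrm{ReLU}(3) &= \max(0, 3) = 3 \end{aligned}\)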

ReLU (rectified linear unit) is a simple nonlinear activation function used in neural networks to decide which signals pass through and which are shut off. Because it outputs zero for every input \(z \le 0\) and returns positive inputs unchanged, ReLU acts like a switch: it blocks negative signals and lets positive ones flow forward.
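To make the switch behavior concrete, here is a minimal plain-Python sketch. It is not the Excel Blueprint itself. It applies ReLU element-wise to a list of pre-activation values and also returns a 0/1 mask recording which inputs were passed through. The helper names `relu` and `relu_with_mask` and the sample values are hypothetical, chosen only for illustration.

```python
from typing import List, Tuple


def relu(z: float) -> float:
    """ReLU for a single input: 0 when z <= 0, z itself when z > 0."""
    return z if z > 0 else 0.0


def relu_with_mask(zs: List[float]) -> Tuple[List[float], List[int]]:
    """Apply ReLU element-wise and record what the 'switch' did.

    Hypothetical helper for illustration. The mask holds 1 where z > 0
    (signal passes unchanged) and 0 where z <= 0 (signal is shut off).
    """
    outputs = [relu(z) for z in zs]
    mask = [1 if z > 0 else 0 for z in zs]
    return outputs, mask


if __name__ == "__main__":
    # Hypothetical pre-activation values for a small layer.
    pre_activations = [-1.5, 0.0, 2.0, -0.3, 4.2]
    outputs, mask = relu_with_mask(pre_activations)
    print(outputs)  # [0.0, 0.0, 2.0, 0.0, 4.2]
    print(mask)     # [0, 0, 1, 0, 1]
```

Returning the mask alongside the outputs mirrors the switch reading of ReLU: each mask entry says whether the corresponding input was let through or blocked.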

Excel Blueprint

This Excel Blueprint is available to AI by Hand Academy members. You can become a member via a paid Substack subscription.