Sharding

Frontier AI Excel Blueprint

Last week, you may have heard the news that Google is negotiating with Meta to supply TPUs for Meta’s new data centers, an investment in the billions of dollars. Recently, in many conversations I’ve had with Google DeepMind engineers about optimization and scaling, the same word keeps coming up: sharding.

GPUs and CUDA expect you to think in terms of threads, a very compute-centric mindset. So the conversation centers on how to fuse ops into threads.

TPUs and JAX, on the other hand, encourage a more data-centric way of thinking: how to split tensors into shards, how to place those shards across cores, and how to bring the results back together at the end. So the conversation centers on how to shard data into pieces.
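The three steps above (split, compute per core, bring back together) can be sketched for the simplest case, batch-dimension sharding. This is a minimal NumPy simulation, not the Blueprint itself. Each list entry stands in for one core, and `shard` and `batch_sharded_matmul` are hypothetical helper names. The sketch also assumes `x` has shape (batch, in) and `w` has shape (in, out), which may not match the orientation used in the Excel sheets.

```python
import numpy as np


def shard(x: np.ndarray, num_cores: int, axis: int) -> list:
    """Split a tensor into equal shards along `axis`, one shard per simulated core."""
    return np.split(x, num_cores, axis=axis)


def batch_sharded_matmul(x: np.ndarray, w: np.ndarray, num_cores: int) -> np.ndarray:
    """Data parallelism (hypothetical helper): shard X by batch, replicate W."""
    # 1. Split: each simulated core receives a contiguous slice of batch rows.
    x_shards = shard(x, num_cores, axis=0)
    # 2. Compute locally: each core multiplies its rows by its own full copy of W.
    y_shards = [xs @ w for xs in x_shards]
    # 3. Bring back together: concatenate per-core outputs along the batch dim.
    #    On real hardware this would be an all-gather, needed only if every
    #    core must hold the full output.
    return np.concatenate(y_shards, axis=0)


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 4))  # (batch, in)
w = rng.standard_normal((4, 3))  # (in, out)
y = batch_sharded_matmul(x, w, num_cores=4)
assert np.allclose(y, x @ w)  # sharded result matches the unsharded matmul
```

Because `w` is replicated, no core needs data from another core during the multiply. The only communication is collecting the per-core outputs, and only when a later step needs the whole batch in one place.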

As I see it, TPUs lift the abstraction level from kernels to whole tensors.

Even though the field is still dominated by GPUs and PyTorch developers, many recent breakthrough models that generated huge excitement, such as Nano Banana and Gemini 3, were trained at Google using TPUs and JAX. And as we reach the limits of simple scaling laws, the focus is shifting toward new algorithmic breakthroughs. In that world, today’s GPU-PyTorch dominance may not hold forever.

As I tell my students: you must be able to fuse, and you must be able to shard.

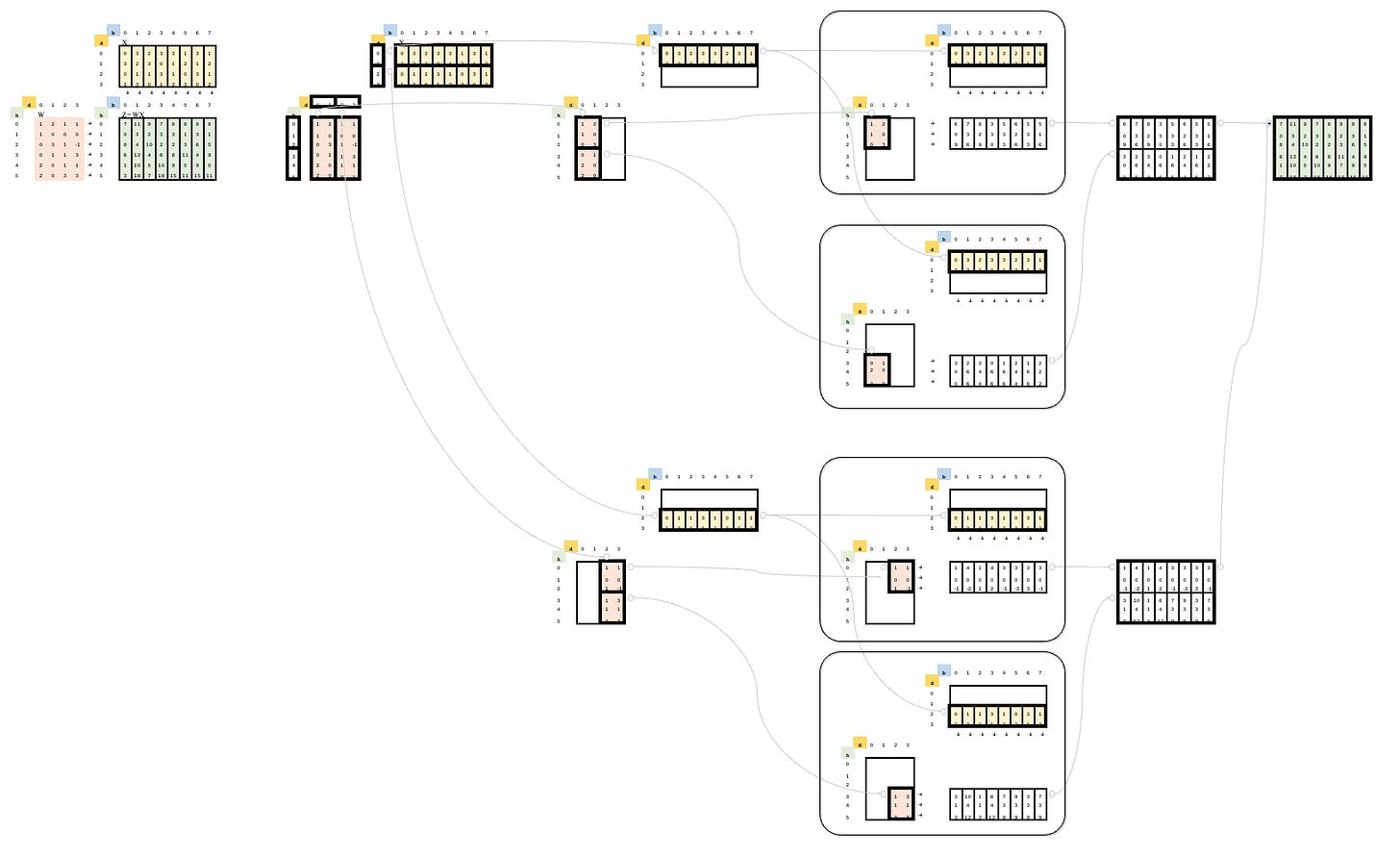

For this week’s Frontier issue, I’m sharing my Excel implementation of various sharding scenarios as a Blueprint to help you trace exactly how the math works. Along the way, you’ll also encounter the key communication ops behind them, such as all-gather (every core ends up with all of the shards) and all-reduce (every core ends up with the sum of all the cores’ partial results).
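All-gather and all-reduce come into play as soon as the weight matrix, rather than the batch, is sharded. The NumPy sketch below is a simulation under stated assumptions. Each list entry stands in for one core, and the helper names (`all_gather`, `all_reduce`, `out_dim_sharded_matmul`, `in_dim_sharded_matmul`) are hypothetical. The sketch also uses `x` as (batch, in) and `w` as (in, out), which may be transposed relative to the Excel sheets. Sharding the out dimension gives each core a distinct slice of output columns, which only needs to be gathered. Sharding the in dimension gives each core a full-size output holding a partial sum, which must be added up across cores.

```python
import numpy as np


def all_gather(shards: list, axis: int) -> list:
    """Simulated all-gather: every core ends up with all shards, concatenated."""
    full = np.concatenate(shards, axis=axis)
    return [full.copy() for _ in shards]


def all_reduce(partials: list) -> list:
    """Simulated all-reduce (sum): every core ends up with the element-wise total."""
    total = np.sum(partials, axis=0)
    return [total.copy() for _ in partials]


def out_dim_sharded_matmul(x: np.ndarray, w: np.ndarray, num_cores: int) -> list:
    # Shard W along its out dimension (columns of an (in, out) matrix); X is replicated.
    w_shards = np.split(w, num_cores, axis=1)
    # Each core computes a different slice of output columns, with no partial sums.
    y_shards = [x @ ws for ws in w_shards]
    # All-gather stitches the column slices into the full output on every core.
    return all_gather(y_shards, axis=1)


def in_dim_sharded_matmul(x: np.ndarray, w: np.ndarray, num_cores: int) -> list:
    # Shard the shared in dimension: columns of X and the matching rows of W.
    x_shards = np.split(x, num_cores, axis=1)
    w_shards = np.split(w, num_cores, axis=0)
    # Each core computes a full-size output that holds only a partial sum.
    partials = [xs @ ws for xs, ws in zip(x_shards, w_shards)]
    # All-reduce adds the partial sums so every core holds the complete result.
    return all_reduce(partials)


rng = np.random.default_rng(0)
x = rng.standard_normal((2, 4))  # (batch, in)
w = rng.standard_normal((4, 6))  # (in, out)
for y_core in out_dim_sharded_matmul(x, w, num_cores=2):
    assert np.allclose(y_core, x @ w)
for y_core in in_dim_sharded_matmul(x, w, num_cores=2):
    assert np.allclose(y_core, x @ w)
```

On real hardware these collectives run over the chip interconnect. In JAX, `jax.lax.all_gather` and `jax.lax.psum` (an all-reduce with summation) are the corresponding primitives.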

Table of Contents

Matrix Multiplication

Batch Dimension Sharding (Data Parallelism)

Out-Dimension Sharding (Tensor Parallelism)

In-Dimension Sharding

Out-Dimension x In-Dimension Sharding (2 x 2)

Out-Dimension x In-Dimension Sharding (2 x 2) - Compact Form

Out-Dimension x In-Dimension Sharding (3 x 2) - Compact Form

Out-Dimension x In-Dimension x Batch-Dimension Sharding (3 x 2 x 2) over 8 cores

Out-Dimension x In-Dimension x Batch-Dimension Sharding (3 x 2 x 2) over 4 cores

Out-Dimension to In-Dimension Sharding (Row Parallel -> Column Parallel)

Out-Dimension to Out-Dimension Sharding (Row Parallel -> Row Parallel)

Fully Sharded Data Parallelism (FSDP)

Download

This Frontier AI Excel Blueprint is available to AI by Hand Academy members. You can become a member via a paid Substack subscription.