Swish (SiLU)

Essential AI Math Excel Blueprints

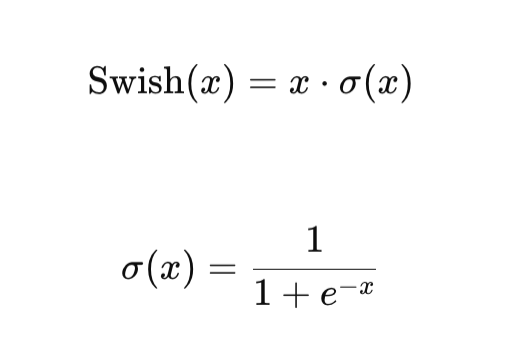

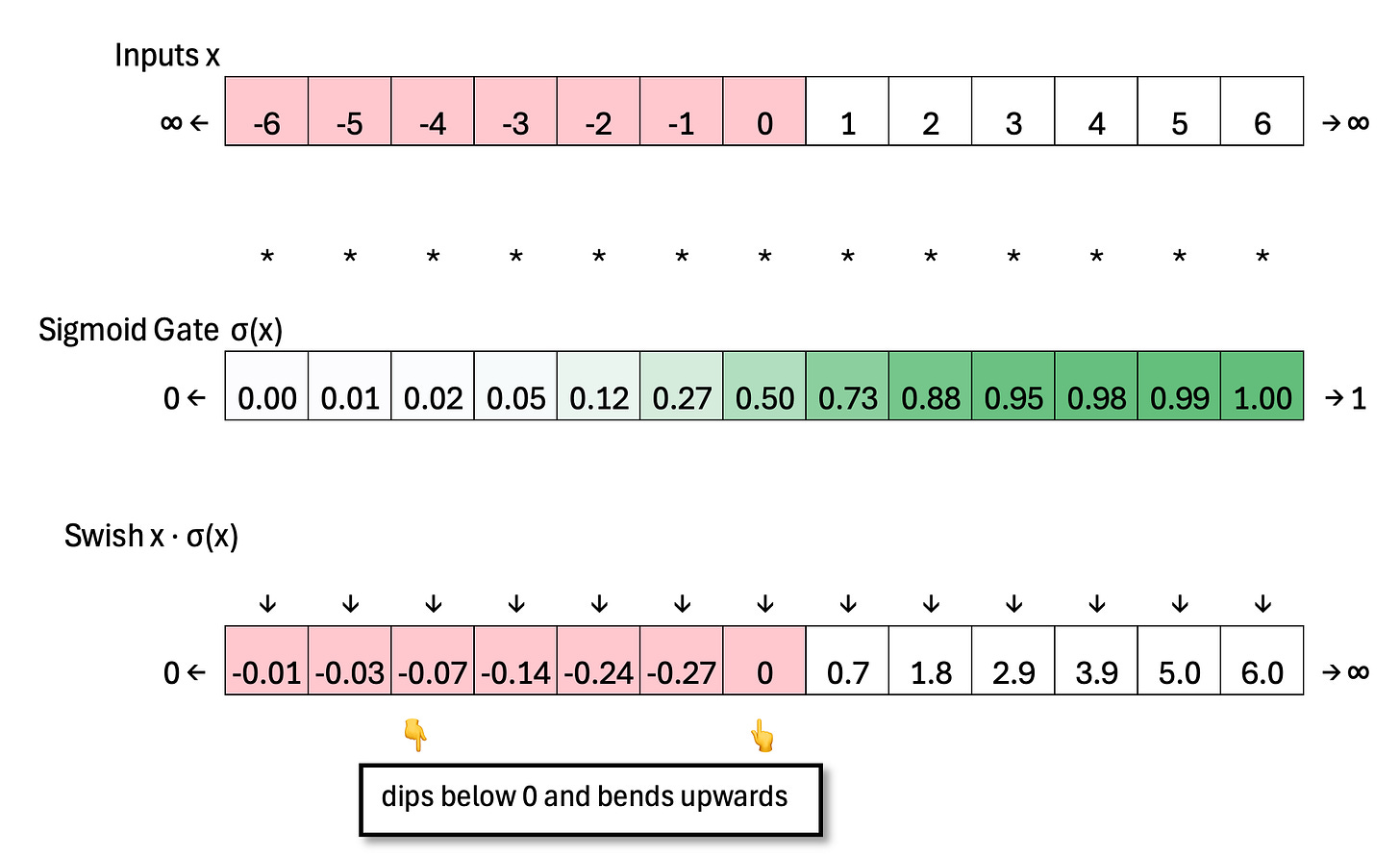

Swish, also known as the Sigmoid Linear Unit (SiLU), is a smooth, self-gated activation function: the gate is computed from the input itself. Instead of abruptly cutting off negative inputs like ReLU, Swish multiplies the input x by a sigmoid gate σ(x), whose value always lies between 0 and 1, so that Swish(x) = x · σ(x). For large positive values, the gate approaches 1 and the function behaves like a linear pass-through. For large negative values, the gate approaches 0, gradually suppressing the signal.
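To make the gating concrete, here is a minimal Python sketch of the definition above. The function names `sigmoid` and `swish` and the sample inputs are illustrative choices, not taken from the Excel Blueprint, and the branch on the sign of x is only one common way to avoid overflow.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, split by sign so math.exp never sees a large positive argument."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def swish(x: float) -> float:
    """Swish / SiLU: the input multiplied by its own sigmoid gate."""
    return x * sigmoid(x)


# Illustrative inputs: the gate moves from near 0 to near 1 as x grows.
for x in (-10.0, -1.0, 0.0, 1.0, 10.0):
    print(f"x={x:6.1f}  gate={sigmoid(x):.4f}  swish={swish(x):.4f}")
```

At x = 10 the gate is close to 1, so the output is close to x itself. At x = -10 the gate is close to 0, so the output is a small negative number rather than exactly 0.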

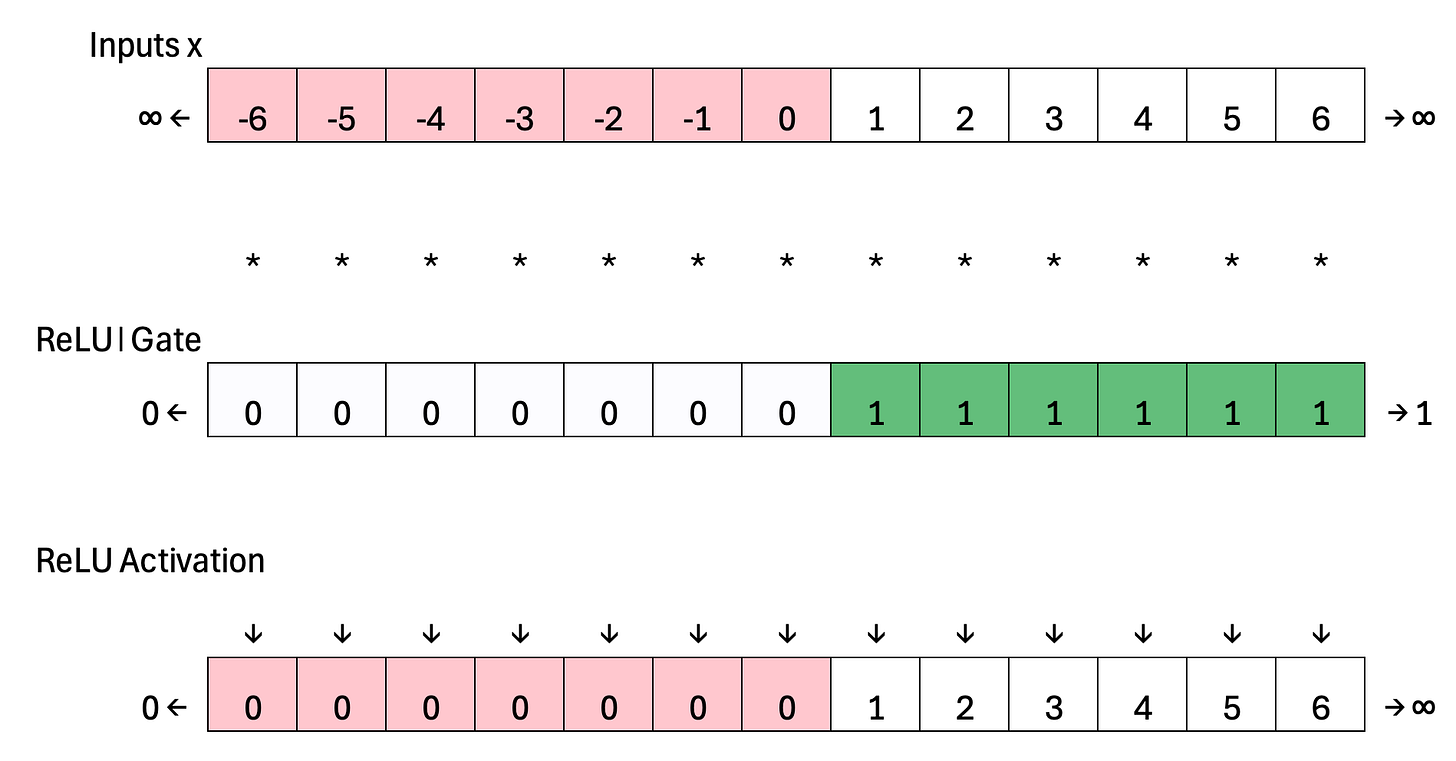

Below is the ReLU activation for comparison. You can think of ReLU as using a hard gate: the gate value is 0 when x <= 0, and 1 when x > 0. This creates a sharp transition at x = 0. Swish replaces this sharp transition with a smooth “swish” transition (pun intended).
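The hard-gate versus soft-gate view can be sketched in a few lines of Python. The helper names `hard_gate` and `soft_gate` are hypothetical, chosen only to mirror the wording above, and the plain sigmoid here assumes moderate input values.

```python
import math


def hard_gate(x: float) -> float:
    """ReLU's gate: 0 when x <= 0, 1 when x > 0."""
    return 1.0 if x > 0 else 0.0


def soft_gate(x: float) -> float:
    """Swish's gate: the sigmoid of x (simple form, fine for moderate x)."""
    return 1.0 / (1.0 + math.exp(-x))


def relu(x: float) -> float:
    # Adding 0.0 turns an IEEE negative zero into 0.0 so it prints cleanly.
    return x * hard_gate(x) + 0.0


def swish(x: float) -> float:
    return x * soft_gate(x)


# Both activations are "input times gate"; only the gate differs.
for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(f"x={x:5.1f}  relu={relu(x):.3f}  swish={swish(x):.3f}")
```

Written this way, ReLU and Swish share the same structure. The hard gate jumps from 0 to 1 at x = 0, while the soft gate passes through 0.5 there and changes gradually on either side.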

Excel Blueprint

This Excel Blueprint is available to AI by Hand Academy members. You can become a member via a paid Substack subscription.