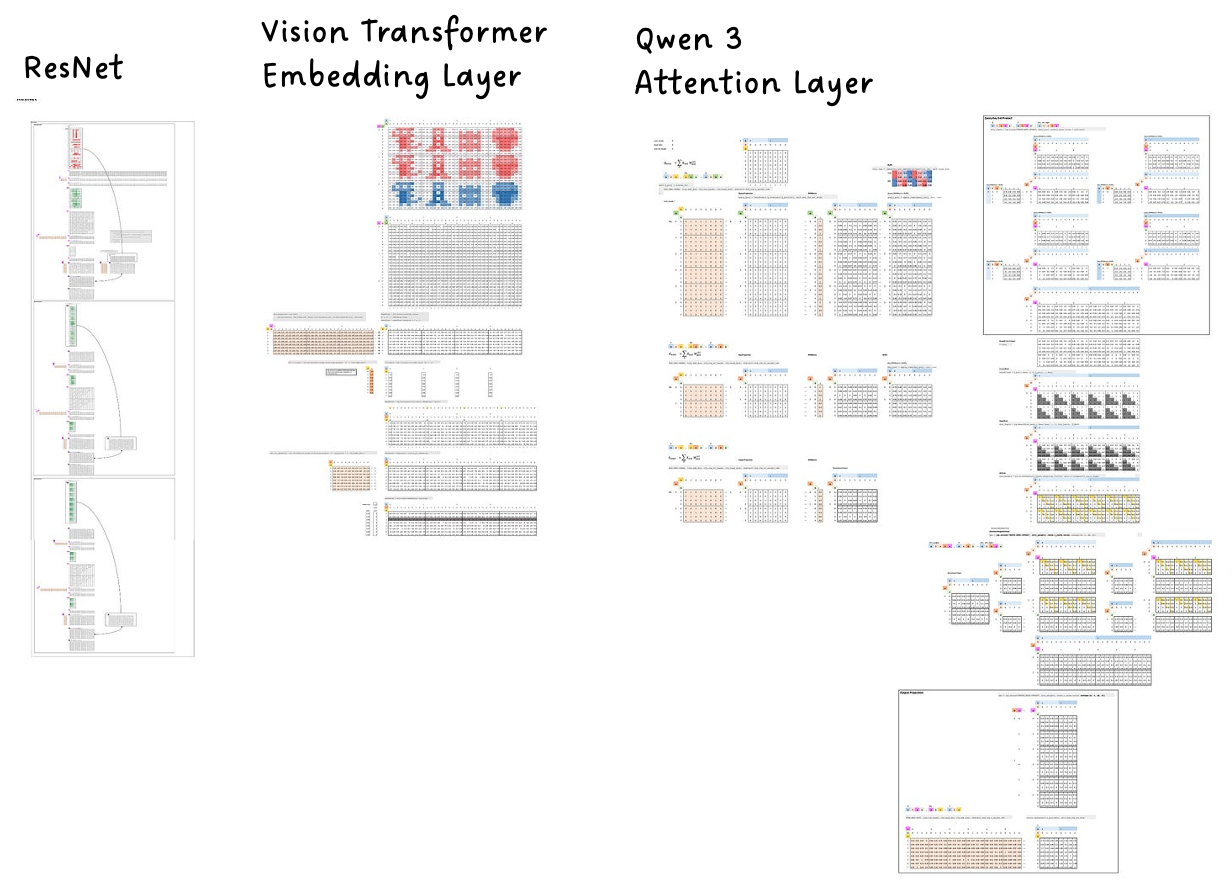

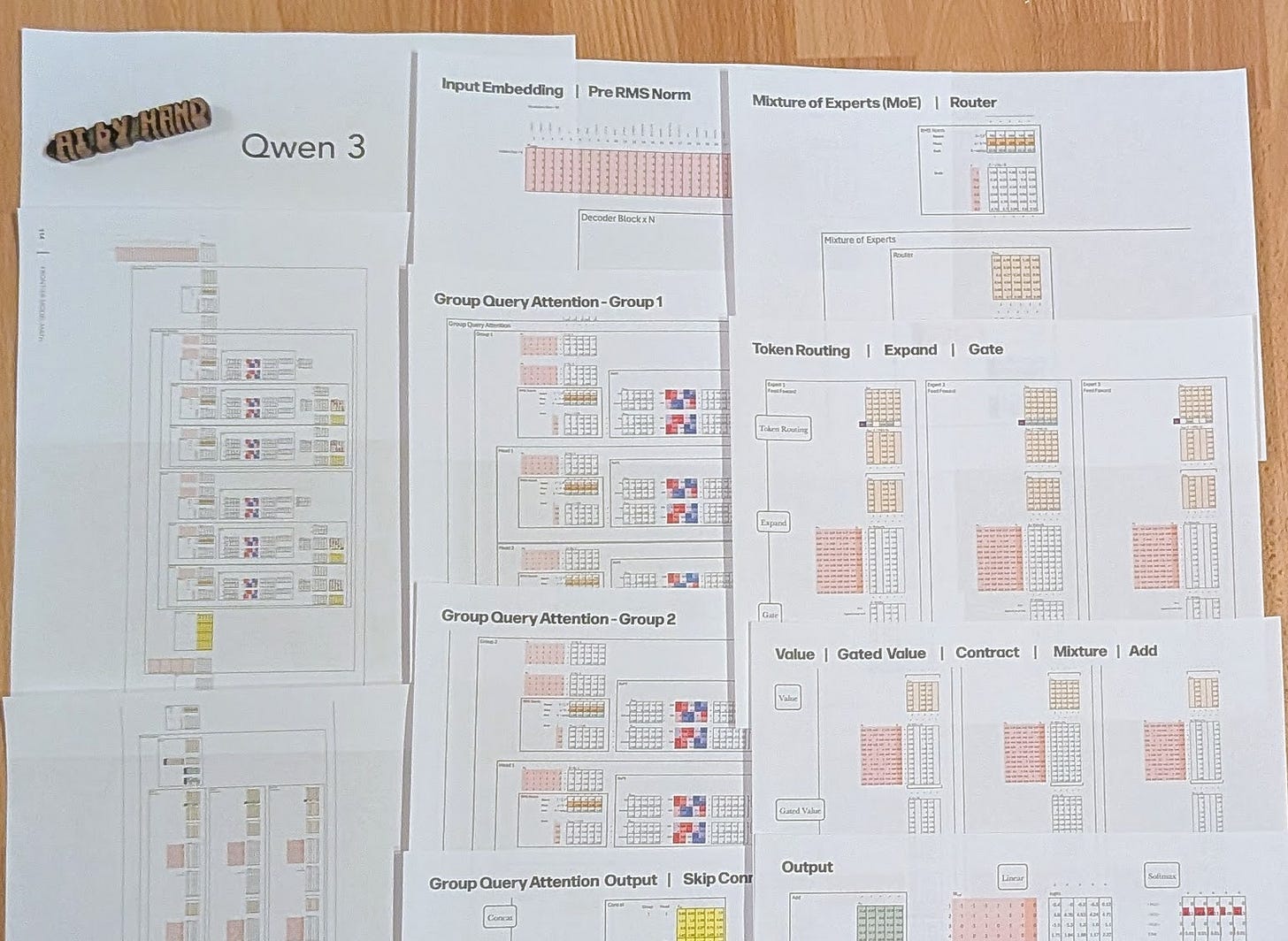

ResNet, ViT, Qwen3 Blueprint (Google Bonsai)

Frontier AI Excel Blueprint

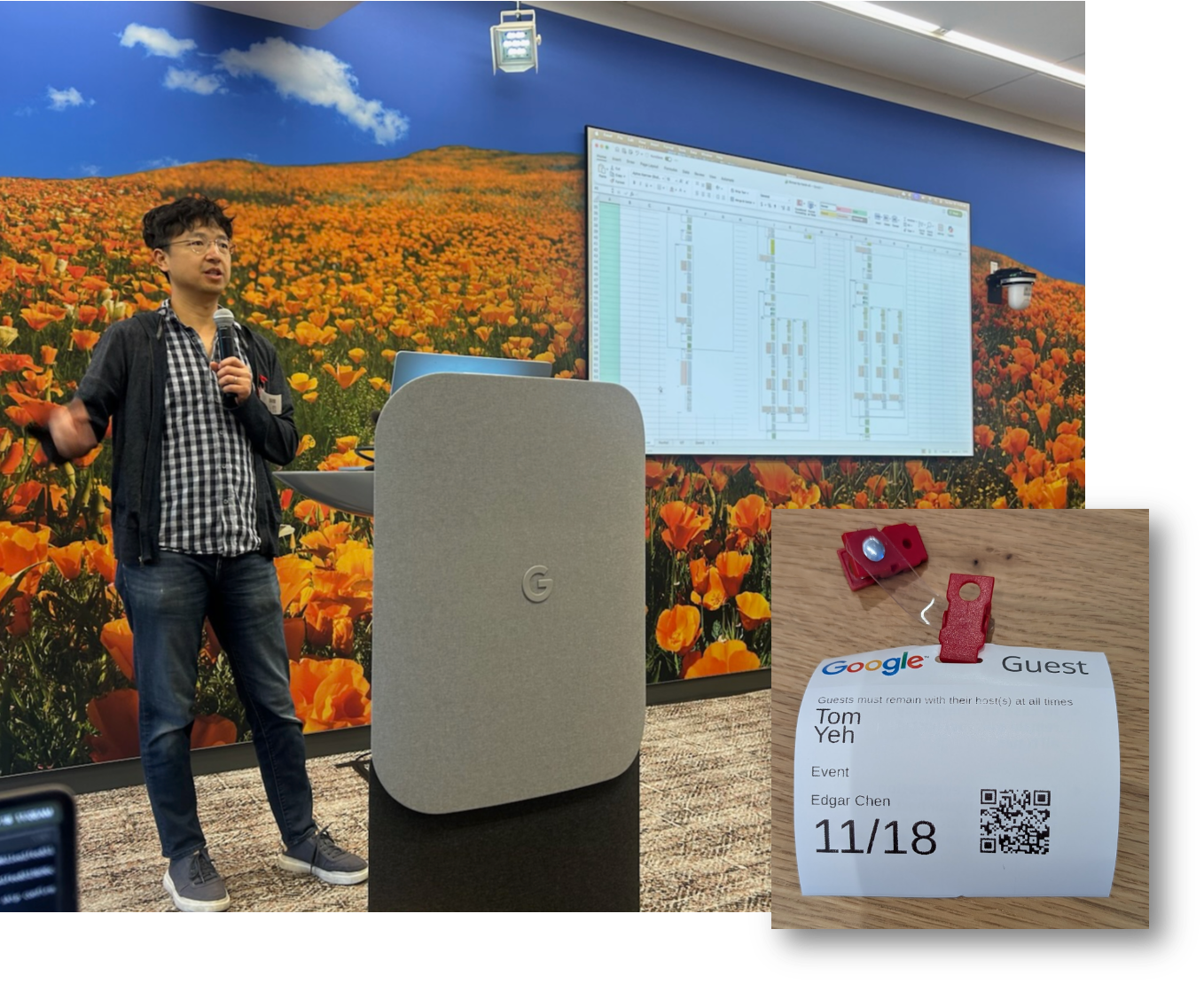

Today Google announced Gemini 3, and I “coincidentally” happened to be invited to Google to give a presentation on a new set of Excel Blueprints I built for ResNet, Vision Transformer (ViT), and Qwen 3 Attention—mapping every tensor operation directly to its production-grade JAX implementation in Google’s Bonsai repo.

I’m glad to share these Blueprints with you on this very day.

Bonsai is a collection of minimal, lightweight JAX implementations of popular models. A big thank-you to Jen Ha and James Chapman of the Bonsai Team at Google for making this collaboration possible.

Please take a moment to check out Bonsai (https://github.com/jax-ml/bonsai) and give it a star ⭐️!

Gemini 3 is proprietary, of course, but its core building blocks are almost certainly shared with other frontier models like Qwen 3 and grounded in classic architectures like ResNet, the Transformer, and the Vision Transformer. That’s why these Blueprints are so useful: they help engineers actually understand the math and implementation details inside the black box.

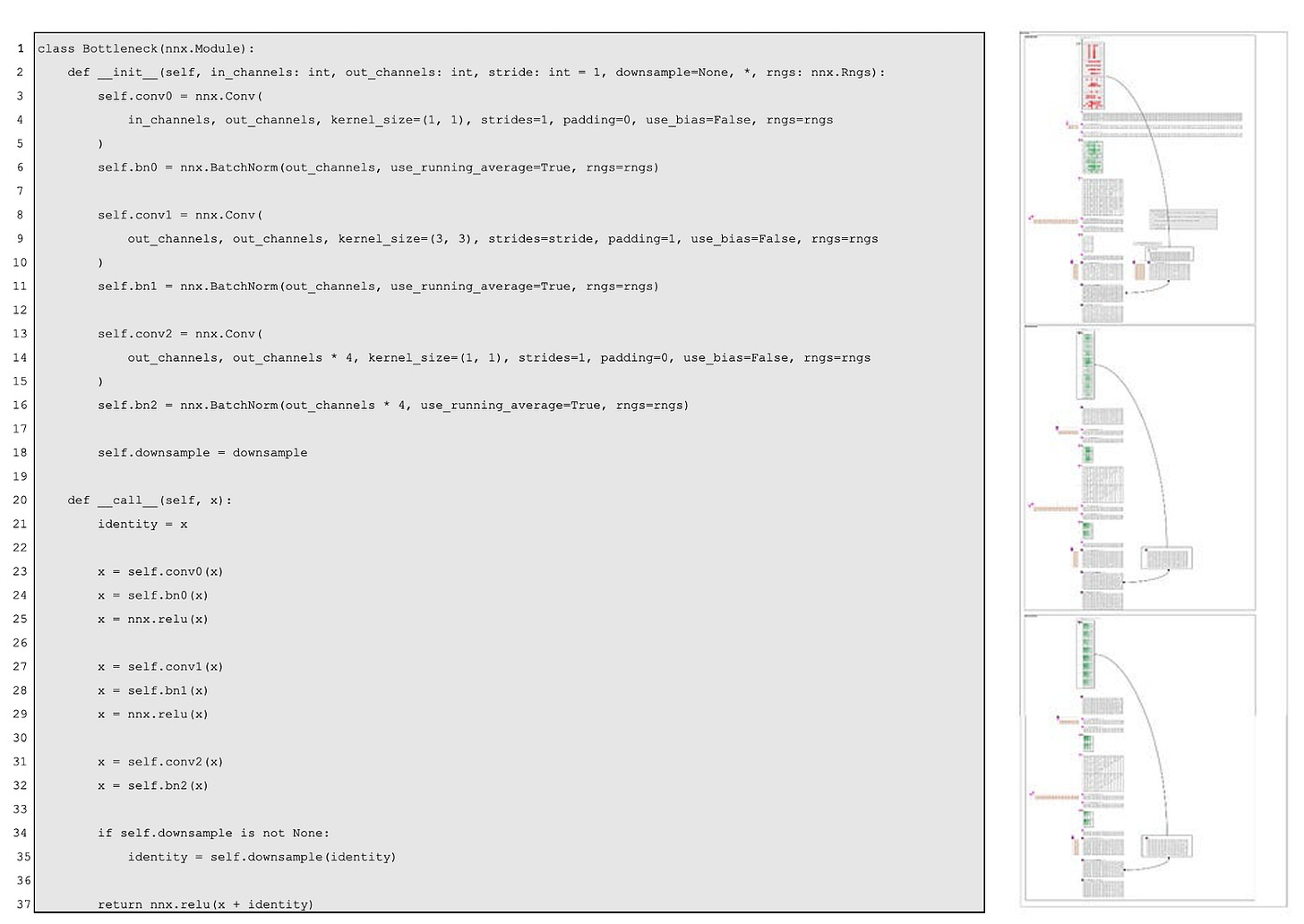

ResNet is still the most cited AI paper ever. Its breakthrough, the skip connection, made very deep networks trainable by giving the signal and its gradients a direct path around each block, easing the vanishing-gradient and degradation problems that held back plain deep stacks. You can see these skip paths clearly in the Blueprint. And because ResNet isn’t a frontier model, I’ve included the full Blueprint here for anyone to download and learn from.
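To make the skip path concrete, here is a minimal JAX sketch of a basic residual block, y = relu(x + F(x)), where F is two 3x3 convolutions with a ReLU in between. It is an illustration under simplifying assumptions, not Bonsai’s ResNet code: batch normalization is left out, input and output shapes are assumed equal so the skip path is a plain identity (no projection), and the names `conv3x3` and `residual_block` are hypothetical.

```python
import jax
import jax.numpy as jnp


def conv3x3(x, w):
    """3x3 convolution, stride 1, 'SAME' padding.

    x: (batch, height, width, in_channels)   NHWC layout
    w: (3, 3, in_channels, out_channels)     HWIO layout
    """
    return jax.lax.conv_general_dilated(
        x, w,
        window_strides=(1, 1),
        padding="SAME",
        dimension_numbers=("NHWC", "HWIO", "NHWC"),
    )


def residual_block(x, w1, w2):
    """Hypothetical basic block: relu(x + F(x)) with F = conv -> relu -> conv.

    Batch normalization is omitted to keep the sketch short (assumption).
    """
    h = jax.nn.relu(conv3x3(x, w1))  # first conv + ReLU
    f = conv3x3(h, w2)               # second conv: the residual branch F(x)
    return jax.nn.relu(x + f)        # skip connection: add the input back


key = jax.random.PRNGKey(0)
k_x, k_1, k_2 = jax.random.split(key, 3)
x = jax.random.normal(k_x, (2, 8, 8, 16))
w1 = 0.1 * jax.random.normal(k_1, (3, 3, 16, 16))
w2 = 0.1 * jax.random.normal(k_2, (3, 3, 16, 16))

y = residual_block(x, w1, w2)
print(y.shape)  # (2, 8, 8, 16)

# If the residual branch outputs zeros, the block reduces to relu(x).
y_identity = residual_block(x, w1, jnp.zeros_like(w2))
print(jnp.allclose(y_identity, jax.nn.relu(x)))  # True
```

Zeroing the second convolution shows why the design helps: a new block can start out close to passing its input straight through, and the `x +` term gives the gradient a route that bypasses F entirely.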

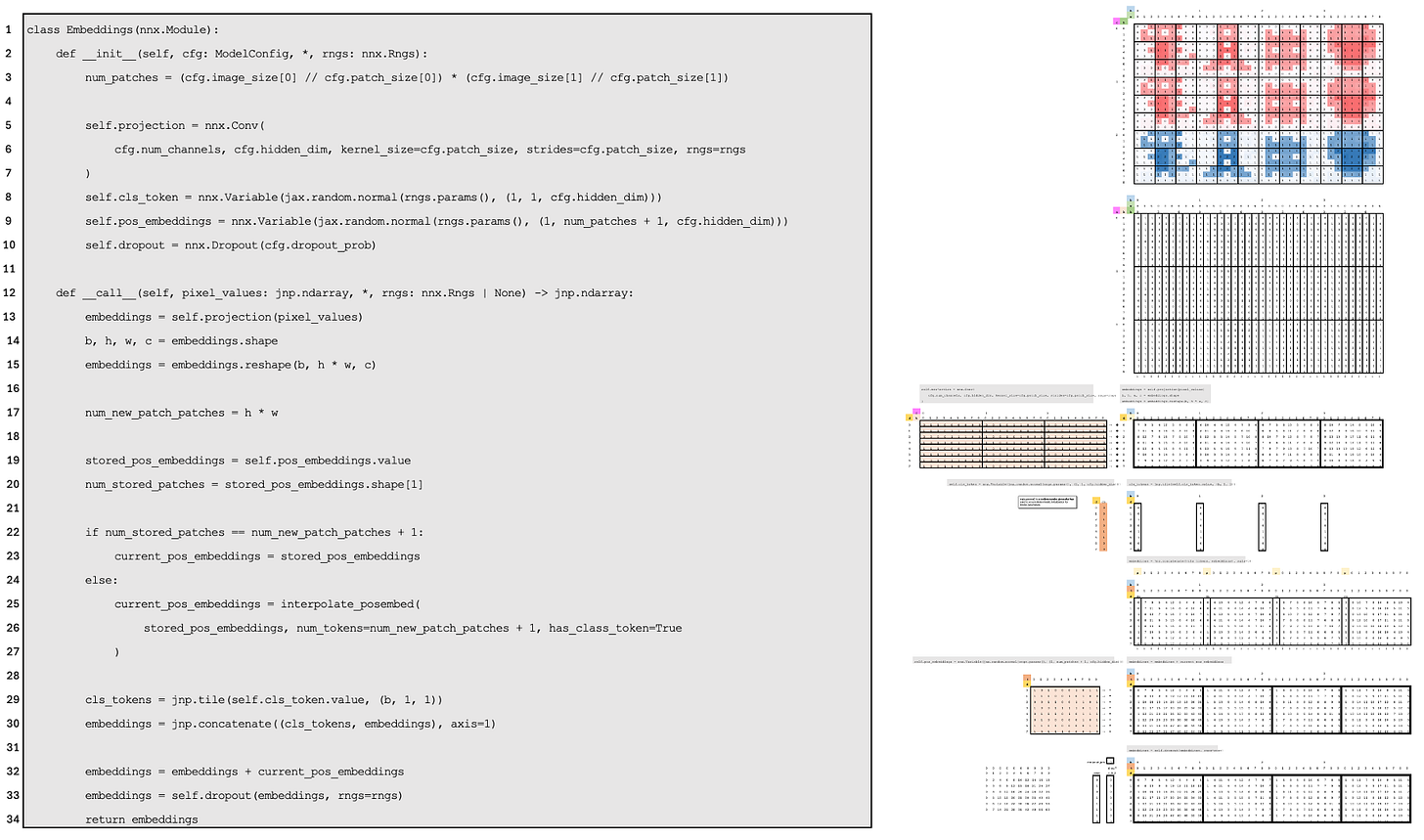

The Vision Transformer (ViT) changed computer vision by showing that we can treat an image like a sequence of tokens and run pure Transformer attention on it. Its building blocks—patch embeddings, positional encodings, multi-head attention, and MLP layers—are easy to visualize and understand. In this issue, I will focus only on the patch embedding layer to keep the explanation grounded and accessible.
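The patch embedding step can be sketched in a few lines of JAX. This is a minimal sketch, assuming channels-last images (batch, height, width, channels) whose sides divide evenly by the patch size; the helper names `patchify` and `patch_embed` are hypothetical and not taken from Bonsai. It cuts each image into non-overlapping P×P patches, flattens every patch into a vector, and applies one shared linear projection to get D-dimensional tokens. It then checks a common alternative formulation, a convolution whose kernel size and stride both equal the patch size, against the reshape-and-matmul version.

```python
import jax
import jax.numpy as jnp


def patchify(images, patch_size):
    """Cut images into non-overlapping patches and flatten each one.

    images: (B, height, width, C), with height and width divisible by patch_size
    returns: (B, num_patches, patch_size * patch_size * C)
    """
    b, h, w, c = images.shape
    p = patch_size
    x = images.reshape(b, h // p, p, w // p, p, c)  # split each spatial axis into (grid, within-patch)
    x = x.transpose(0, 1, 3, 2, 4, 5)               # (B, grid_h, grid_w, p, p, C)
    return x.reshape(b, (h // p) * (w // p), p * p * c)


def patch_embed(images, w_proj, b_proj, patch_size):
    """Project every flattened patch to a D-dimensional token with one shared linear layer."""
    return patchify(images, patch_size) @ w_proj + b_proj  # (B, num_patches, D)


key = jax.random.PRNGKey(0)
k_img, k_w = jax.random.split(key)
patch_size, channels, d_model = 8, 3, 64
images = jax.random.normal(k_img, (2, 32, 32, channels))
w_proj = 0.02 * jax.random.normal(k_w, (patch_size * patch_size * channels, d_model))
b_proj = jnp.zeros((d_model,))

tokens = patch_embed(images, w_proj, b_proj, patch_size)
print(tokens.shape)  # (2, 16, 64): a 4 x 4 grid of patches per image

# Same result as a convolution with kernel size = stride = patch size.
kernel = w_proj.reshape(patch_size, patch_size, channels, d_model)  # HWIO
conv = jax.lax.conv_general_dilated(
    images, kernel,
    window_strides=(patch_size, patch_size),
    padding="VALID",
    dimension_numbers=("NHWC", "HWIO", "NHWC"),
)                                                      # (B, 4, 4, D)
tokens_conv = conv.reshape(2, -1, d_model) + b_proj    # (B, 16, D)
print(jnp.allclose(tokens, tokens_conv, atol=1e-3))    # True
```

The two views compute the same numbers; the convolution form is simply a convenient way to fuse the reshape and the projection. Position embeddings (and, in the original ViT, a prepended class token) come after this step.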

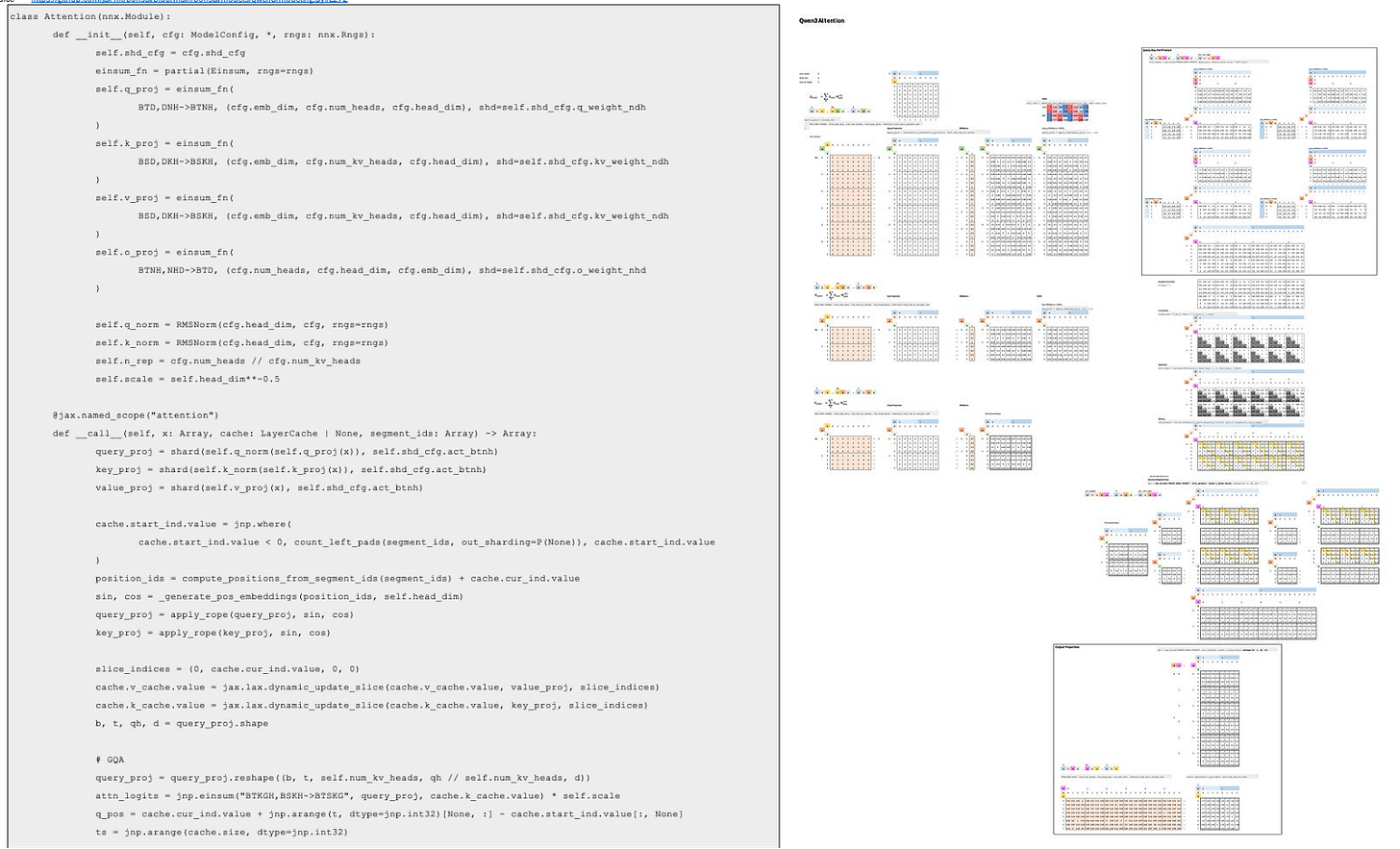

Qwen 3 is Alibaba’s frontier model, and I’ve already built complete worksheets for it.

This Blueprint goes further with a refined tensor arrangement that maps cleanly to Bonsai’s JAX implementation, making it much easier to trace and understand.

Bonsai’s implementation of Qwen 3 relies heavily on einsum, so I did my best to lay out the axes clearly, making it easy to line up the math equations, the einsum expressions (e.g., BTD, DNH → BTNH), and the corresponding tensors. If you need a deeper primer on einsum, see my previous issue, where I shared a comprehensive Blueprint on einsum.
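Here is a minimal JAX sketch of causal multi-head self-attention written entirely with `jnp.einsum`, to show how the axis letters line up. The letters are our reading of the convention (B = batch, T = query positions, S = key/value positions, D = model width, N = heads, H = head dimension), and the weight layouts (D, N, H) and (N, H, D) are assumptions, not copied from Bonsai. The function name `causal_self_attention` is hypothetical. It deliberately leaves out pieces a real Qwen 3 attention layer has, such as rotary position embeddings, QK normalization, and grouped-query attention with fewer key/value heads than query heads.

```python
import jax
import jax.numpy as jnp


def causal_self_attention(x, w_q, w_k, w_v, w_o):
    """Hypothetical causal multi-head self-attention using einsum.

    x:             (B, T, D) input activations
    w_q, w_k, w_v: (D, N, H) projection weights (assumed layout)
    w_o:           (N, H, D) output projection (assumed layout)
    Omits RoPE, QK-norm, and grouped-query attention.
    """
    q = jnp.einsum("BTD,DNH->BTNH", x, w_q)   # queries, split into N heads
    k = jnp.einsum("BSD,DNH->BSNH", x, w_k)   # keys (S indexes the same positions)
    v = jnp.einsum("BSD,DNH->BSNH", x, w_v)   # values
    scale = q.shape[-1] ** -0.5               # 1 / sqrt(H)
    logits = jnp.einsum("BTNH,BSNH->BNTS", q, k) * scale
    t = x.shape[1]
    causal = jnp.tril(jnp.ones((t, t), dtype=bool))             # query t sees keys s <= t
    logits = jnp.where(causal, logits, jnp.finfo(logits.dtype).min)
    probs = jax.nn.softmax(logits, axis=-1)                      # normalize over S
    ctx = jnp.einsum("BNTS,BSNH->BTNH", probs, v)               # weighted sum of values
    return jnp.einsum("BTNH,NHD->BTD", ctx, w_o)                # merge heads back to D


key = jax.random.PRNGKey(0)
k_x, k_q, k_k, k_v, k_o = jax.random.split(key, 5)
B, T, D, N, H = 2, 5, 32, 4, 8
x = jax.random.normal(k_x, (B, T, D))
w_q = 0.1 * jax.random.normal(k_q, (D, N, H))
w_k = 0.1 * jax.random.normal(k_k, (D, N, H))
w_v = 0.1 * jax.random.normal(k_v, (D, N, H))
w_o = 0.1 * jax.random.normal(k_o, (N, H, D))

out = causal_self_attention(x, w_q, w_k, w_v, w_o)
print(out.shape)  # (2, 5, 32)

# Causality check: perturbing the last token must not change earlier outputs.
x2 = x.at[:, -1, :].add(1.0)
out2 = causal_self_attention(x2, w_q, w_k, w_v, w_o)
print(jnp.allclose(out[:, :-1], out2[:, :-1]))  # True
```

Reading each einsum string left to right gives the tensor shapes directly: the first projection contracts D away and splits the result into N heads of size H, and the last one contracts both N and H to merge the heads back into D.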

Download

These Frontier AI Excel Blueprints are available to AI by Hand Academy members. You can become a member via a paid Substack subscription.