Transformer - Six Levels of Understanding

AI by Hand ✍️ Seminar Series

This week, in the seventh seminar of 2026, I taught Transformers—but instead of jumping straight into attention diagrams and equations, I used the opportunity to guide everyone through six levels of understanding.

We first practiced on something small and concrete—an artificial neuron—going deeper level by level from (1) black box to (2) components, to (3) tensors, to (4) math, to (5) Excel, and finally to (6) coding. Then we applied the exact same progression to the transformer. My goal was to show the audience how to fish, not just hand them a fish—so they can use this framework to unpack any architecture they encounter next.

Artificial Neuron

Level 1 — Black Box: You see an artificial neuron as a function that takes inputs and produces a single output.

Level 2 — Components: You identify its internal parts—inputs, weights, bias, activation function, and output.

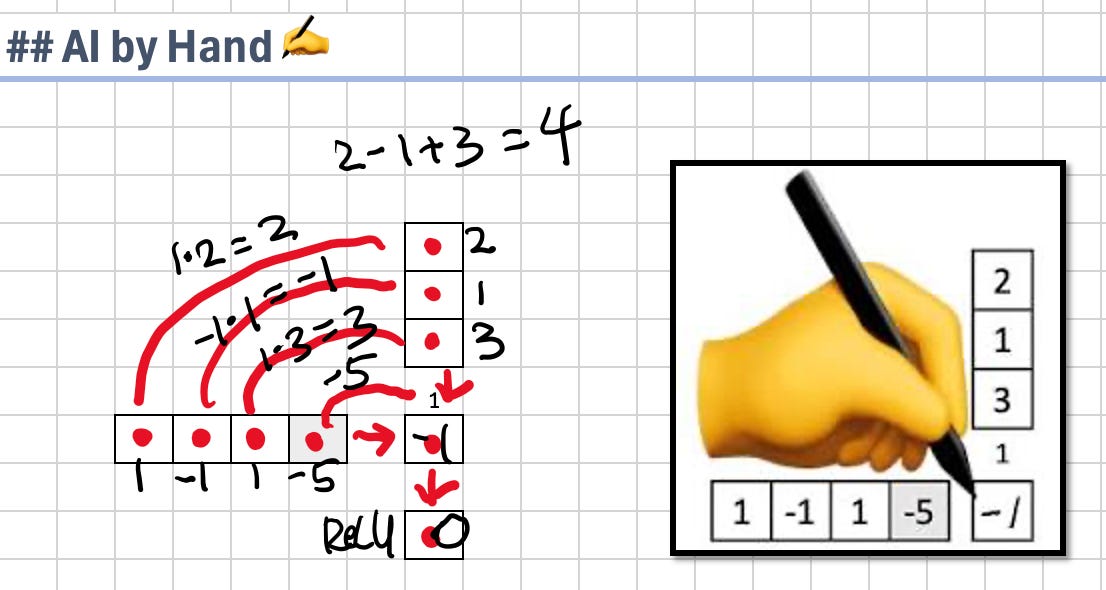

Level 3 — Tensors (AI by Hand ✍️): You trace how each input aligns with a corresponding weight, forms a dot product, adds bias, and passes through an activation gate.

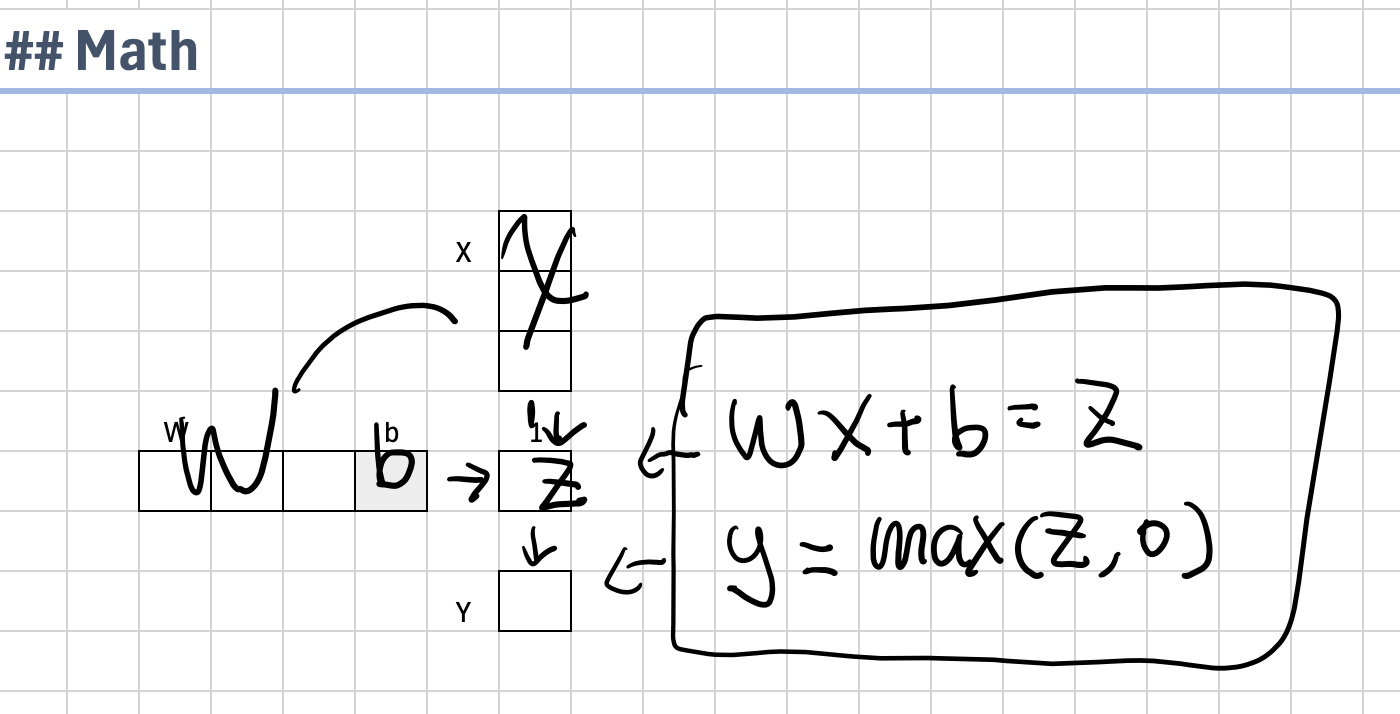
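The Level 3 trace fits in a few lines of Python. This is a minimal sketch, assuming a ReLU activation (the post does not name the activation used in the seminar); the inputs, weights, and bias are hypothetical values chosen so the arithmetic can be checked by hand.

```python
def relu(z: float) -> float:
    """Activation gate (ReLU assumed): pass positive values, block negative ones."""
    return max(0.0, z)


def neuron(x: list[float], w: list[float], b: float) -> float:
    """Forward pass of a single artificial neuron."""
    if len(x) != len(w):
        raise ValueError("each input needs exactly one corresponding weight")
    # Align each input with its weight and sum the products (dot product).
    z = sum(xi * wi for xi, wi in zip(x, w))
    # Add the bias, then pass the result through the activation gate.
    return relu(z + b)


x = [2.0, 3.0, 1.0]   # hypothetical inputs
w = [1.0, -1.0, 2.0]  # hypothetical weights, one per input
b = 0.5               # hypothetical bias
print(neuron(x, w, b))  # 2 - 3 + 2 + 0.5 = 1.5
```

Changing a single number shows the gate at work: with b = -2.0 the pre-activation becomes -1.0 and the ReLU outputs 0.0.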

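Level 4 — Math: You express it symbolically as y = f(w · x + b) and understand what each symbol represents: w · x is the dot product of the weights and inputs, b is the bias, and f is the activation function.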
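With the activation function f written explicitly, the neuron's equation and a worked example look like this. The values are hypothetical, and f is assumed to be ReLU.

```latex
y = f\left(\mathbf{w}\cdot\mathbf{x} + b\right) = f\left(\sum_{i=1}^{n} w_i x_i + b\right)

% Worked example (hypothetical values, f = ReLU):
% \mathbf{w} = (1, -1, 2),\ \mathbf{x} = (2, 3, 1),\ b = 0.5
y = \max\bigl(0,\; (1)(2) + (-1)(3) + (2)(1) + 0.5\bigr) = \max(0,\; 1.5) = 1.5
```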

Level 5 — Excel: You replicate the full computation step by step in Excel—without libraries, abstractions, or training wheels—so you can directly observe how changing numbers affects the output.

Level 6 — Coding: You implement it in code only after working through the earlier levels and earning the right to code, that is, once you can clearly verbalize its logic. At that point you can even use a coding model like OpenAI’s Codex or Anthropic’s Opus, so long as you can describe what you want.

Transformer

Level 1 — Black Box: You see a transformer as a function that takes tokens as input and produces the next-token probability distribution as output.

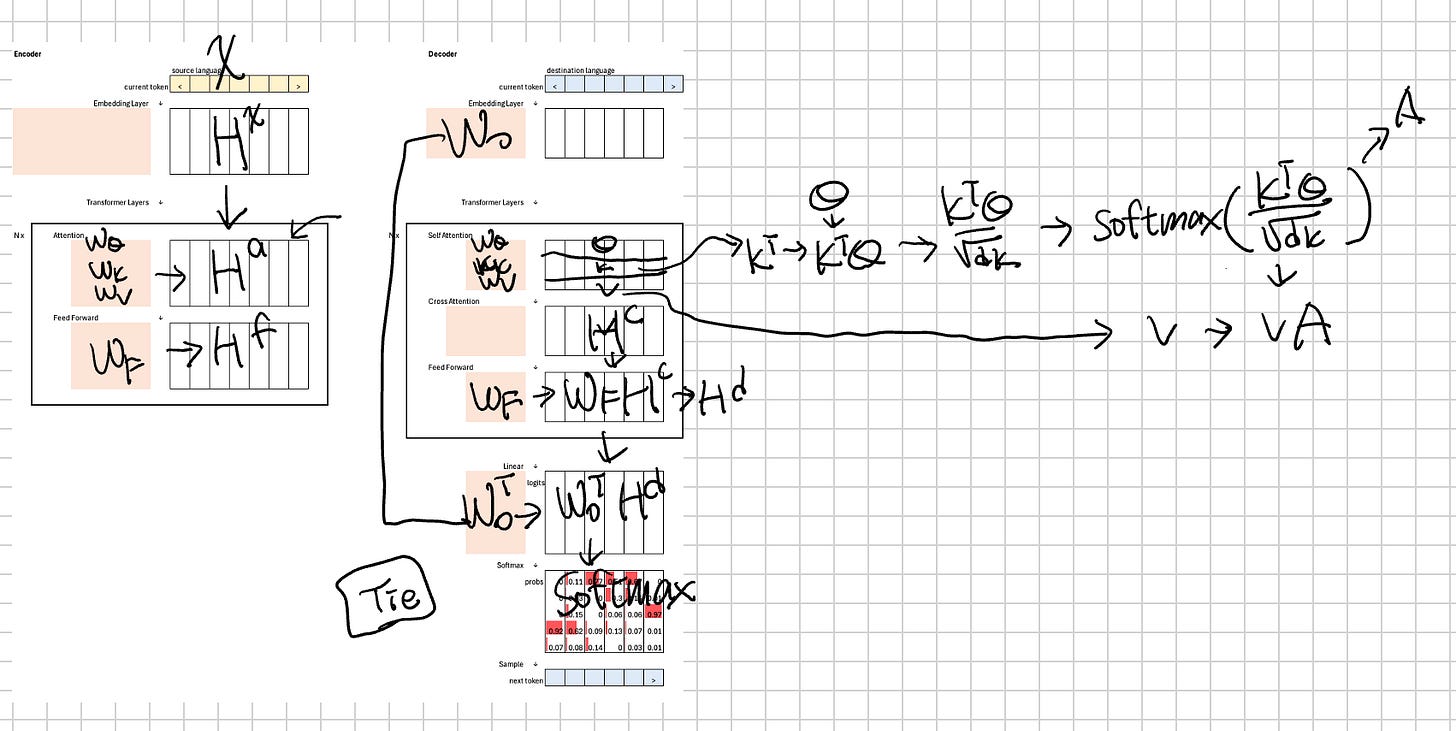

Level 2 — Components: You identify its internal parts—embeddings, positional encoding, self-attention, feed-forward networks, residual connections, layer normalization, and the final linear + softmax head.

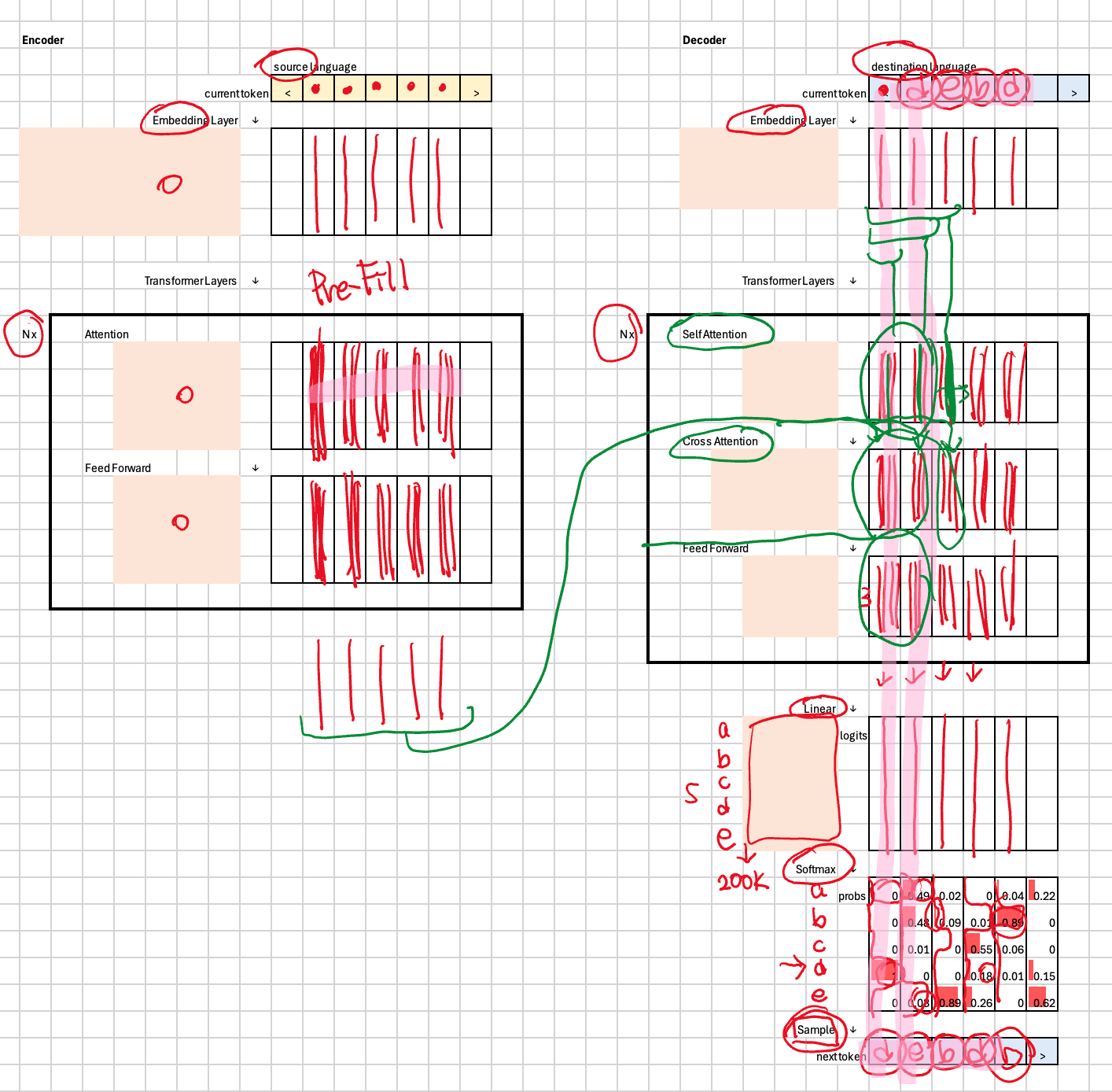
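The components listed above can be wired into a single forward pass that also matches the Level 1 black box: token ids go in, a probability distribution over the vocabulary comes out. Below is a minimal NumPy sketch under assumptions the post does not state: a decoder-only model with one layer and one attention head, sinusoidal positional encoding, a ReLU feed-forward network, post-layer-norm residual connections as in the original Transformer paper, and random untrained weights. All sizes and names (such as `next_token_probs`) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, d_model, d_ff = 10, 8, 16  # hypothetical toy sizes

# Hypothetical, untrained parameters (a real model learns these).
E = rng.normal(0, 0.1, (vocab_size, d_model))                  # token embeddings
W_q, W_k, W_v, W_o = (rng.normal(0, 0.1, (d_model, d_model)) for _ in range(4))
W_1 = rng.normal(0, 0.1, (d_model, d_ff))                      # FFN expand
W_2 = rng.normal(0, 0.1, (d_ff, d_model))                      # FFN contract
W_out = rng.normal(0, 0.1, (d_model, vocab_size))              # final linear head


def positional_encoding(n: int, d: int) -> np.ndarray:
    """Sinusoidal positional encoding, shape (n, d)."""
    pos = np.arange(n)[:, None]
    i = np.arange(d)[None, :]
    angle = pos / np.power(10000, (2 * (i // 2)) / d)
    return np.where(i % 2 == 0, np.sin(angle), np.cos(angle))


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def layer_norm(x: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    # Learnable scale and shift omitted for brevity.
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def self_attention(x: np.ndarray) -> np.ndarray:
    n = x.shape[0]
    q, k, v = x @ W_q, x @ W_k, x @ W_v
    scores = q @ k.T / np.sqrt(d_model)
    # Causal mask: token t may attend only to tokens 0..t.
    scores = np.where(np.tril(np.ones((n, n), dtype=bool)), scores, -np.inf)
    return softmax(scores) @ v @ W_o


def next_token_probs(tokens: list[int]) -> np.ndarray:
    x = E[tokens] + positional_encoding(len(tokens), d_model)  # (n, d_model)
    x = layer_norm(x + self_attention(x))                      # residual + norm
    ffn = np.maximum(0, x @ W_1) @ W_2                         # ReLU feed-forward
    x = layer_norm(x + ffn)                                    # residual + norm
    logits = x[-1] @ W_out                                     # last position only
    return softmax(logits)                                     # (vocab_size,)


probs = next_token_probs([3, 1, 4])
print(probs.shape, probs.sum())
```

Because the weights are random, the distribution itself is meaningless; the point is that every listed component appears exactly once on the path from tokens to probabilities.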

Level 3 — Tensors (AI by Hand ✍️): You trace how tokens become vectors, how all input tokens can be processed in parallel (in the encoder, or during prefill), how the decoder must generate output one token at a time during decoding, and how tensor shapes stay consistent as representations flow through attention, cross-attention, and feed-forward layers.
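The contrast between parallel processing of the input and one-token-at-a-time decoding can be checked numerically. This sketch assumes a single causal self-attention head with hypothetical random weights, and it uses a key/value cache, a standard inference technique that the post does not explicitly mention. To make the two paths comparable, the decoding loop is fed the same known token vectors rather than freshly sampled tokens.

```python
import numpy as np

rng = np.random.default_rng(1)
n, d = 5, 4  # hypothetical: 5 tokens, model width 4
W_q, W_k, W_v = (rng.normal(size=(d, d)) for _ in range(3))
X = rng.normal(size=(n, d))  # stand-in token vectors (embeddings + positions)


def softmax(z: np.ndarray) -> np.ndarray:
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


# Prefill: all n tokens go through one batch of matrix multiplications.
Q, K, V = X @ W_q, X @ W_k, X @ W_v                          # each (n, d)
scores = Q @ K.T / np.sqrt(d)                                # (n, n)
mask = np.tril(np.ones((n, n), dtype=bool))                  # causal: no peeking ahead
prefill_out = softmax(np.where(mask, scores, -np.inf)) @ V   # (n, d)

# Decoding: one token per step, reusing cached keys and values.
K_cache = np.empty((0, d))
V_cache = np.empty((0, d))
steps = []
for t in range(n):
    x_t = X[t:t + 1]                             # (1, d): a single new token
    K_cache = np.vstack([K_cache, x_t @ W_k])    # (t+1, d)
    V_cache = np.vstack([V_cache, x_t @ W_v])    # (t+1, d)
    s = (x_t @ W_q) @ K_cache.T / np.sqrt(d)     # (1, t+1)
    steps.append(softmax(s) @ V_cache)           # (1, d)
decode_out = np.vstack(steps)                    # (n, d)

print(prefill_out.shape, decode_out.shape)
print(np.allclose(prefill_out, decode_out))
```

Both paths produce the same (5, 4) output. The difference is in scheduling: prefill computes it with one batch of matrix multiplications, while decoding takes n sequential steps, each depending on the cache built by the previous ones.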

Level 4 — Math: You write the equations directly on top of the tensor visualization and understand what each matrix multiplication corresponds to.
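For reference, these are the standard equations usually written on top of such a diagram, in conventional notation. This is an assumption: the seminar's symbols and layer ordering may differ. The equations assume one head, post-layer-norm residual connections, and a ReLU feed-forward network.

```latex
Q = X W_Q, \quad K = X W_K, \quad V = X W_V,
\qquad X \in \mathbb{R}^{n \times d_{\text{model}}},\;
W_Q, W_K \in \mathbb{R}^{d_{\text{model}} \times d_k},\;
W_V \in \mathbb{R}^{d_{\text{model}} \times d_v}

\operatorname{Attention}(Q, K, V) = \operatorname{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}} + M\right) V,
\qquad M_{ij} = \begin{cases} 0 & j \le i \\ -\infty & j > i \end{cases}
\ \text{(causal mask, decoder only)}

\text{Cross-attention: } Q = H_{\text{dec}} W_Q,\quad K = H_{\text{enc}} W_K,\quad V = H_{\text{enc}} W_V

H = \operatorname{LayerNorm}\bigl(X + \operatorname{Attention}(Q, K, V)\, W_O\bigr)

\operatorname{FFN}(H) = \max(0,\; H W_1 + b_1)\, W_2 + b_2,
\qquad Z = \operatorname{LayerNorm}\bigl(H + \operatorname{FFN}(H)\bigr)

p(\text{next token}) = \operatorname{softmax}\bigl(z_n W_{\text{out}}\bigr),
\qquad z_n = \text{last row of } Z
```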

Level 5 — Excel: You replicate attention, projection, and softmax step by step in Excel—without libraries, abstractions, or training wheels—so you can directly observe how probabilities emerge from matrix operations.
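The spreadsheet exercise can be mirrored cell by cell in plain Python, using only the standard-library `math` module and no array library. This sketch uses hypothetical 2-token, 2-dimensional inputs and weights, omits the causal mask (encoder-style self-attention), and prints every intermediate table so it can be compared against a hand-built sheet.

```python
import math


def matmul(A, B):
    """Cell-by-cell matrix product, like a grid of SUMPRODUCT formulas."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A):
    return [list(row) for row in zip(*A)]


def softmax_row(row):
    m = max(row)                           # subtract the row max for stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical inputs: 2 tokens, model width 2.
X = [[1.0, 0.0],
     [1.0, 1.0]]
W_q = [[1.0, 0.0], [0.0, 1.0]]
W_k = [[1.0, 0.0], [0.0, 1.0]]
W_v = [[1.0, 2.0], [0.0, 1.0]]

# Step 1: projections.
Q, K, V = matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)
# Step 2: scaled dot-product scores.
d_k = len(K[0])
scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(Q, transpose(K))]
# Step 3: row-wise softmax turns scores into attention weights.
weights = [softmax_row(row) for row in scores]
# Step 4: weighted sum of value vectors.
out = matmul(weights, V)

for name, M in [("Q", Q), ("K", K), ("V", V), ("scores", scores),
                ("weights", weights), ("out", out)]:
    print(name, [[round(v, 4) for v in row] for row in M])
```

The first token's two scores are equal, so its attention weights are exactly [0.5, 0.5]; the second token's larger self-score pulls its weights to roughly [0.33, 0.67].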

Level 6 — Coding: You move to implementation only after progressing through the earlier levels and earning the right to build: you can explain embeddings, attention flow, projection, and sampling in plain language, and you can specify the mechanics clearly rather than leaning on high-level buzzwords.

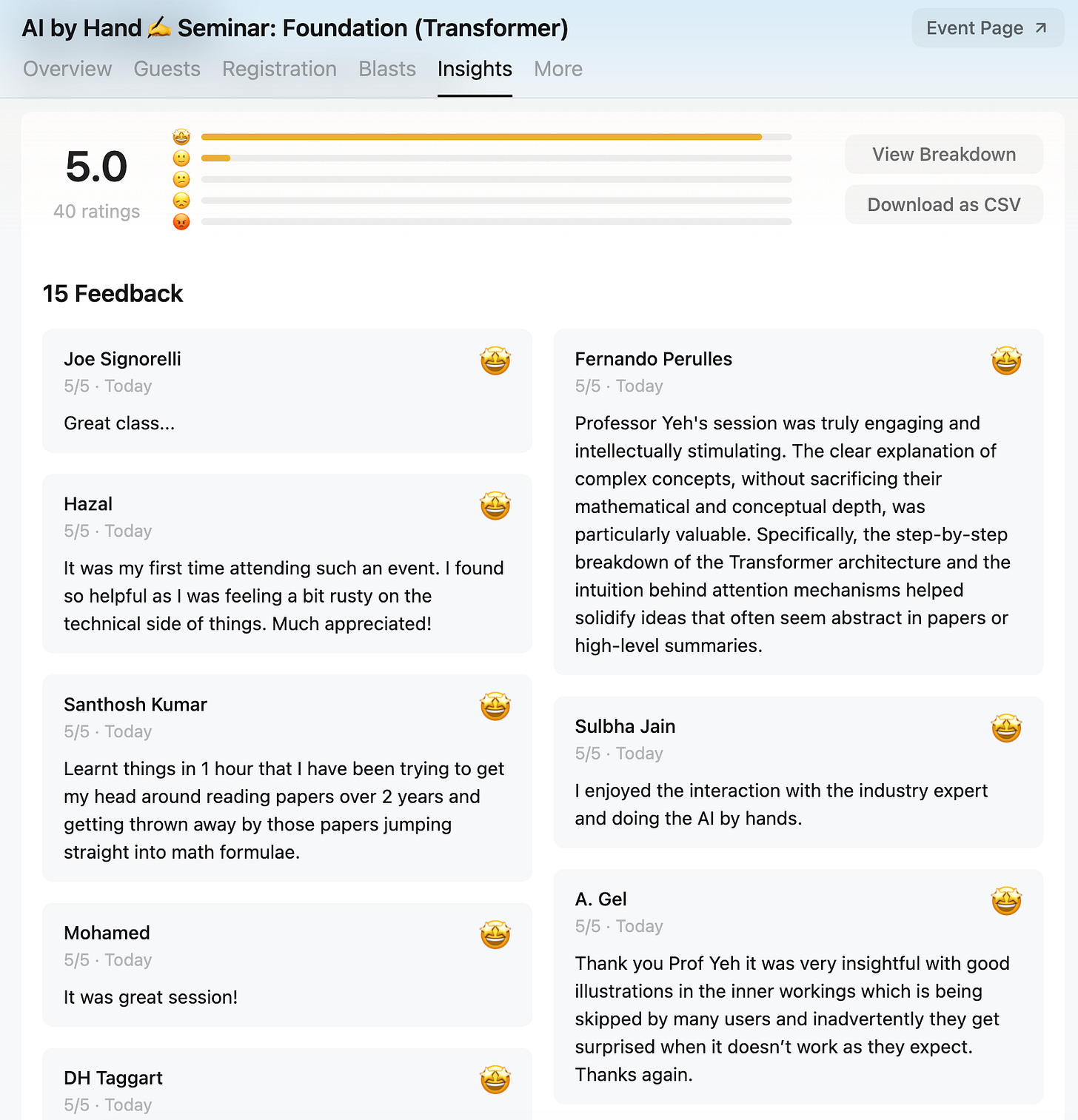
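Sampling, one of the mechanics named in Level 6, is the step that turns the next-token distribution into an actual token. A minimal sketch, assuming greedy decoding and temperature sampling (two common strategies; the post does not say which the seminar covered), with a hypothetical four-token vocabulary and made-up logits. The function name `sample_next_token` is illustrative.

```python
import numpy as np


def sample_next_token(logits: np.ndarray, temperature: float,
                      rng: np.random.Generator) -> int:
    """Pick a token id from raw logits; temperature 0 means greedy."""
    if temperature == 0:
        return int(np.argmax(logits))            # greedy: most likely token
    z = logits / temperature                     # <1 sharpens, >1 flattens
    p = np.exp(z - z.max())
    p /= p.sum()                                 # softmax -> probabilities
    return int(rng.choice(len(p), p=p))          # draw one token id


logits = np.array([2.0, 1.0, 0.5, -1.0])         # hypothetical 4-token vocabulary
rng = np.random.default_rng(42)
print(sample_next_token(logits, 0.0, rng))       # greedy pick → 0
draws = [sample_next_token(logits, 1.0, rng) for _ in range(1000)]
print(np.bincount(draws, minlength=len(logits)) / len(draws))
```

Greedy decoding is deterministic, while temperature sampling draws tokens roughly in proportion to their softmax probabilities, so the empirical frequencies approach the distribution as the number of draws grows.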

Feedback

Recording & Workbook

(now open to all newsletter subscribers as a limited-time preview)

The full recording and the associated Excel workbook are available to AI by Hand Academy members. You can become a member via a paid Substack subscription.

Previous Seminars

2/5/2026: Meta Frontier AI Papers: Superintelligence Lab vs. FAIR

1/29/2026: 9 AI Eval Formulas

1/22/2026: Google Ironwood TPU: From Bits to HBM

1/15/2026: How Small Models Learn Tool Use from AWS

1/8/2026: Manifold-Constrained Hyper Connection (mHC) from DeepSeek

1/8/2026: Introduction to Generative AI